Weekend Project: 3D Physarum and Volume Pathtracing

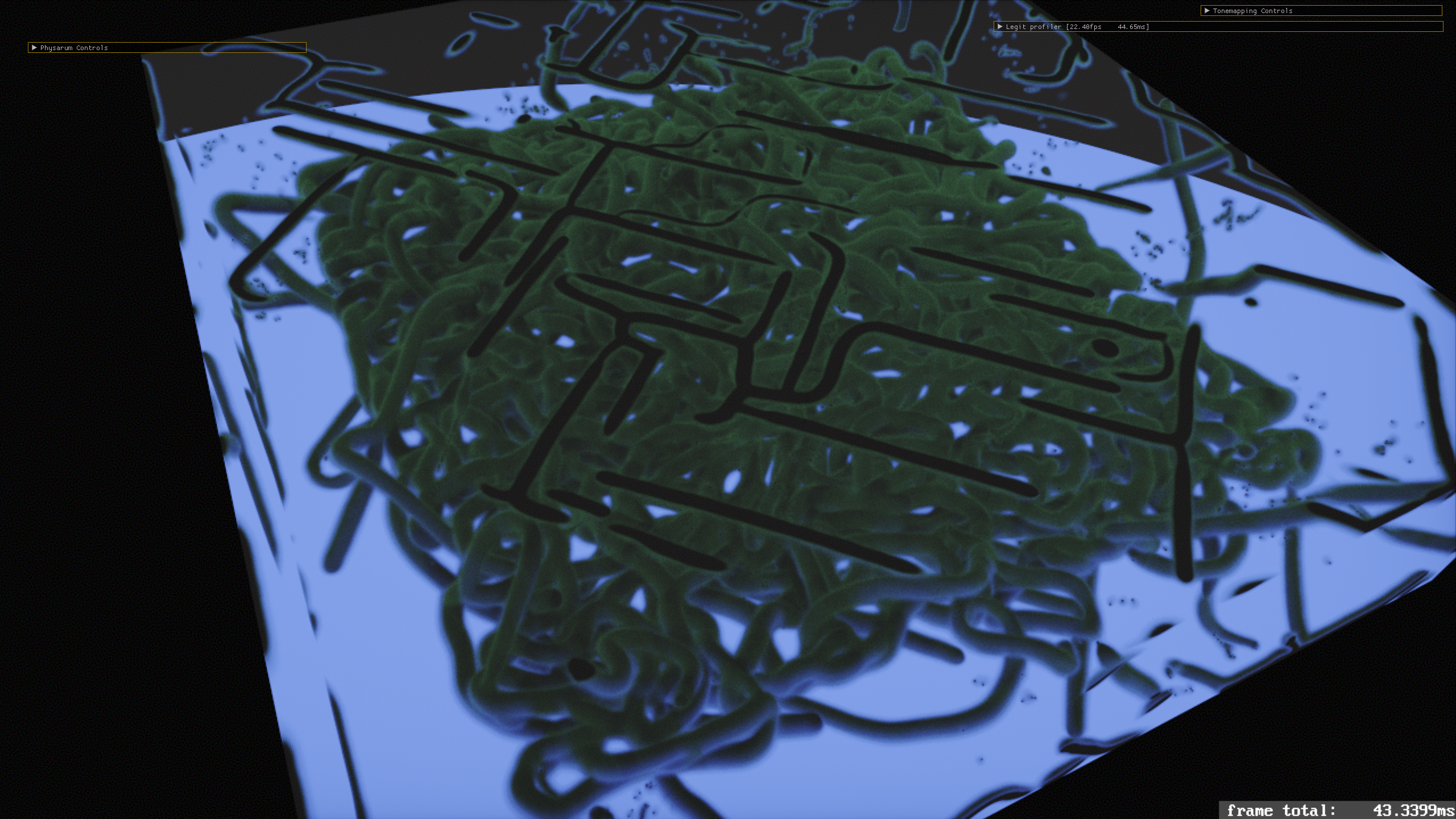

This is something I've wanted to do for quite some time. I have done a 2D and a sort of 2.5D version of the physarum sim, and a 3D version has been on my list for a while. I had a partial implementation, but I ended up scrapping much of it and reimplementing it over Labor Day weekend.

Volume Pathtracing

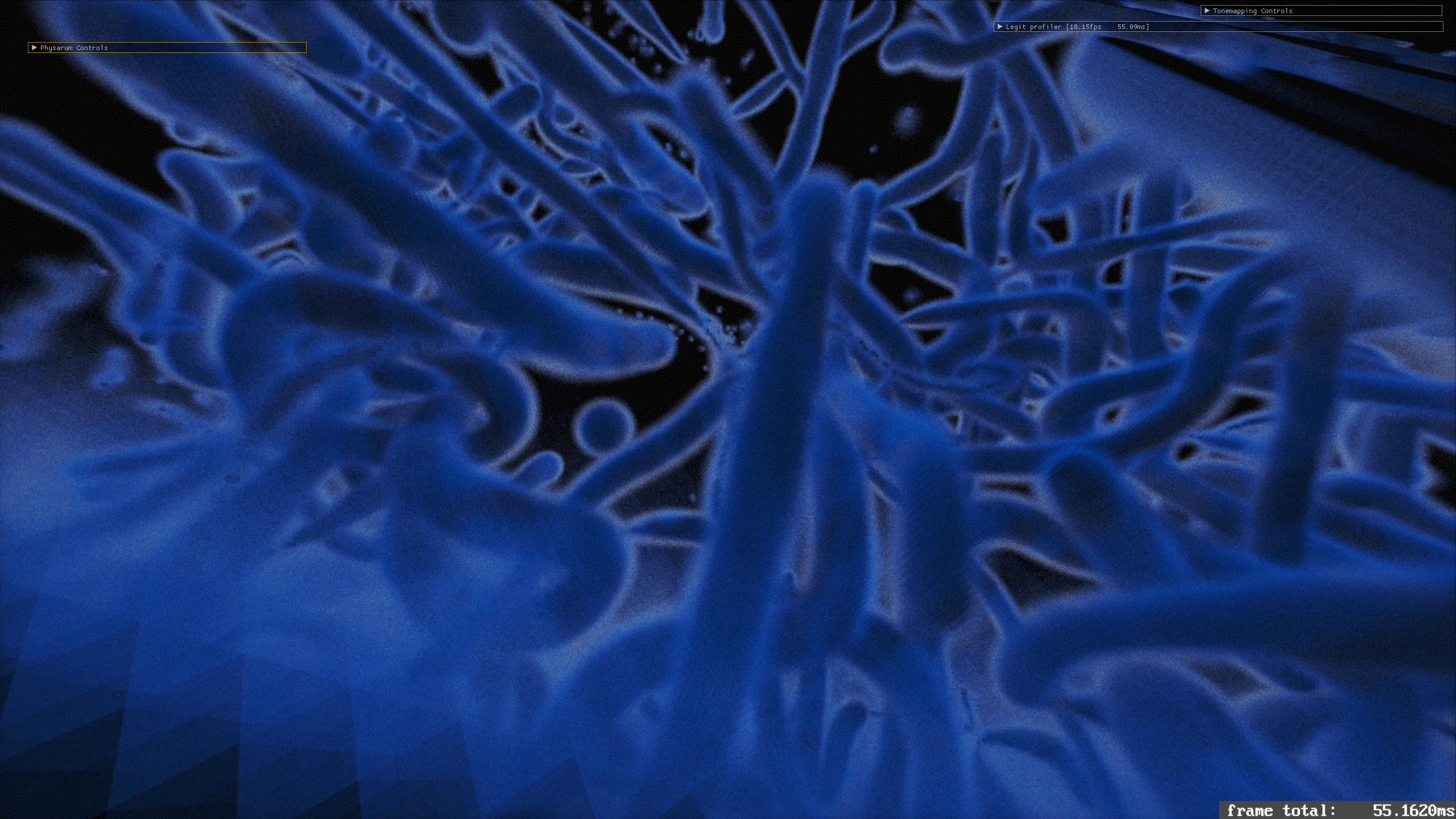

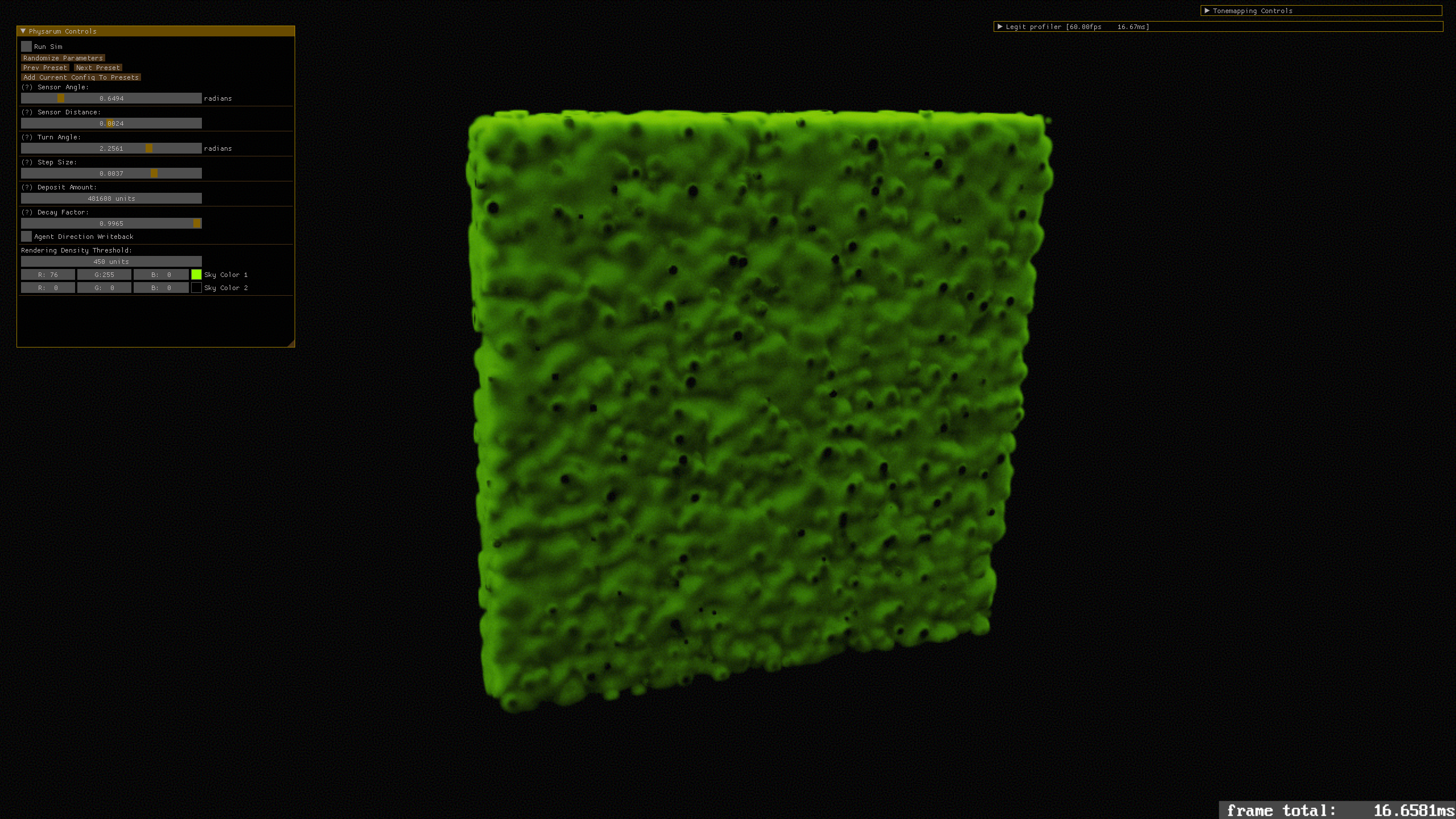

This is a rendering algorithm that has some similarities with the uniform volume material presented in Ray Tracing: The Next Week, but it does differ in a couple of ways. Firstly, instead of being a uniform volume, where you assume that all points within the volume have the same density, I am using a 32-bit uint 3D texture to hold the state of this sim. Some of the volumes here are as large as 1024x1024x256. I can divide this uint value by some large constant to get a floating point density value for each texel in that volume.

So that's the starting point for the algorithm. With that set up, we generate camera rays from the viewer position towards a box containing the volume. If a ray hits the volume, it begins a traversal process. This is a DDA traversal across the texture containing the density. At each step, we generate a random number in the range 0 to 1 and compare it to the density value to make a decision: does the ray scatter? If the generated number is less than the density, the answer is yes. This means denser regions are more likely to scatter the ray, which seems physically plausible.
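The traversal and scatter test described above can be sketched on the CPU roughly as follows. This is a minimal illustration and not the renderer's actual shader code: `DENSITY_SCALE`, `density_at`, and `first_scatter` are hypothetical names, the volume is a nested list indexed `[z][y][x]` rather than a 3D texture, and the ray is assumed to start inside the grid.

```python
import math
import random

DENSITY_SCALE = 10_000.0  # hypothetical divisor turning uint counts into a density

def density_at(volume, cell):
    """Convert the uint stored at a cell into a density clamped to at most 1."""
    x, y, z = cell
    return min(volume[z][y][x] / DENSITY_SCALE, 1.0)

def first_scatter(volume, dims, origin, direction, rng):
    """Step a ray through the grid with a DDA; return the scattering cell or None."""
    cell = [int(math.floor(c)) for c in origin]
    step = [1 if d > 0 else -1 for d in direction]
    # Ray-parameter distance between successive grid planes on each axis
    t_delta = [abs(1.0 / d) if d != 0 else math.inf for d in direction]
    # Ray-parameter distance from the origin to the first grid plane on each axis
    t_max = []
    for c, o, d in zip(cell, origin, direction):
        if d > 0:
            t_max.append((c + 1 - o) / d)
        elif d < 0:
            t_max.append((c - o) / d)
        else:
            t_max.append(math.inf)
    while all(0 <= cell[i] < dims[i] for i in range(3)):
        # Scatter when the random draw falls below the local density
        if rng.random() < density_at(volume, cell):
            return tuple(cell)
        # Advance across whichever grid plane the ray reaches first
        axis = t_max.index(min(t_max))
        cell[axis] += step[axis]
        t_max[axis] += t_delta[axis]
    return None  # the ray left the volume without scattering

volume = [[[0] * 4 for _ in range(4)] for _ in range(4)]
volume[0][0][3] = 10_000  # one fully dense texel on the ray's path
hit = first_scatter(volume, (4, 4, 4), (0.5, 0.5, 0.5), (1.0, 0.0, 0.0), random.Random(1))
# hit == (3, 0, 0): empty cells never scatter, the dense one always does
```

A full path would continue from the scattering cell with a new direction and an attenuated transmission; this sketch stops at the first event to keep the DDA bookkeeping visible.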

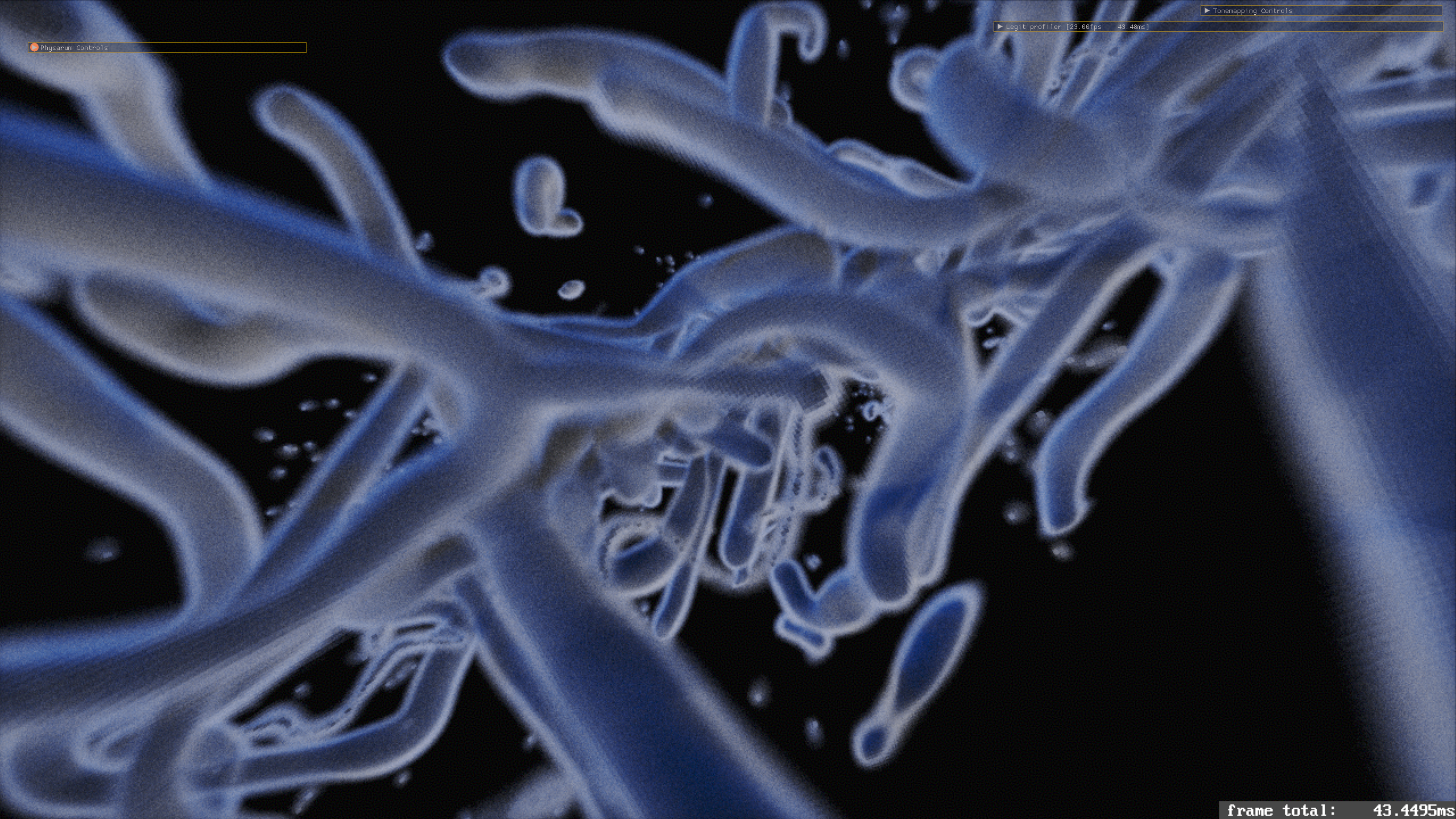

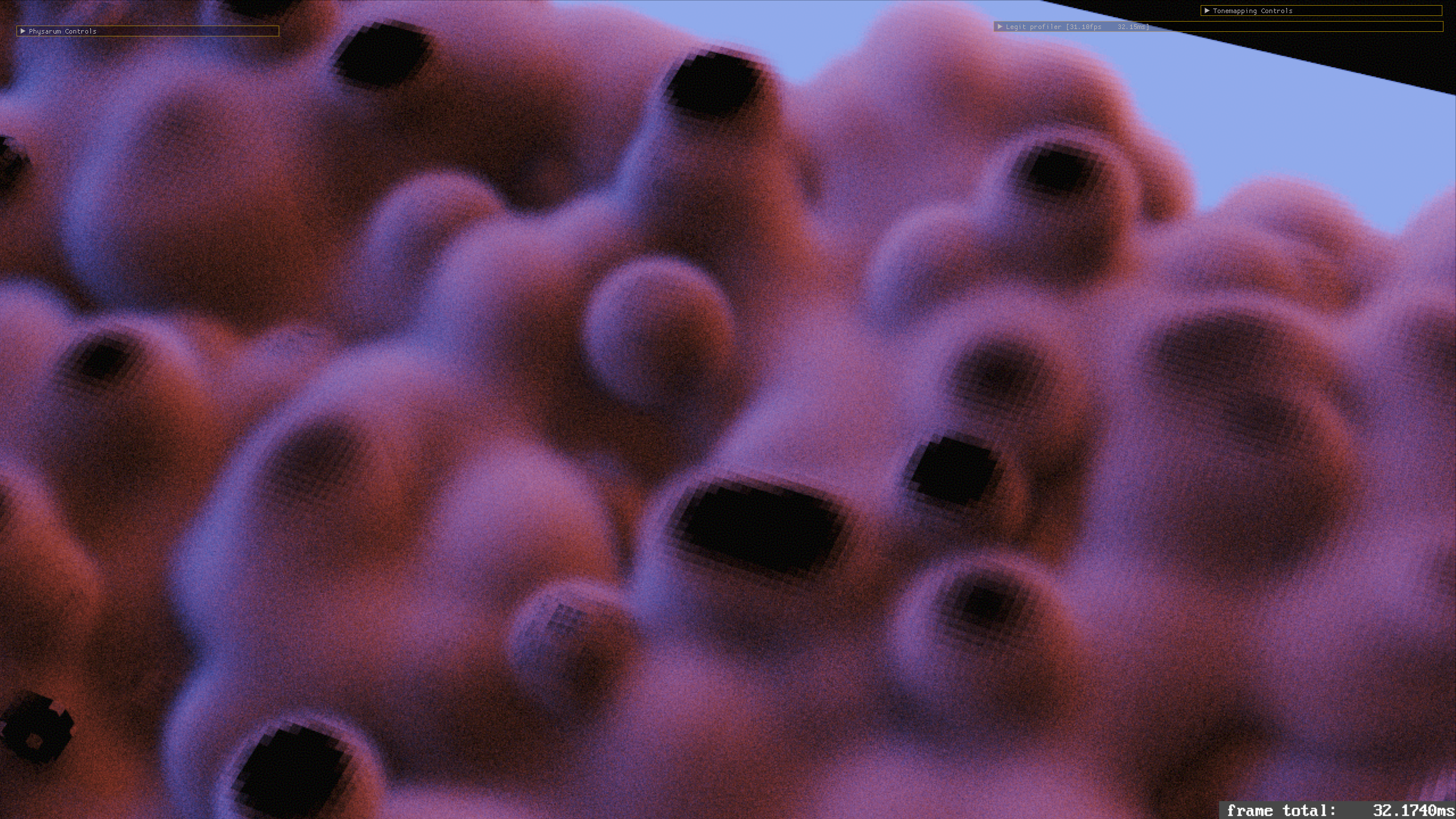

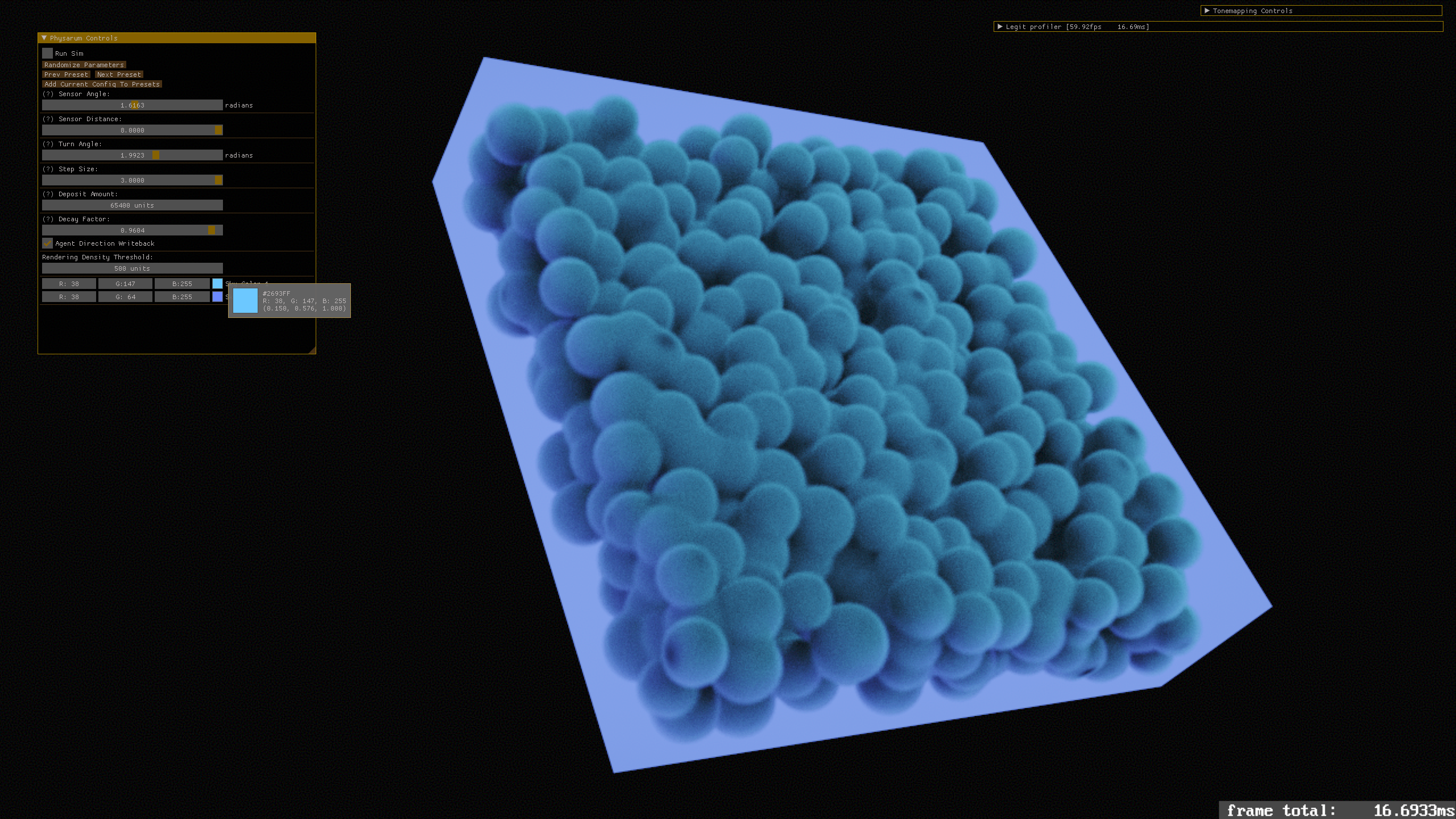

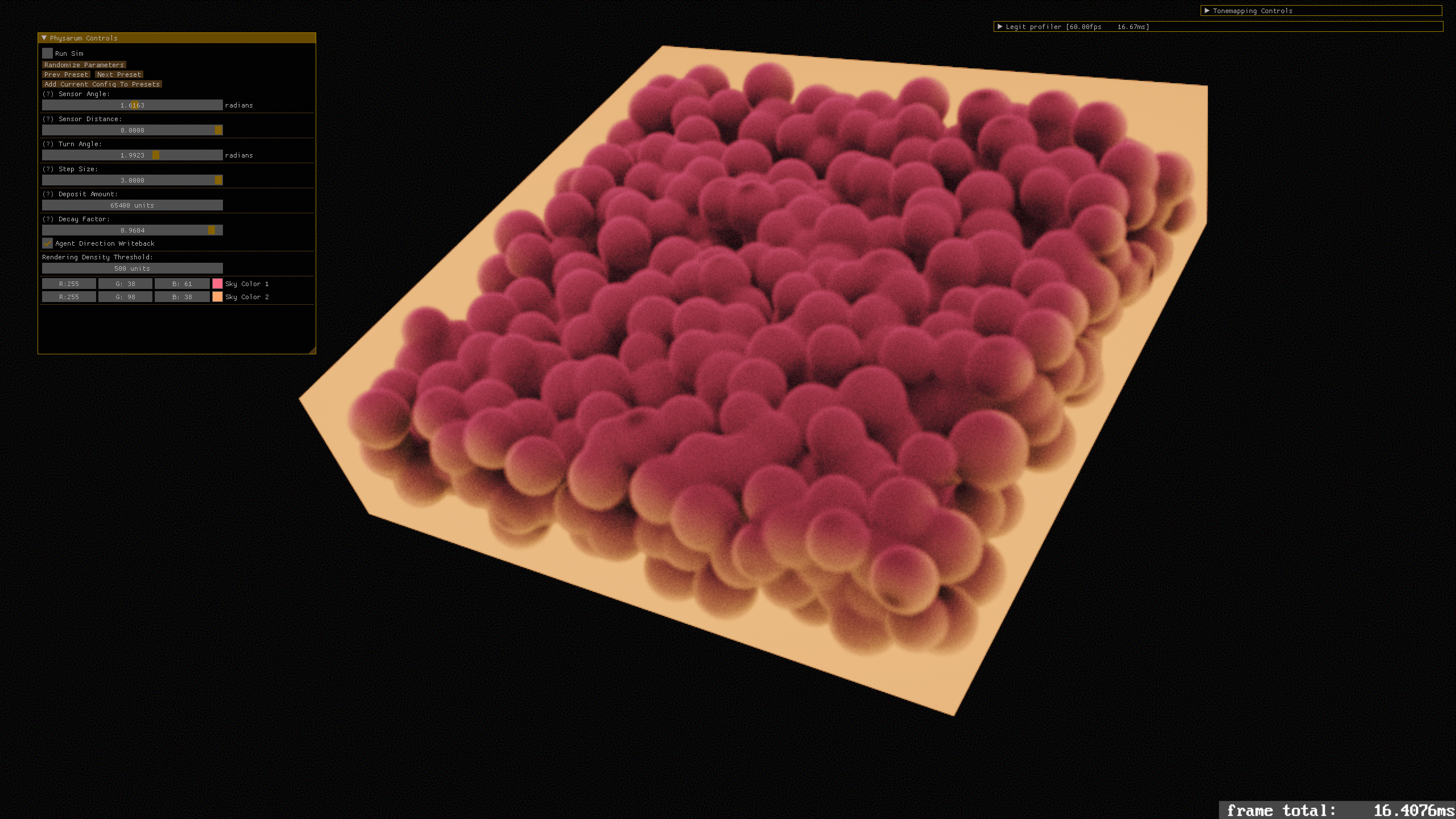

When this scattering event happens, we have some attenuation of the transmission along the ray (correlated with the density at that location), and the ray direction changes. This represents the ray deflecting off of the medium in the volume. I have been messing with how to generate this new direction vector. What I started with was just a random unit vector, so the ray is equally likely to scatter in all directions. I think this is reasonable. There isn't really a normal, as such, unless you are going to also solve for the gradient of the density texture. Because of this, we can't easily treat it like a surface... but we do know the previous ray direction, and can use this to compute a weighted sum: some weight times the previous ray direction, plus a random unit vector, with the result normalized.

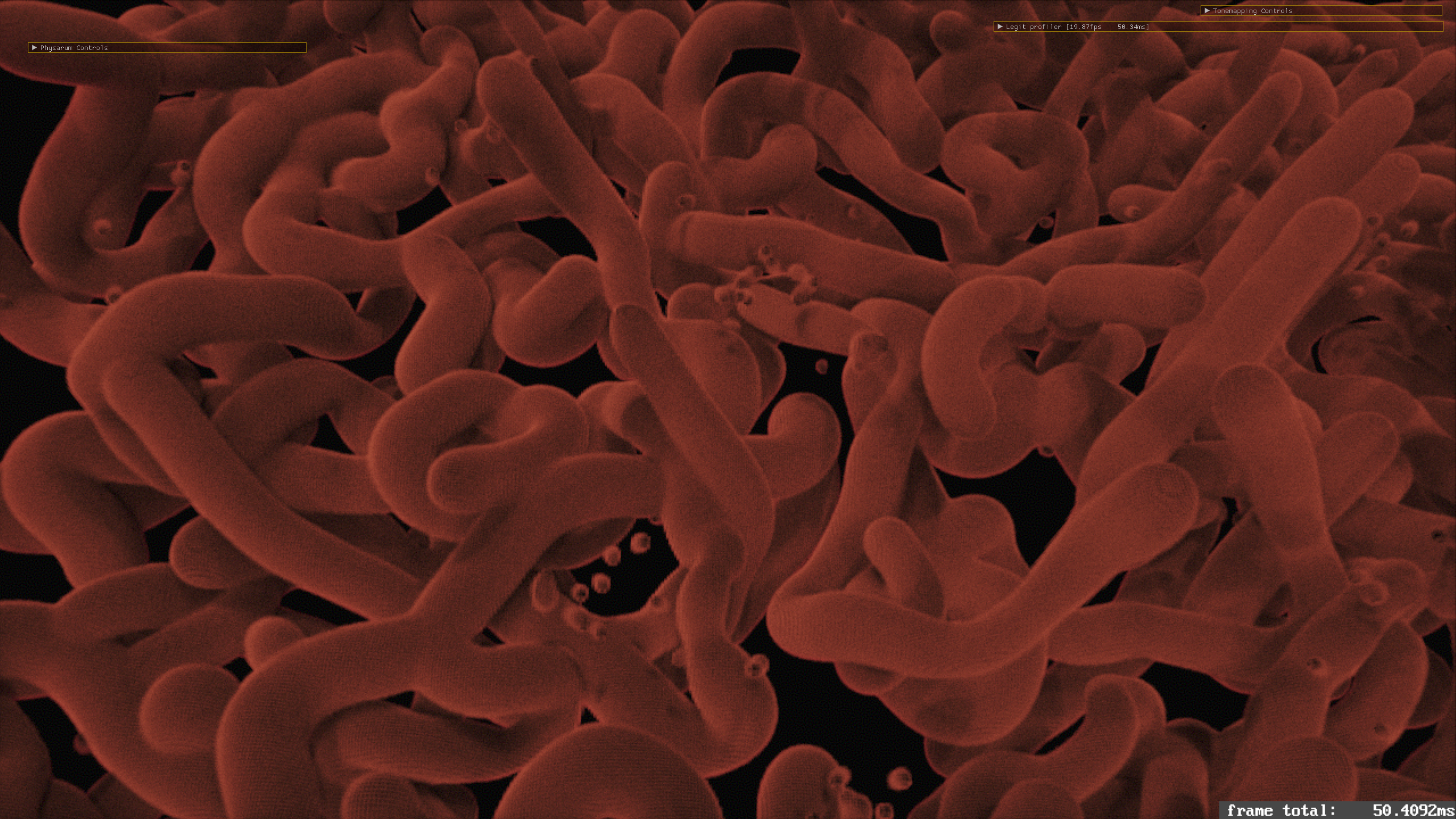

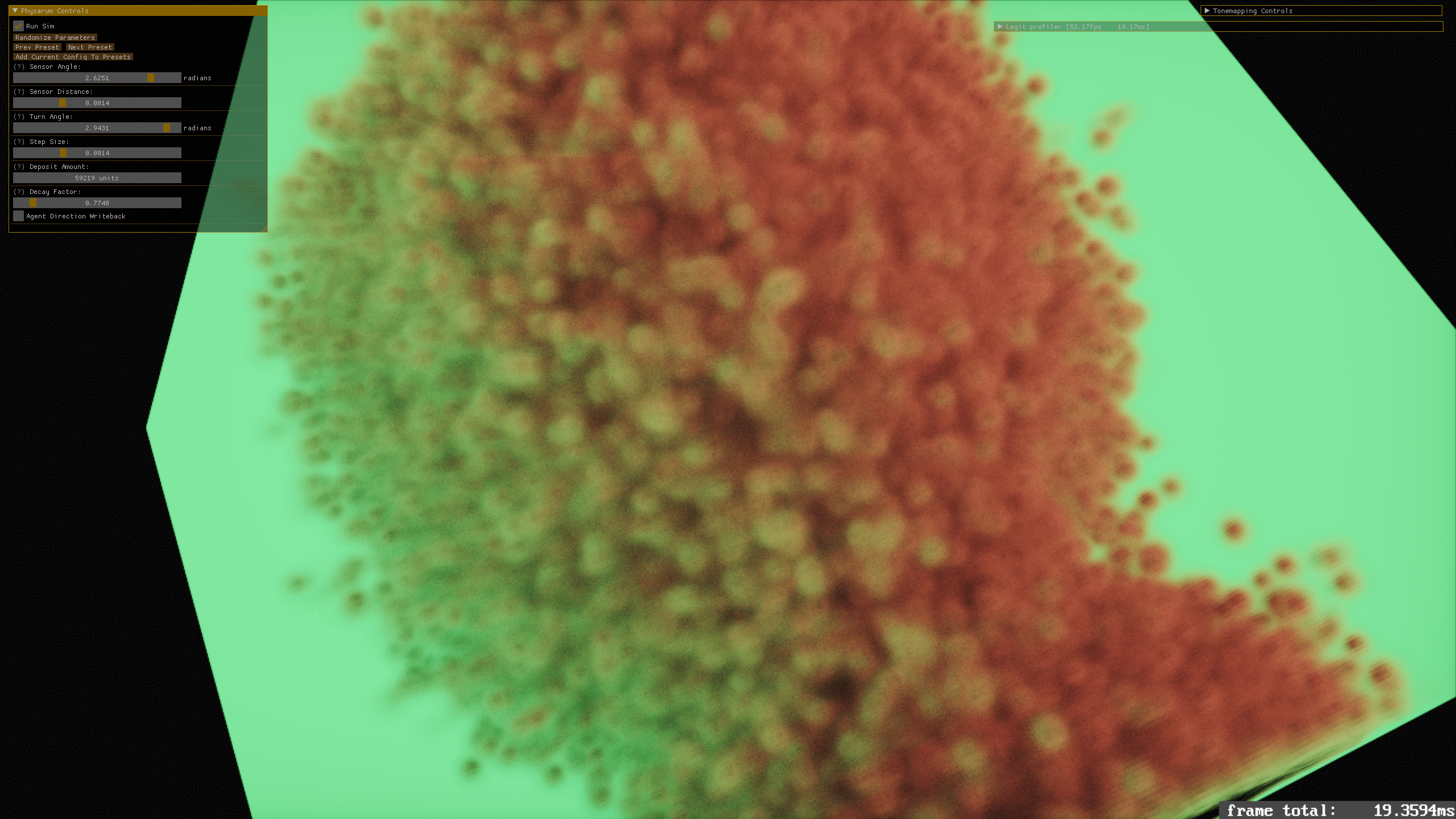

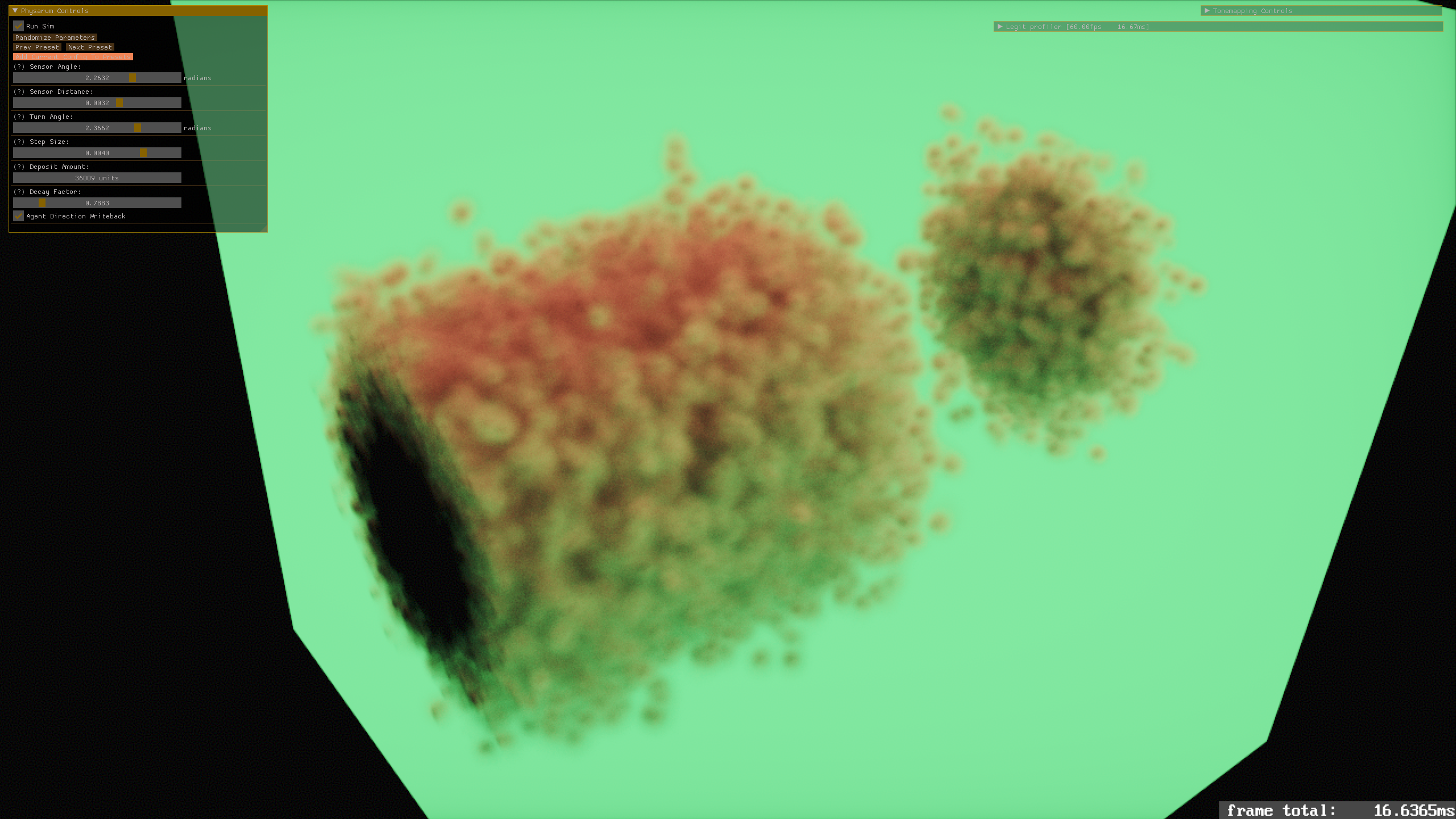

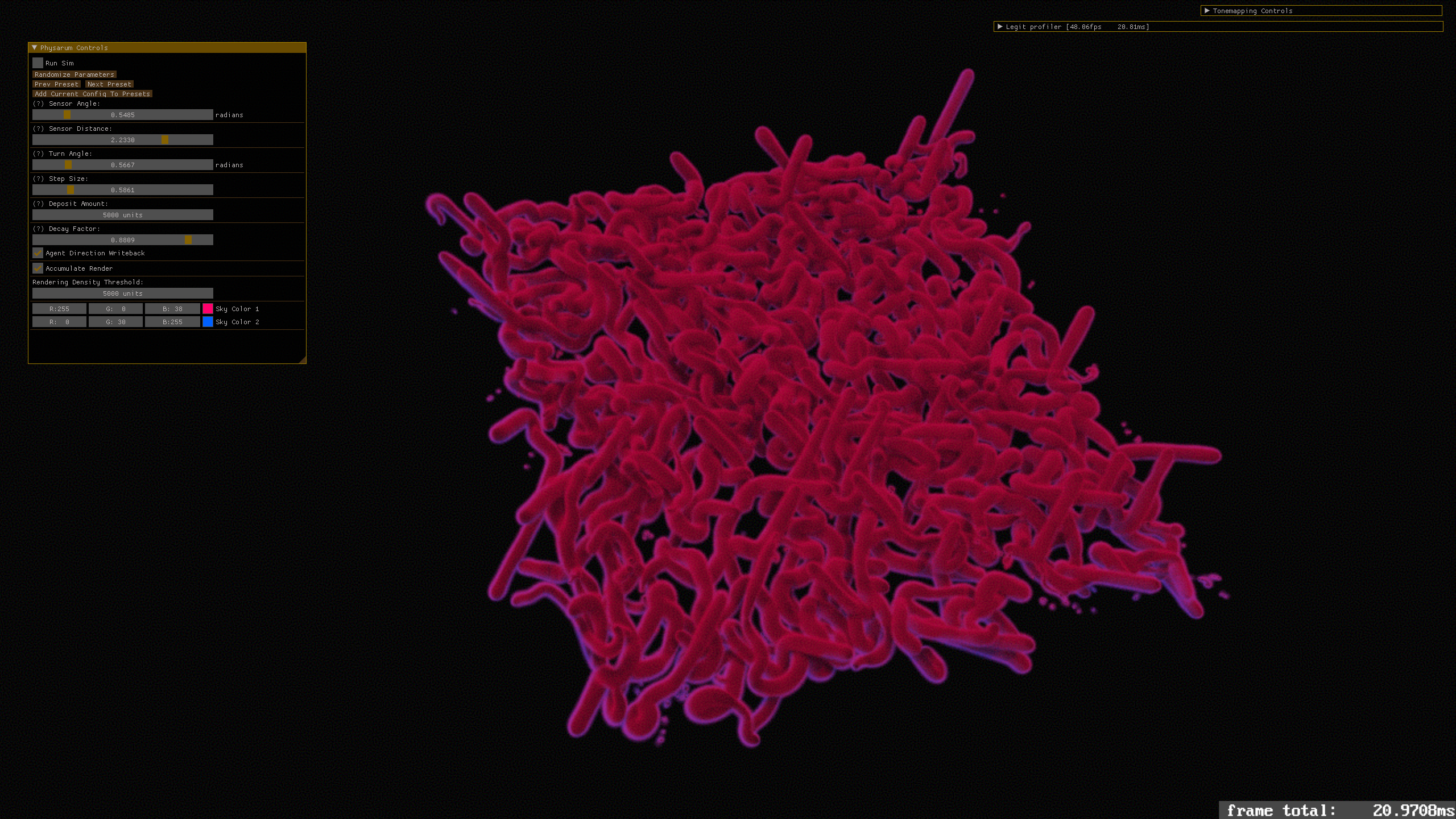

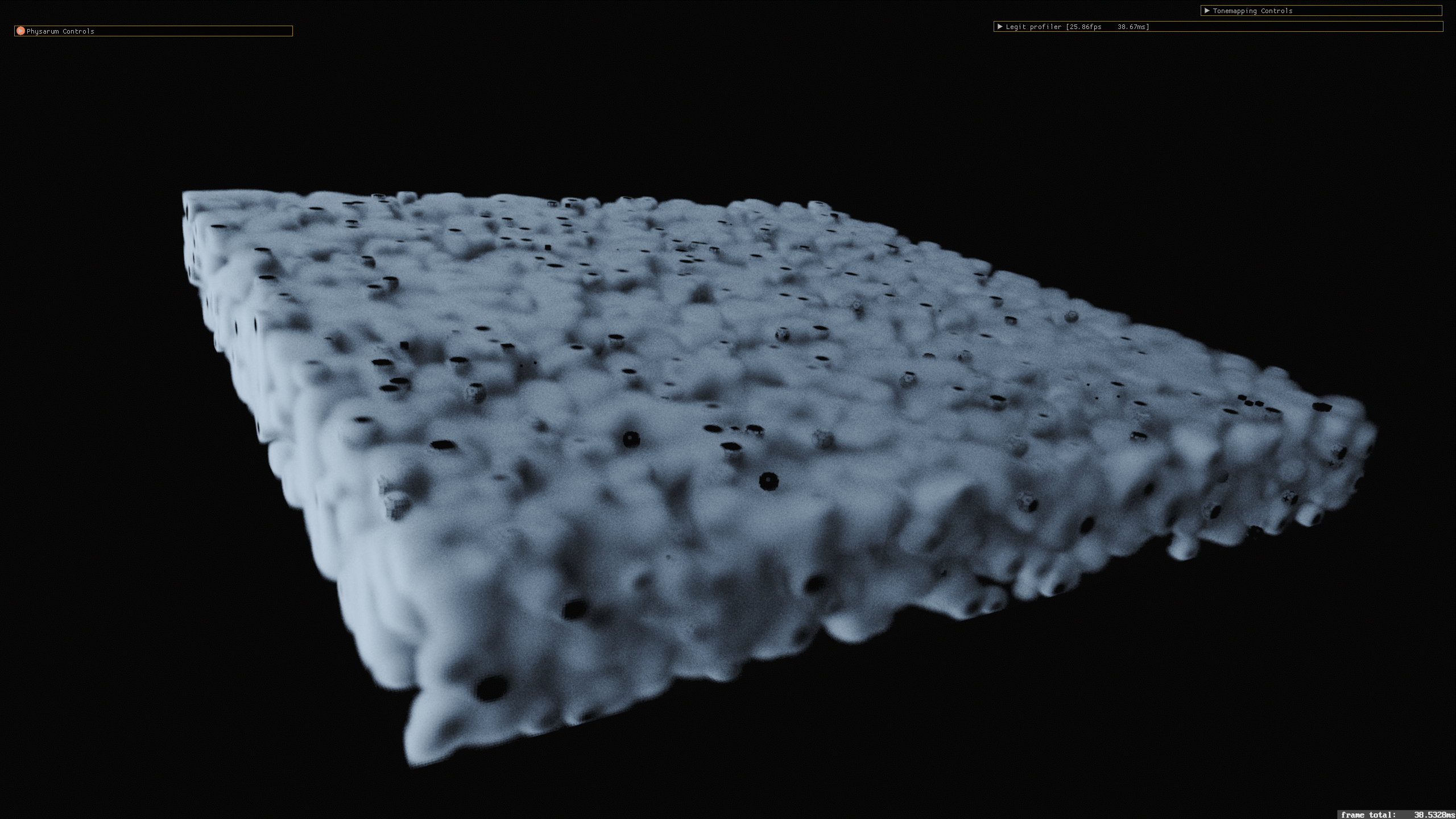

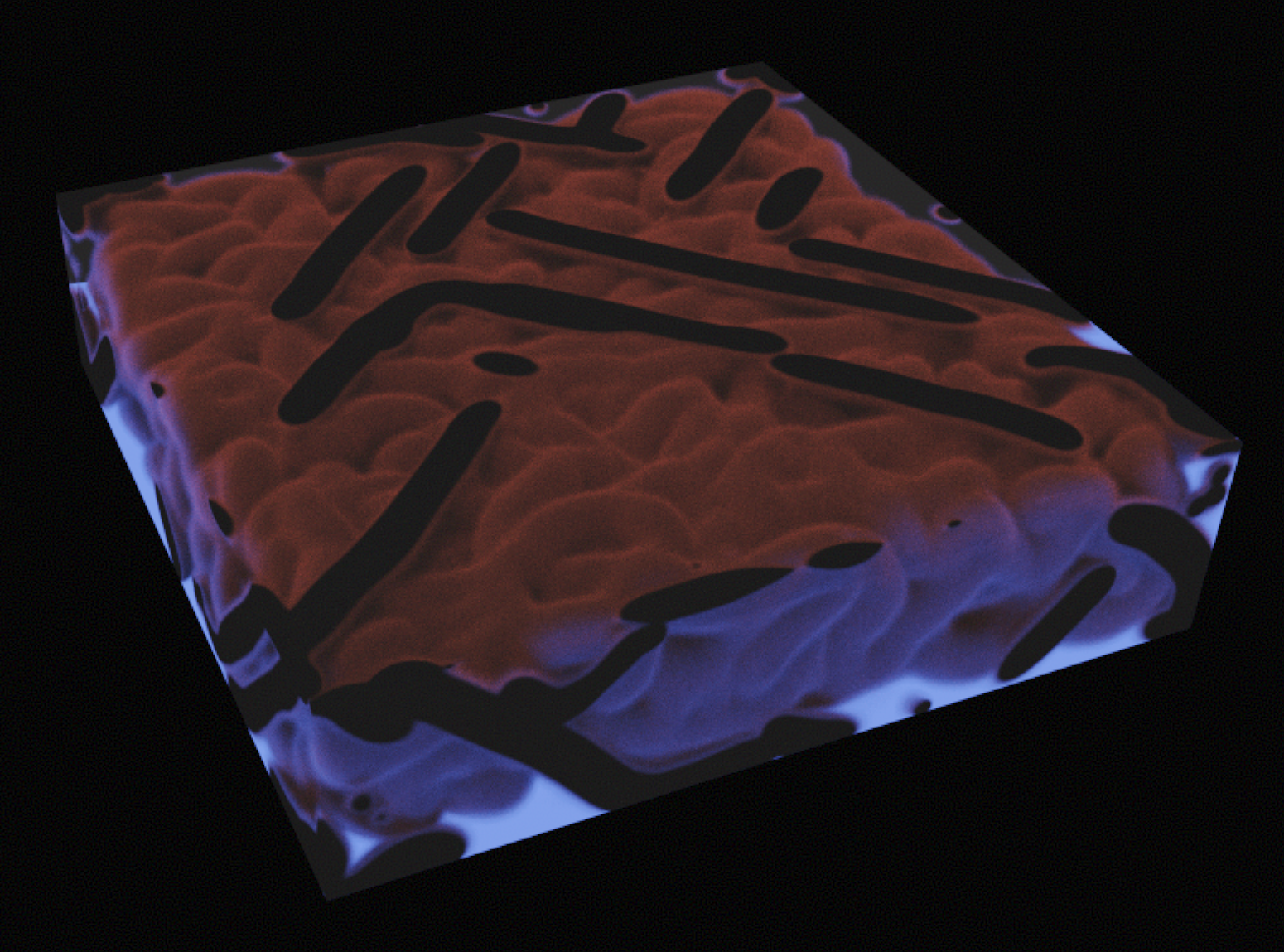

Doing this, you can get a couple of different types of behavior during this scattering. I like how much some of these look like electron microscope images. Using a larger, more positive weight on the previous ray direction, we get something like forward scattering. With a negative weight, we start to see a kind of diffuse back scattering, where the volume gets treated a bit like a surface that the ray is bouncing off. This maps to the notion of a phase function, as used in the Nubis-style realtime volumetrics implementations that have become very popular in AAA games lately.
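The weighted-sum direction described above can be sketched like this. It is an illustration under assumptions, not the renderer's code: `random_unit_vector` and `scatter_direction` are hypothetical names, and the fallback for a near-zero sum is an added safeguard that the post does not mention.

```python
import math
import random

def random_unit_vector(rng):
    """Uniformly distributed direction on the unit sphere."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)

def scatter_direction(prev_dir, weight, rng):
    """Normalize weight * prev_dir + random unit vector.

    weight = 0 gives isotropic scattering, a positive weight biases the
    result forward, and a negative weight biases it backward.
    """
    rx, ry, rz = random_unit_vector(rng)
    x = weight * prev_dir[0] + rx
    y = weight * prev_dir[1] + ry
    z = weight * prev_dir[2] + rz
    length = math.sqrt(x * x + y * y + z * z)
    if length < 1e-8:
        # Assumed safeguard: the two terms nearly cancelled, so fall back
        # to the plain random direction instead of dividing by ~0
        return (rx, ry, rz)
    return (x / length, y / length, z / length)

rng = random.Random(7)
forward = scatter_direction((0.0, 0.0, 1.0), 5.0, rng)    # biased along +z
backward = scatter_direction((0.0, 0.0, 1.0), -5.0, rng)  # biased along -z
```

Since the random term has length 1, any weight with magnitude above 1 confines the new direction to the forward (or backward) hemisphere. Weights between -1 and 1 only bias the distribution.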

This being a stochastic process, of course there is noise in the output. I was surprised, though, to see how quickly it resolved to a smooth result - you can see a short video showing realtime interaction with the volumetric pathtracing renderer above. You can see the sky lights through the bounding box - that was from before I added a check for 100% transmission, which now falls back to a black background in that case. It supports viewer-in-volume, and I think this is an interesting rendering method to explore further.

Physarum Sim, Extended to 3D

I referred back to the Sage Jenson post for the physarum logic, again. I had to make some changes to the logic around the sense taps, because the 2D sim has all three taps coplanar. In order to move in three dimensions, it's not sufficient to store only a scalar rotation, so I have instead included a 3x3 matrix for orienting each simulation agent. This gives us an orthonormal basis to work with, oriented with the simulation agent, when generating sample tap positions. Instead of the single forward sample and two flanking samples, there is now a forward sample and three flanking samples. These flanking samples are placed very similarly to the 2D version, but use some 3D offset and rotation logic based on the agent basis and the current sim parameters.
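One possible way to place a forward tap and three flanking taps from an agent's basis is sketched below. The exact placement used in the sim is not spelled out above, so the layout here is an assumption: the three flanking taps are spaced 120 degrees apart around the forward axis and tilted away from it by the sense angle. `sense_taps` and its parameters are hypothetical names.

```python
import math

def sense_taps(position, basis, sense_angle, sense_distance):
    """Return [forward_tap, flank_0, flank_1, flank_2] as 3-tuples.

    basis is (forward, right, up): three mutually orthogonal unit vectors,
    i.e. the columns of the agent's 3x3 orientation matrix.
    """
    forward, right, up = basis
    taps = [tuple(p + sense_distance * f for p, f in zip(position, forward))]
    for k in range(3):
        # Assumed layout: flanking taps evenly spaced around the forward axis
        roll = 2.0 * math.pi * k / 3.0
        # Unit vector perpendicular to forward, rotated about it by roll
        side = tuple(math.cos(roll) * r + math.sin(roll) * u
                     for r, u in zip(right, up))
        # Tilt away from forward by the sense angle, as in the 2D version
        direction = tuple(math.cos(sense_angle) * f + math.sin(sense_angle) * s
                          for f, s in zip(forward, side))
        taps.append(tuple(p + sense_distance * d
                          for p, d in zip(position, direction)))
    return taps

basis = ((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
taps = sense_taps((0.0, 0.0, 0.0), basis, math.pi / 4.0, 2.0)
```

Because the basis is orthonormal, every tap sits exactly `sense_distance` away from the agent, so the sense angle and sense distance can be tuned independently.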

I have found the parameter space to be very finicky, and I have changed enough from my 2D implementation that I am not able to reuse the presets from that project. I will need to experiment more with finding ranges, but I have found a couple of presets that create some very compelling undulating tendrils. You can see it running in realtime, here - it's noisy when you don't accumulate multiple samples, but it does hold 60fps. You can see this one does have some trails when the camera moves, because it uses a constant blend factor with the previous frame for a bit of noise mitigation.
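The constant-blend accumulation mentioned above behaves like an exponential moving average over frames, which also explains the trails. A minimal sketch, with `blend_frames` and the blend value of 0.9 as hypothetical choices and a frame reduced to a flat list of pixel values:

```python
def blend_frames(previous, current, blend):
    """Mix the accumulated frame with the new noisy frame.

    blend is the weight kept from the previous frame: higher values give
    smoother output but longer trails when the camera moves.
    """
    return [blend * p + (1.0 - blend) * c for p, c in zip(previous, current)]

# A pixel that was white (1.0) before the camera moved and is black (0.0) after
accumulated = [1.0]
for _ in range(10):
    accumulated = blend_frames(accumulated, [0.0], 0.9)
# After n frames the stale contribution is blend ** n, here 0.9 ** 10
```

With a blend of 0.9, about a third of the old pixel value is still visible after ten frames, which is the ghosting seen when the camera moves.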

Future Directions

Both pieces of this project are pretty compelling. The renderer is really interesting, and I think that you could go further with some additional textures containing other material information besides density. If some texels were marked emissive and contained color information for the emission, that could start getting very interesting. Things like reflective surfaces, which would need normals, might be interesting to consider here as well.

Wrighter suggested that I try using Beer's law for the logic around the scattering. Currently I'm just using the density directly as a linear threshold for the RNG when making the scattering decisions, whereas Beer's law makes the transmittance fall off exponentially with the density accumulated along the path. I think he's correct to suggest doing that - I have not tried it yet, but I do want to.
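As a rough sketch of what that change could look like: under Beer's law the transmittance across a step of length `step_length` is `exp(-sigma * step_length)`, so the chance of scattering within that step is one minus that. The function names and the `extinction_scale` parameter below are hypothetical, and this has not been tried in the renderer.

```python
import math

def scatter_probability_linear(density):
    """Current approach: the density itself is the per-step scatter chance."""
    return min(max(density, 0.0), 1.0)

def scatter_probability_beer(density, step_length, extinction_scale=1.0):
    """Beer's law: transmittance is exp(-sigma * step), scatter is the rest."""
    sigma = density * extinction_scale  # assumed mapping from density to extinction
    return 1.0 - math.exp(-sigma * step_length)

# For thin media the two agree closely; they diverge as density grows
thin = scatter_probability_beer(0.01, 1.0)
thick = scatter_probability_beer(1.0, 1.0)

# Splitting one step into two half steps leaves the overall chance unchanged
half = scatter_probability_beer(0.5, 0.5)
two_halves = 1.0 - (1.0 - half) ** 2
```

One practical difference is that the exponential form accounts for how far the ray travels through each texel, so the result does not depend on how the path is divided into steps. The linear threshold treats every DDA step the same regardless of its length.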