Physarum Revisit

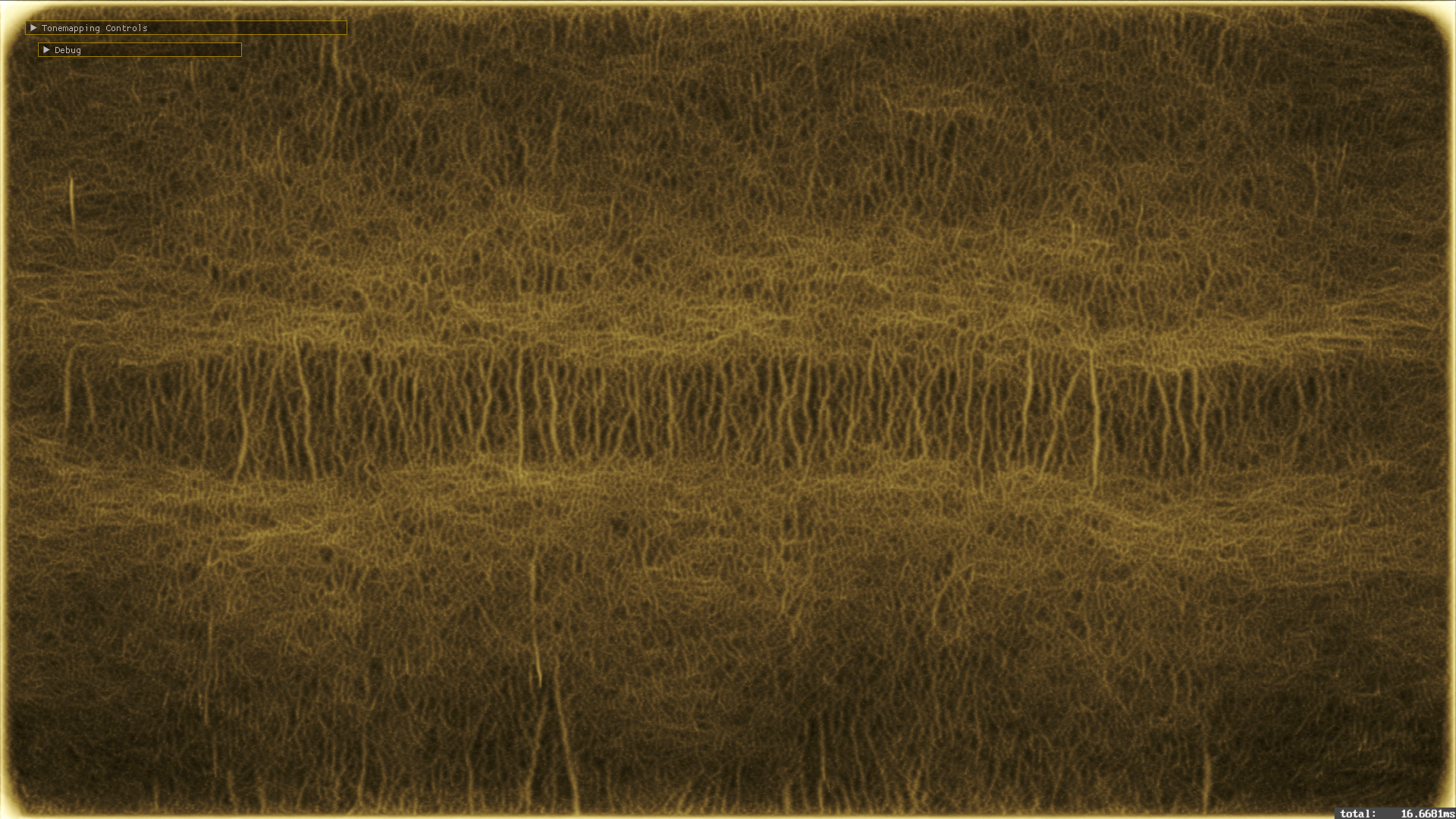

I first got into the physarum simulation in 2020, inspired by the work of Sage Jenson. From what I have seen on their Twitter, they have been doing more with the concept lately, extending it to three dimensions and creating wonderful organic shapes that look like plants and other natural biological structures, but totally alien. I was really impressed and wanted to get back into messing with this a little bit, so I ported my old physarum code to jbDE, polished it up a little, and ended up rewriting almost all of it. One interesting thing I found: the old code never actually wrote agent directions back. Every update, each agent started from the same base direction before rotation. Fixing this made most of the interesting patterns collapse. I am not sure what is happening there at all, and it is worth investigating. For now, I have it on a toggle in the config.
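The difference between the two behaviors can be sketched in a few lines. This is a minimal illustration, not the jbDE code: the `Agent` and `SimConfig` structs, the `updateAgent` function, and the `writeBackDirection` flag name are all hypothetical, and the sensing step is reduced to a precomputed turn sign.

```cpp
#include <cmath>

// Hypothetical agent layout, for illustration only.
struct Agent {
    float x, y;      // position
    float heading;   // stored direction, in radians
};

// Hypothetical config; writeBackDirection stands in for the toggle.
struct SimConfig {
    float turnAngle = 0.3f;         // rotation applied per update
    float stepSize = 1.0f;          // distance moved per update
    bool writeBackDirection = true; // false reproduces the old behavior
};

// turnSign is -1, 0, or +1, as chosen by the sensing step (not shown).
inline void updateAgent(Agent &a, int turnSign, const SimConfig &cfg) {
    // Rotate from the stored heading, then move along the rotated direction.
    const float rotated = a.heading + static_cast<float>(turnSign) * cfg.turnAngle;
    a.x += cfg.stepSize * std::cos(rotated);
    a.y += cfg.stepSize * std::sin(rotated);

    // Old behavior: the rotated heading is dropped, so the next update
    // starts from the same base direction again.
    if (cfg.writeBackDirection) {
        a.heading = rotated;
    }
}
```

With the flag off, an agent can only ever point along its base direction plus or minus one turn, which is a much more constrained motion than the accumulated turning you get with the flag on.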

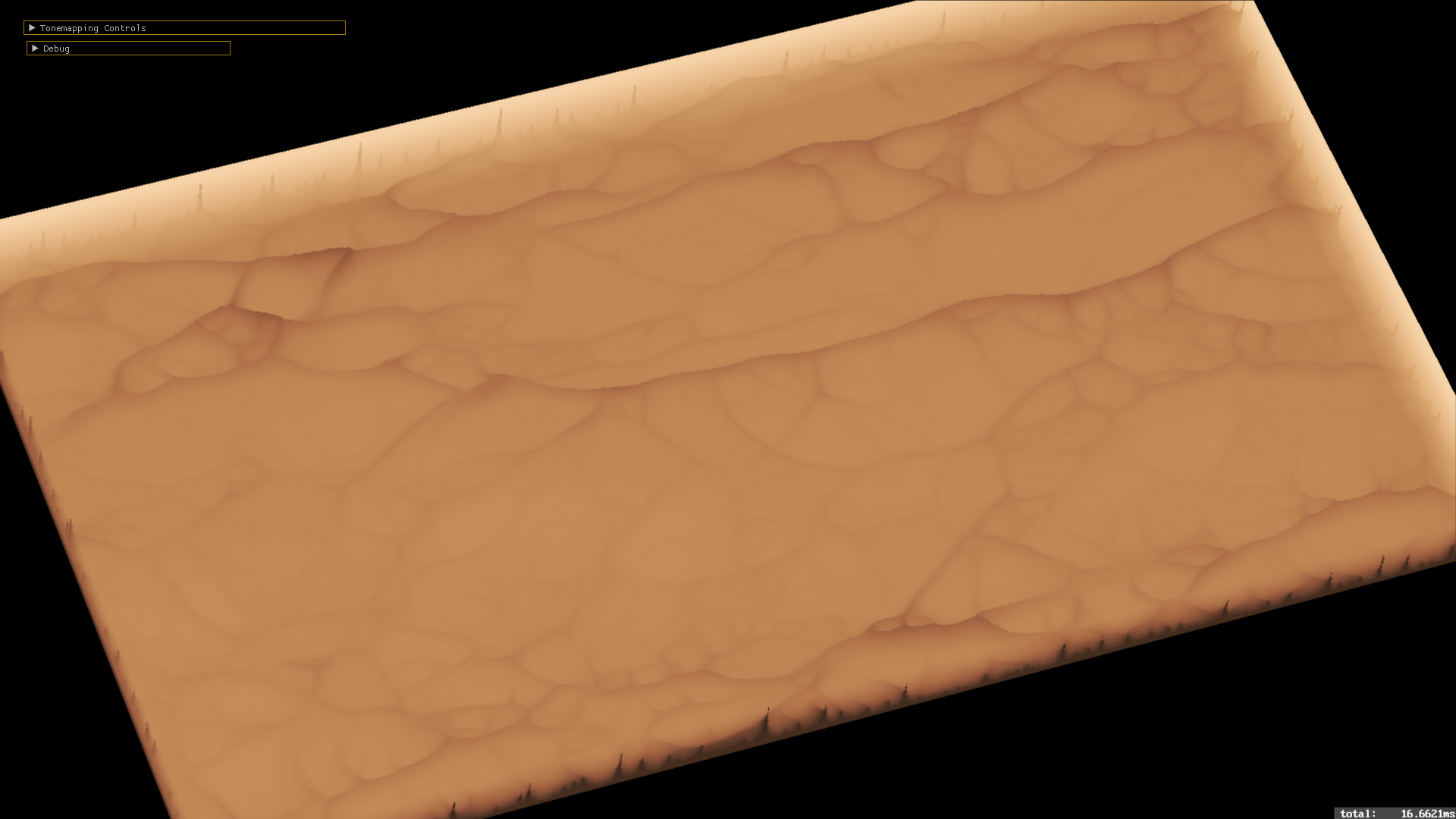

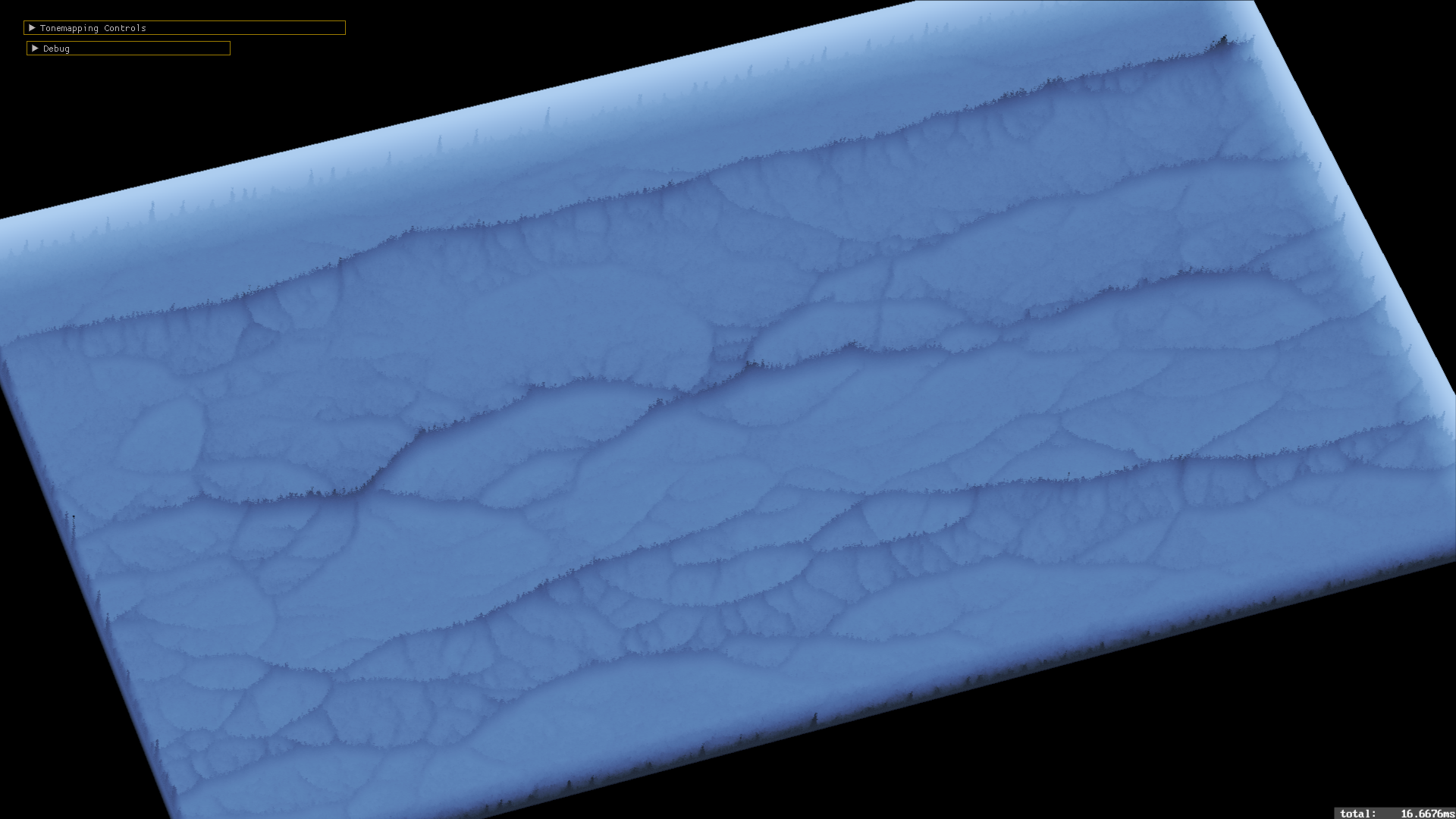

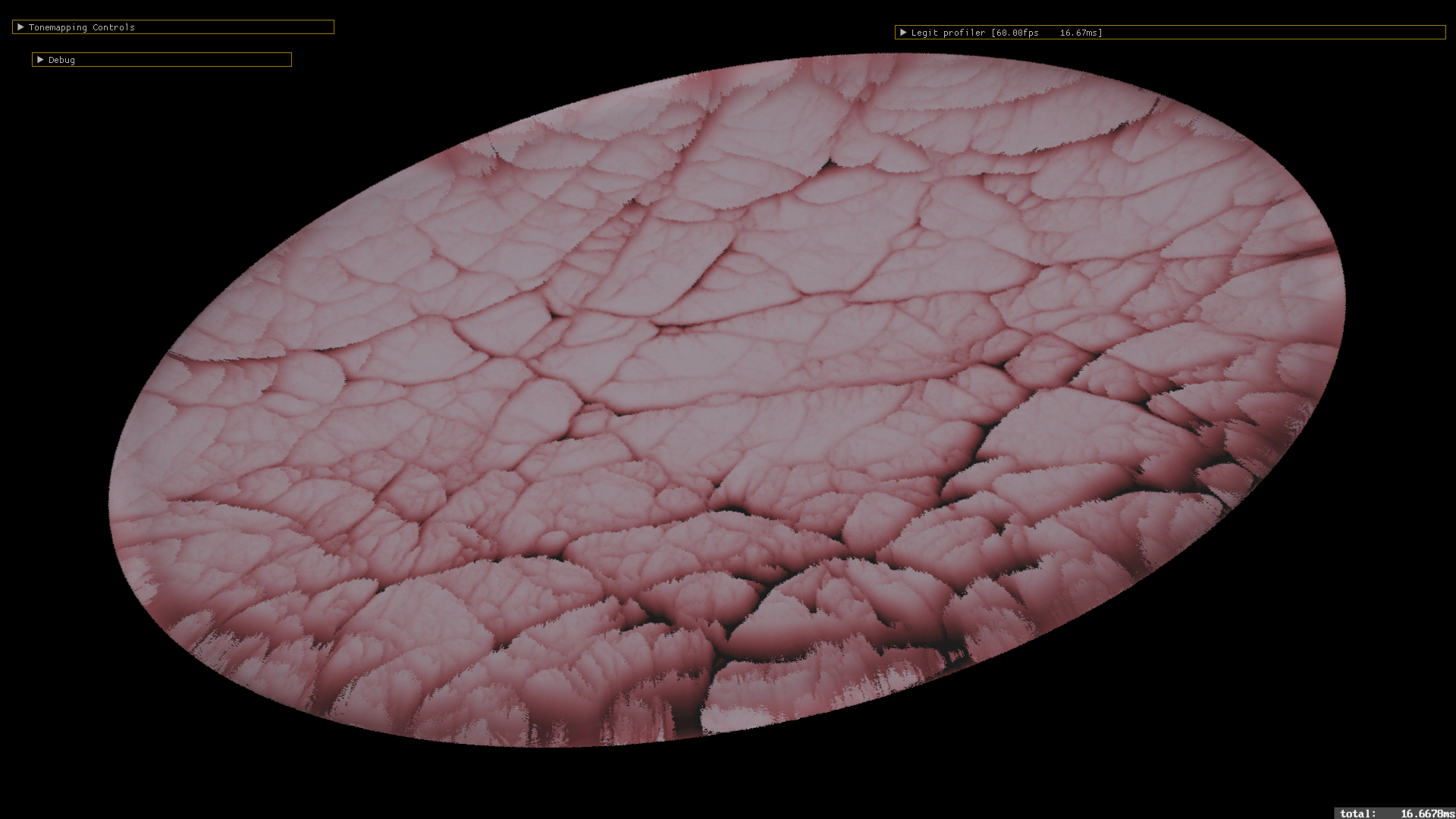

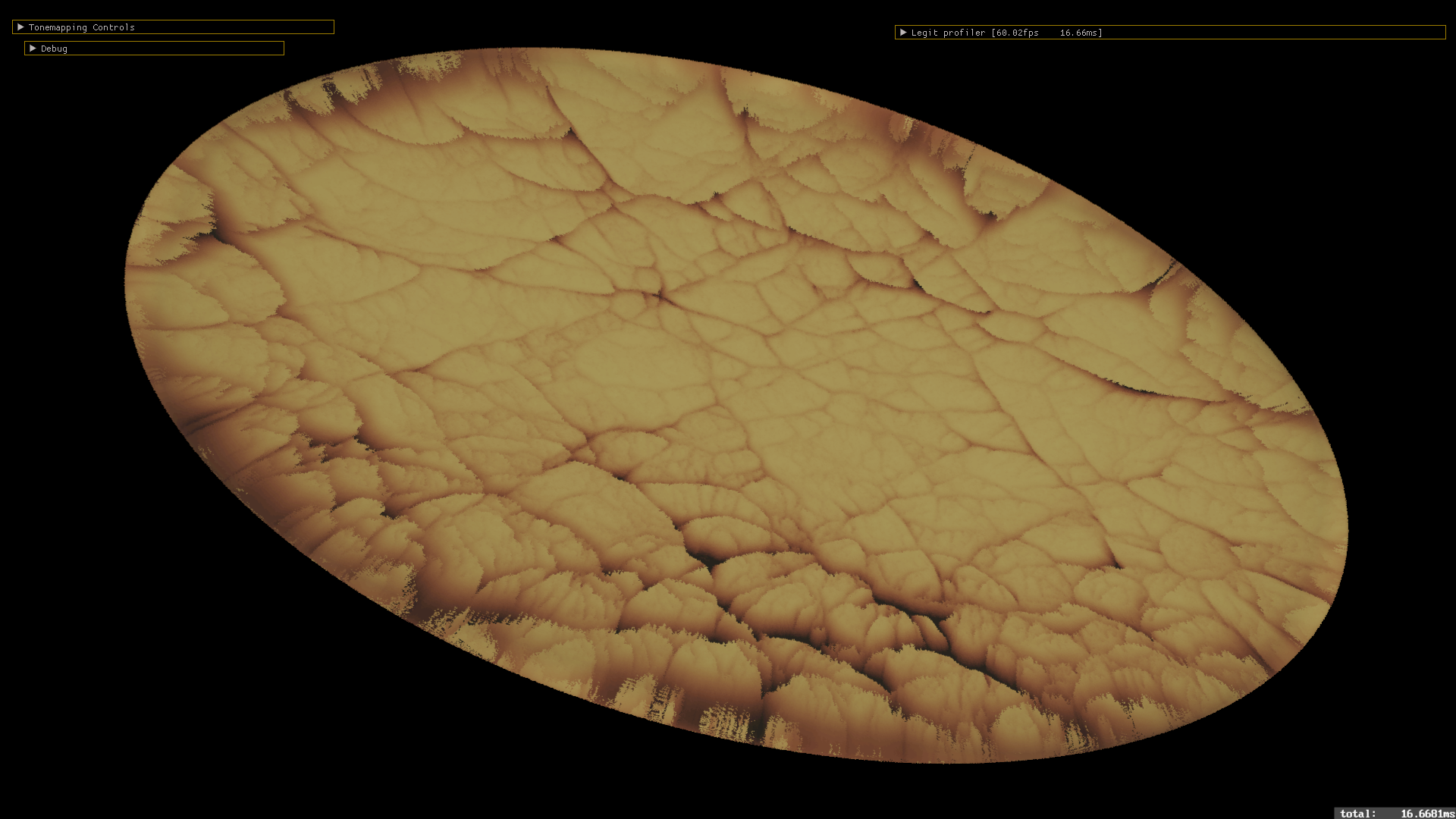

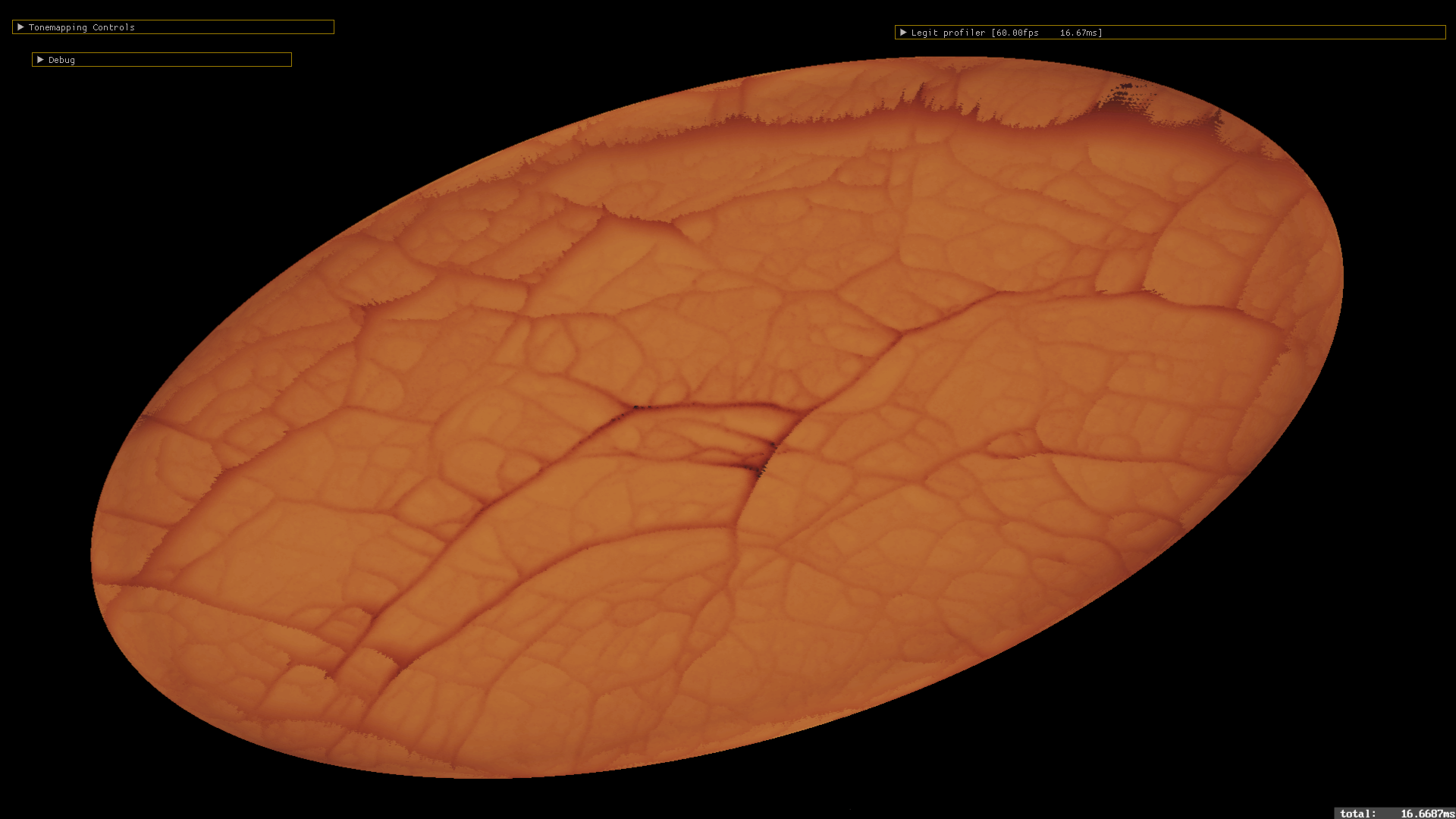

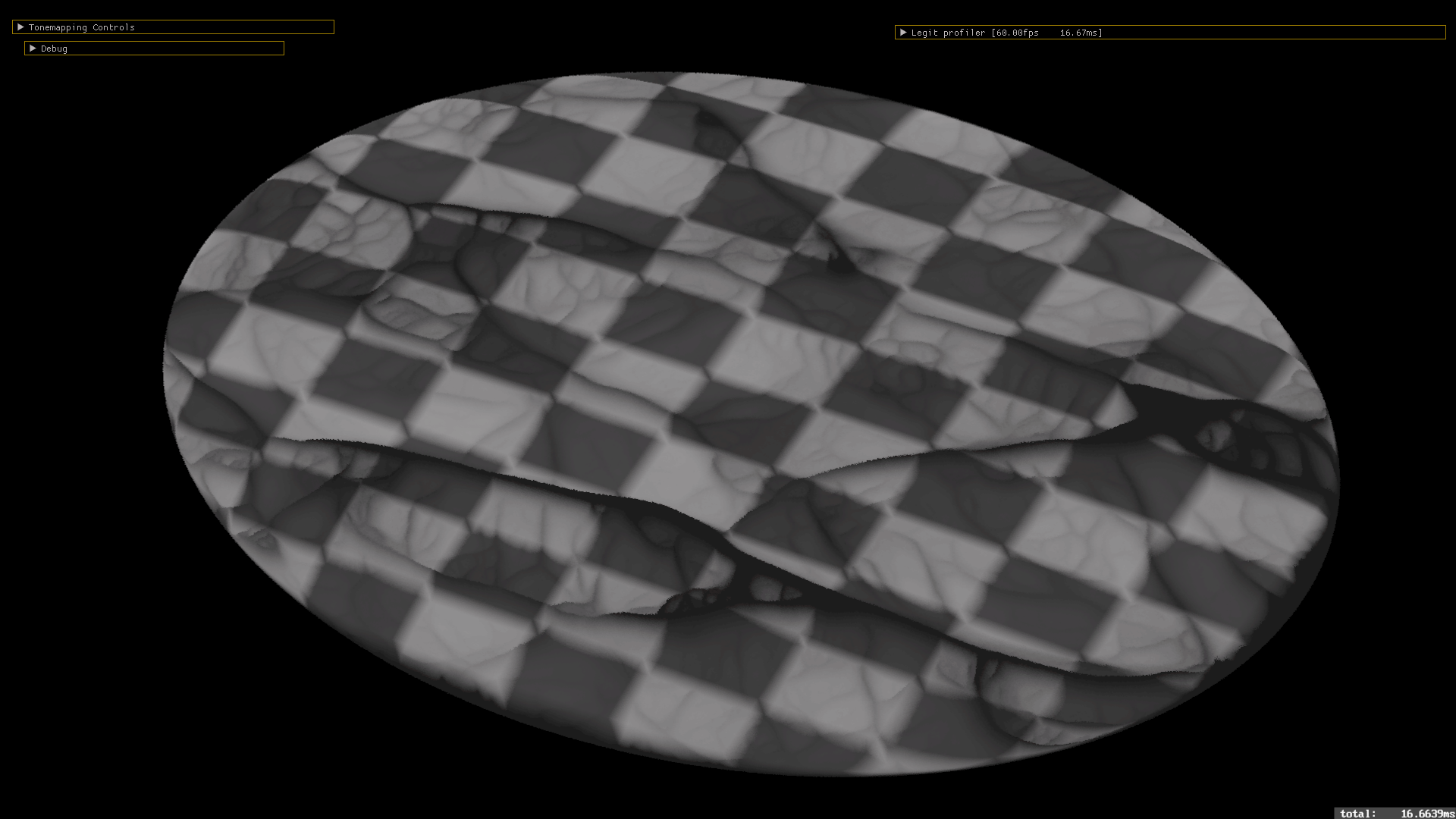

The basic 2d sim can create some very interesting patterns, but visualizing it as a single intensity value on a 2d texture is a little limiting and not that interesting to look at. I had also ported my VoxelSpace algorithm code to jbDE, so I tried that, hooking up the dynamically updating pheromone texture as the heightmap for the renderer, the same way I had used the dynamically updated buffer for the progressively updated hydraulic erosion simulation. It came out kind of interesting, but the field of view was a little constricting, and it didn’t feel like you could see much of the pattern at a time. I wanted to try other methods of visualization.

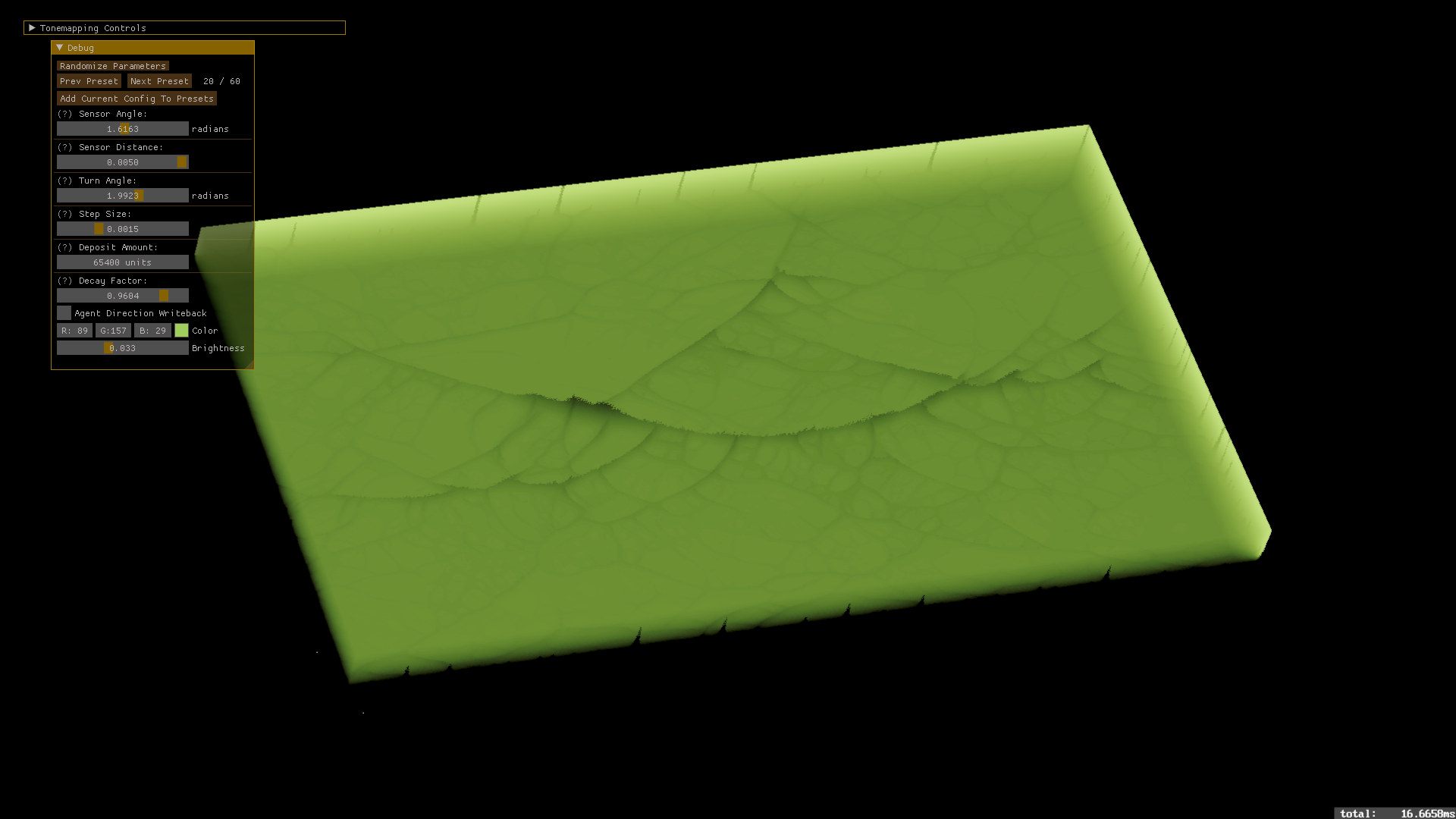

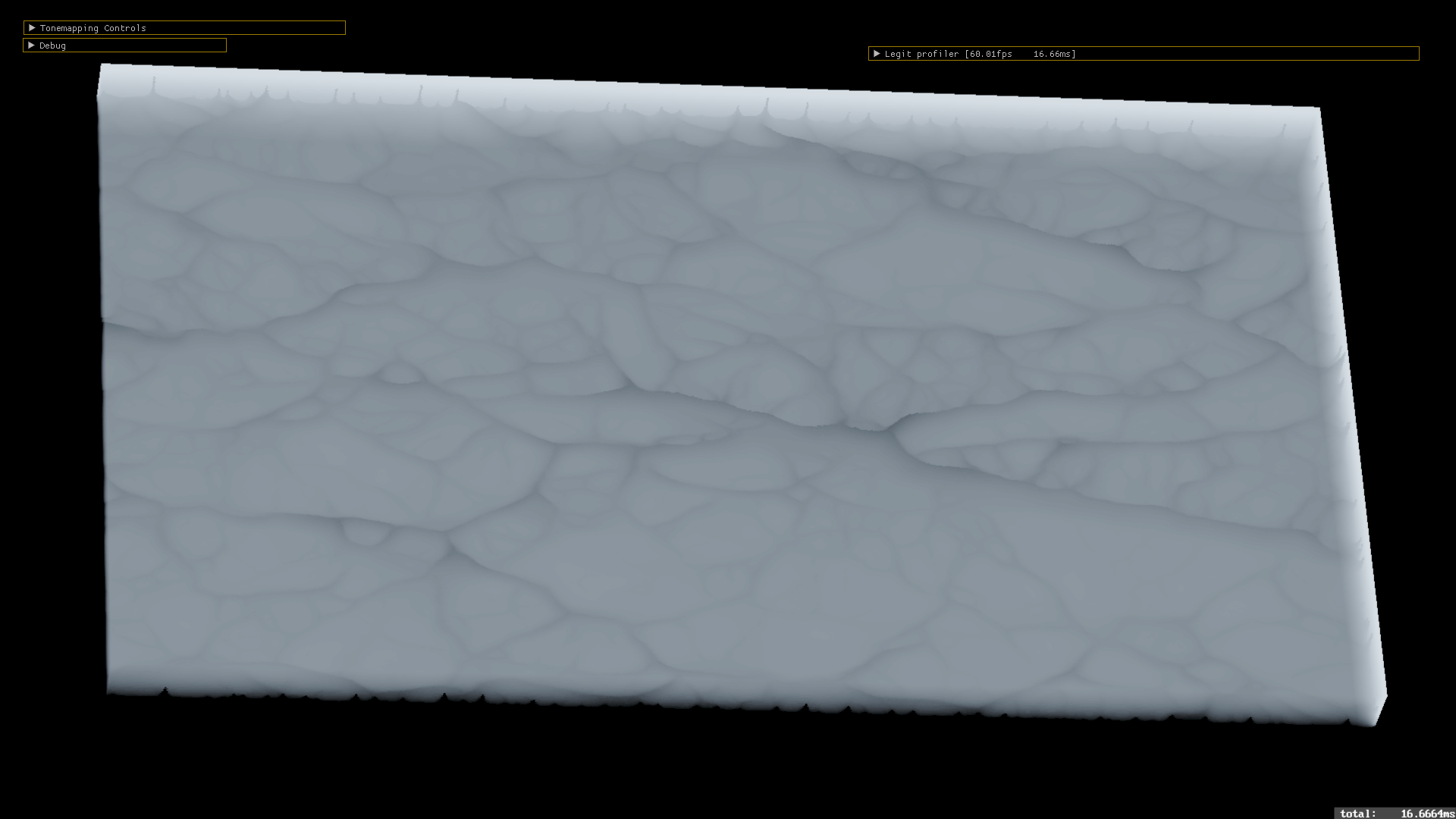

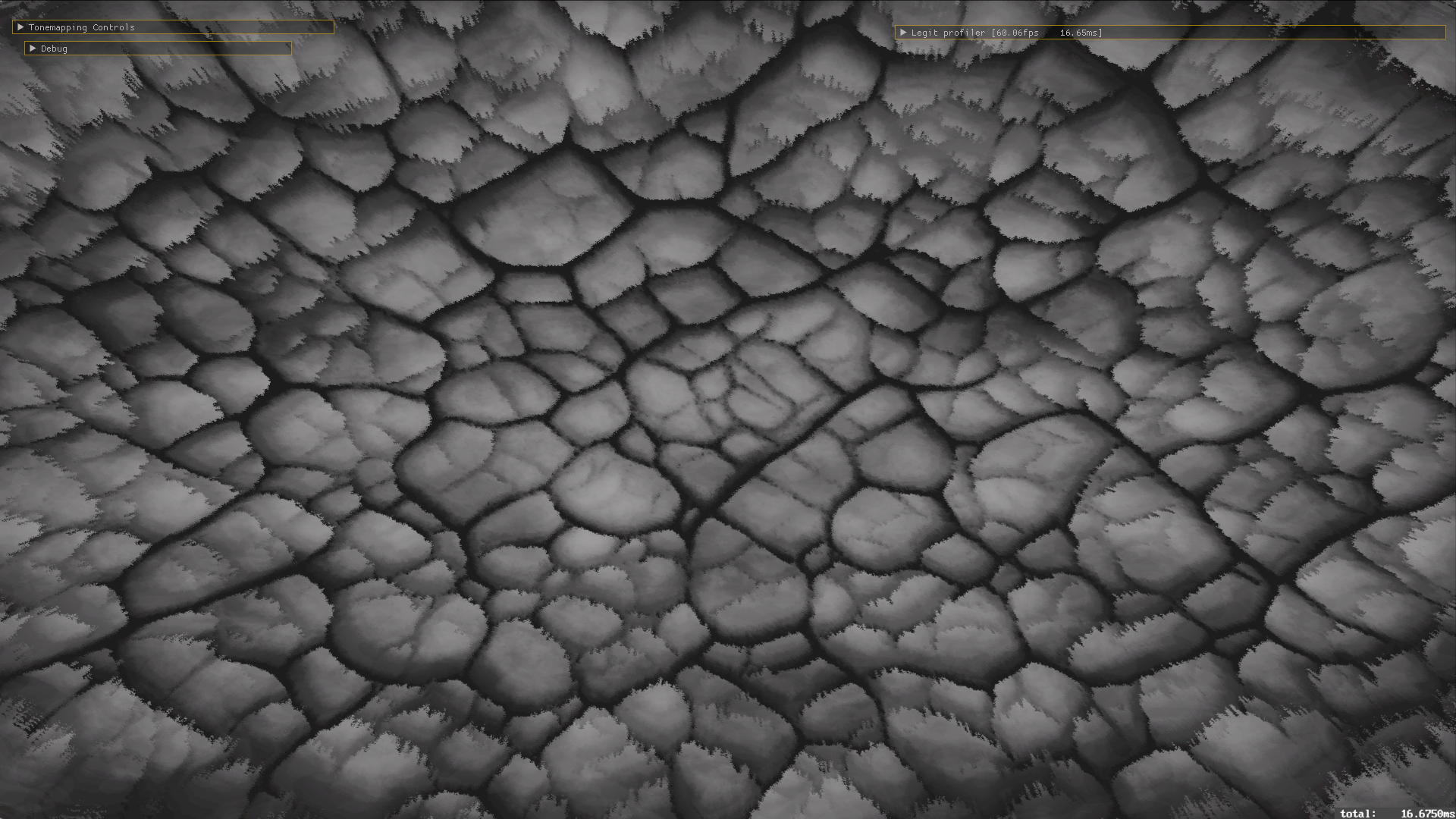

So, I got started on the next iteration. I wanted to do something with volumes, prompted by some stuff we have covered this year at GPVM, using the more conventional forward, Beer’s law based approach that shows up in a lot of recent realtime implementations. It starts with a ray-AABB test, like Voraldo, but from there the approaches diverge. I’m using a closest hit, forward approach, rather than the farthest hit, backwards approach that Voraldo uses with its custom alpha blended samples. Both use constant step size traversal, which is a limitation: it creates significant aliasing, can miss small features, and produces other artifacts. You can see what this looks like in the grey image. This is remedied by the DDA traversal used in the evolution of this renderer, which I will talk about in the article about what this eventually turned into, Aquaria.

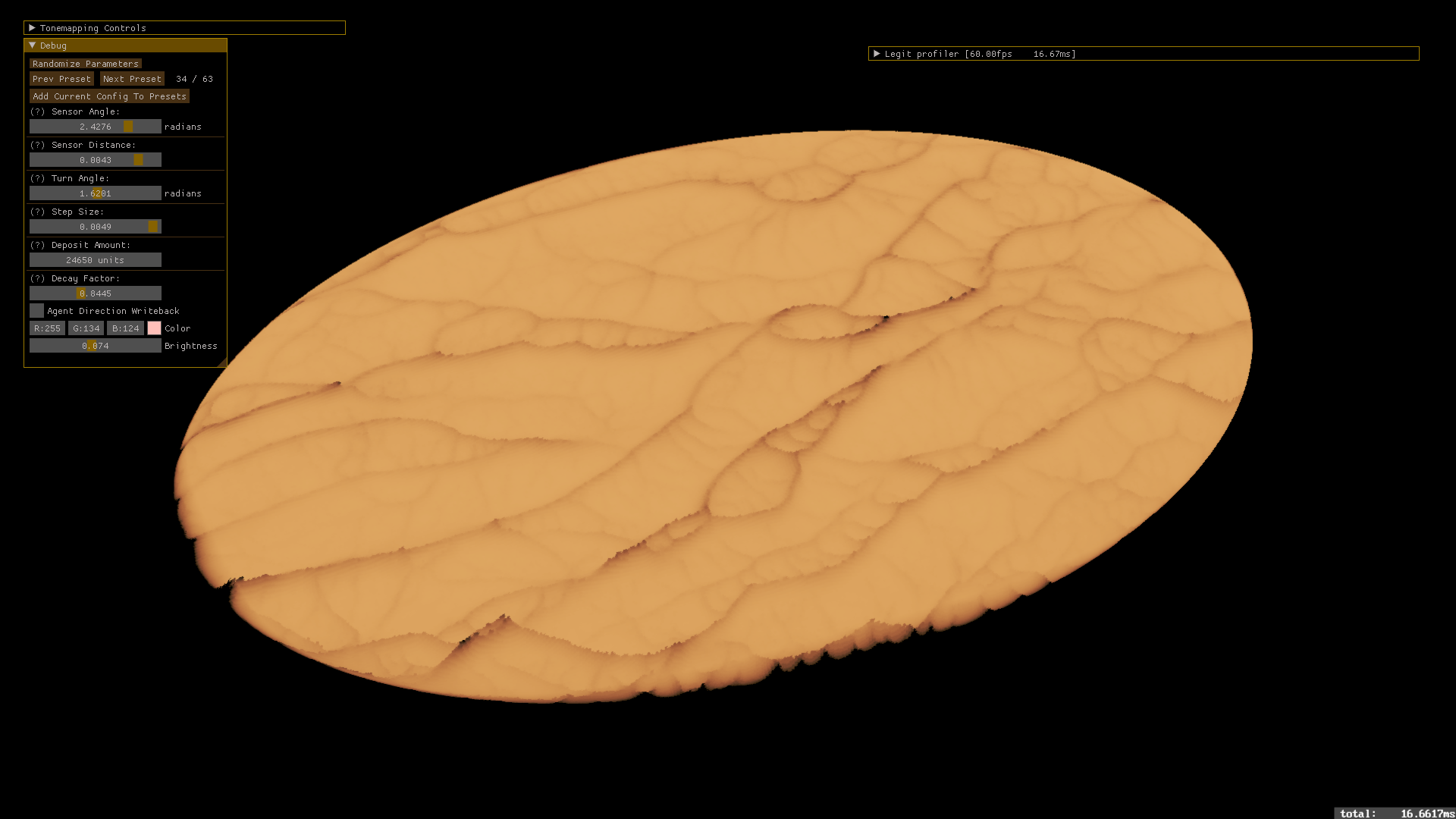

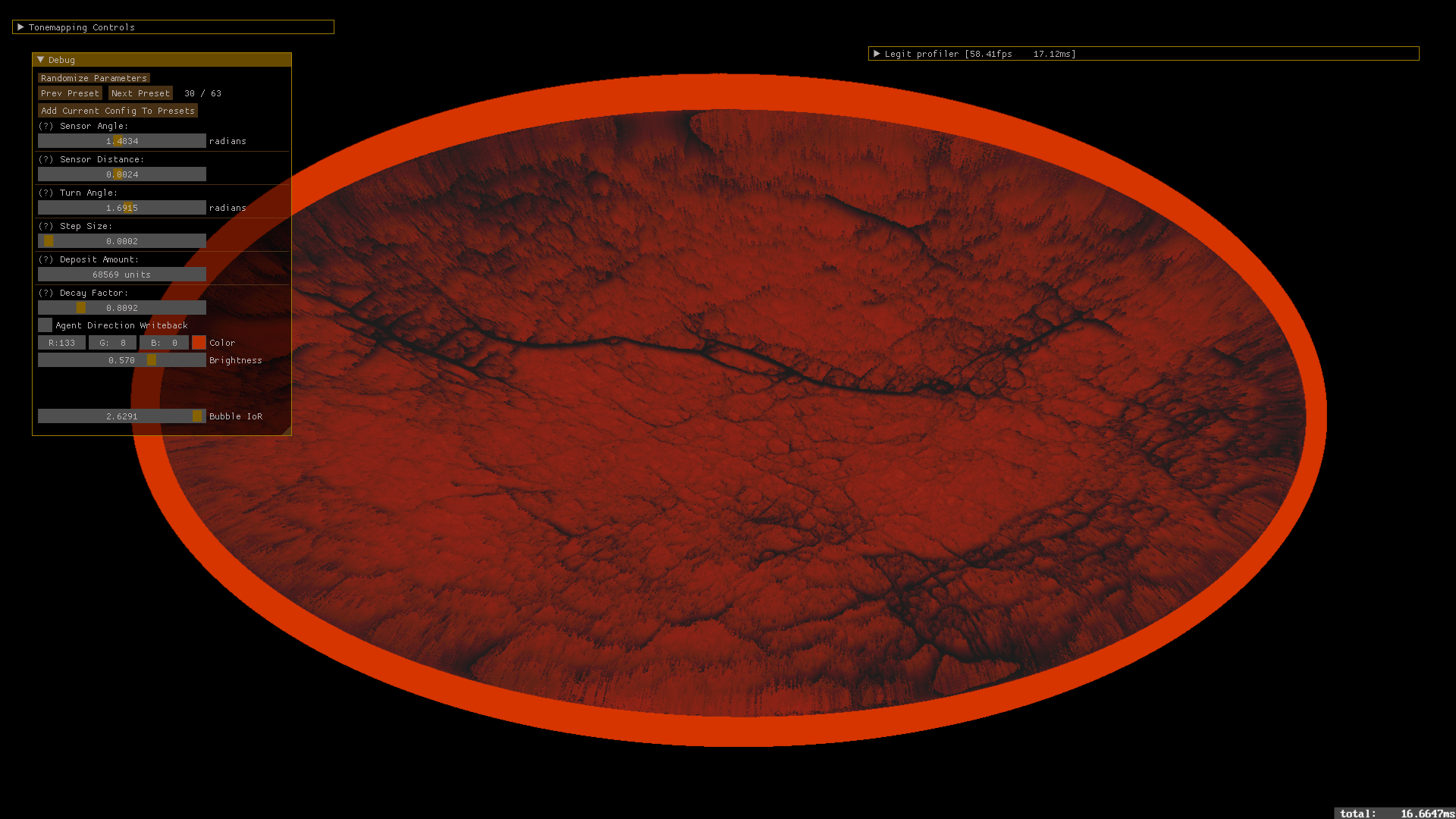

Once this constant step size traversal finds a region of the heightmap that we want to consider the surface, we color it black and add exp(-distance traveled) times some color, to model absorption via Beer’s law. I thought it was a clever way to reuse the same data: I just sampled the physarum simulation buffer, computed a value from 0 to 1, and thresholded that against the sample point’s z. This way, I was able to go directly from heightmap to this 3d visualization, with very little bandwidth used during the raymarch. There aren’t any shadows computed here; all the color comes from this exponential depth term.
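The threshold and the depth term are small enough to sketch directly. The function names, the `density` scale factor, and the choice that a point counts as solid when its z is at or below the height are all assumptions here. The text above only specifies a 0 to 1 value compared against z, and exp(-distance traveled) times a color on top of black.

```cpp
#include <array>
#include <cmath>

using Color = std::array<float, 3>;

// Heightmap threshold: height01 is the simulation value remapped to [0, 1],
// z01 is the sample point's z in the same range. Treating "at or below the
// height" as solid is an assumed convention.
inline bool belowSurface(float height01, float z01) {
    return z01 <= height01;
}

// Depth shading: start from black and add the tint scaled by exp(-distance).
// density is a hypothetical extra knob; the default of 1 matches the plain
// exp(-distance traveled) term.
inline Color shadeByDepth(float distance, const Color &tint, float density = 1.0f) {
    const float falloff = std::exp(-density * distance);
    return {{tint[0] * falloff, tint[1] * falloff, tint[2] * falloff}};
}
```

A hit right at the entry face gets the full tint, and the color falls off toward black the further the ray had to travel before reaching the surface.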

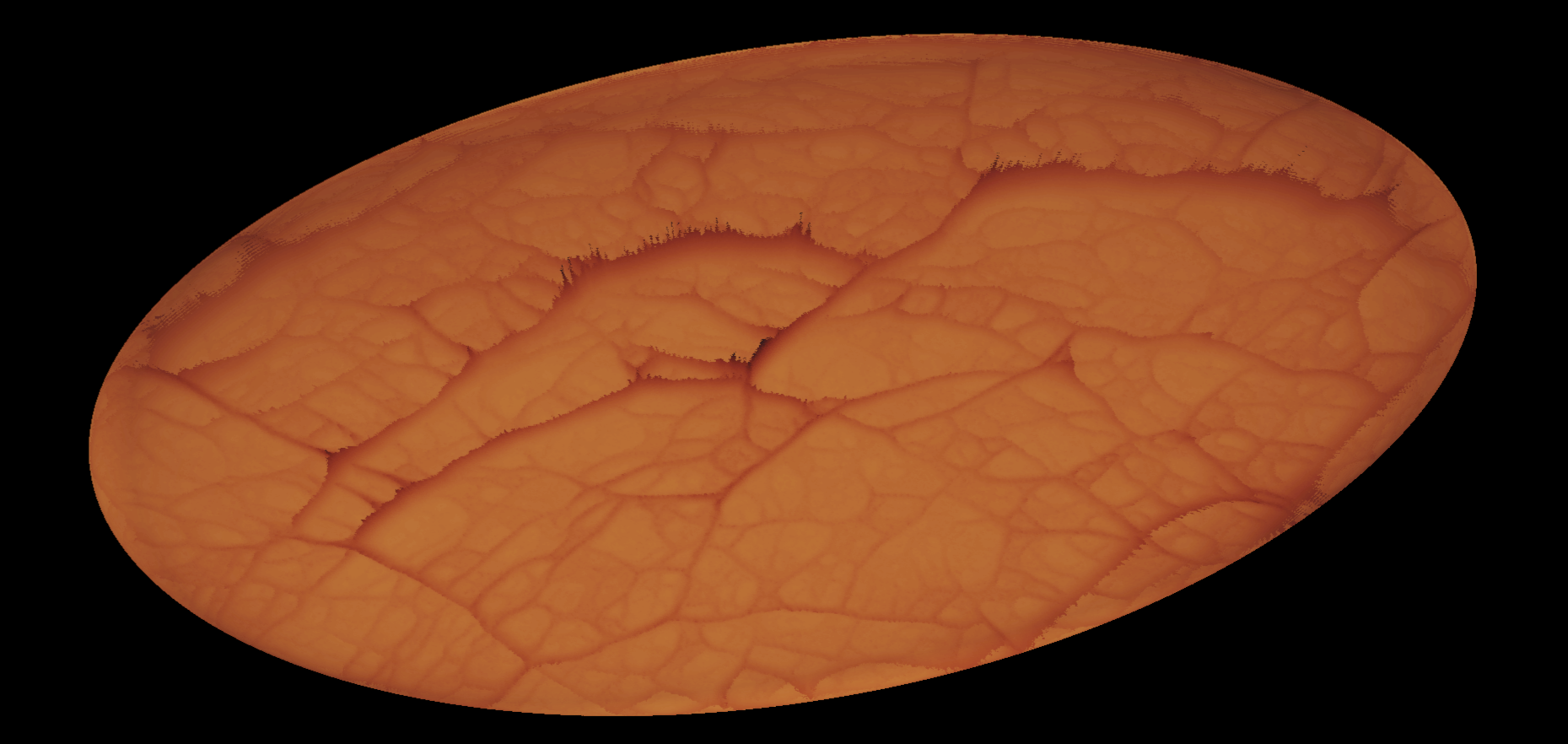

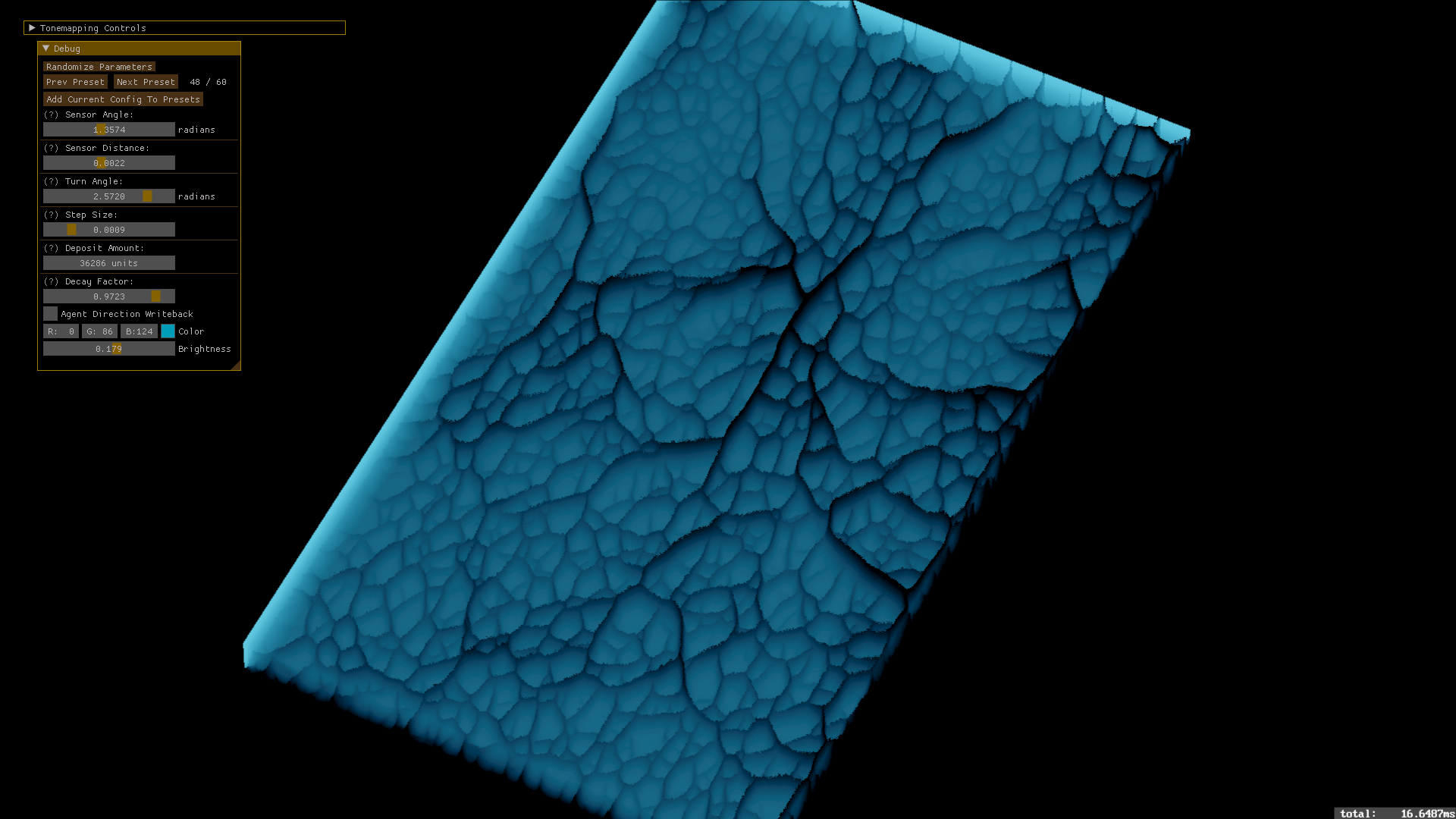

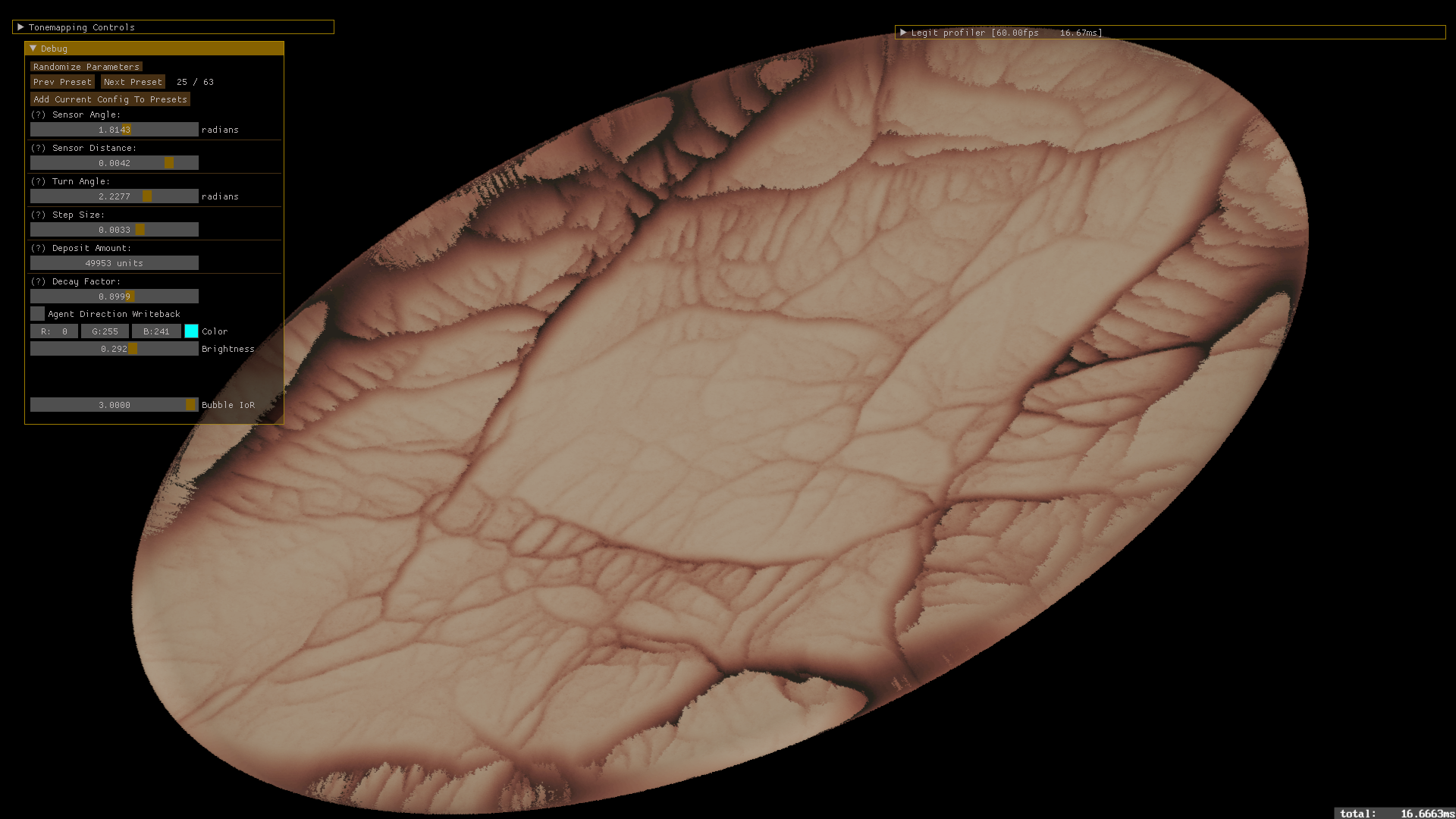

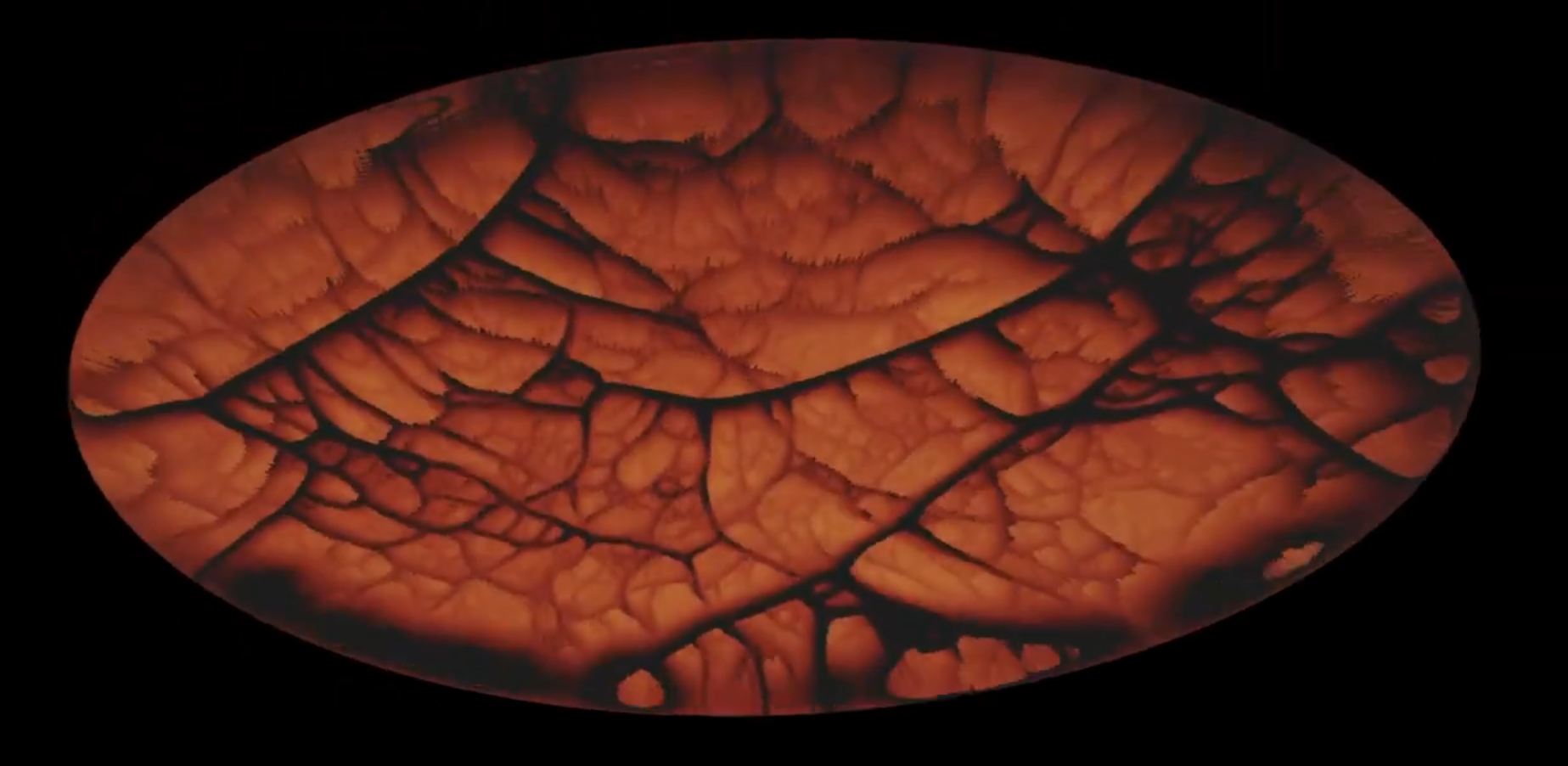

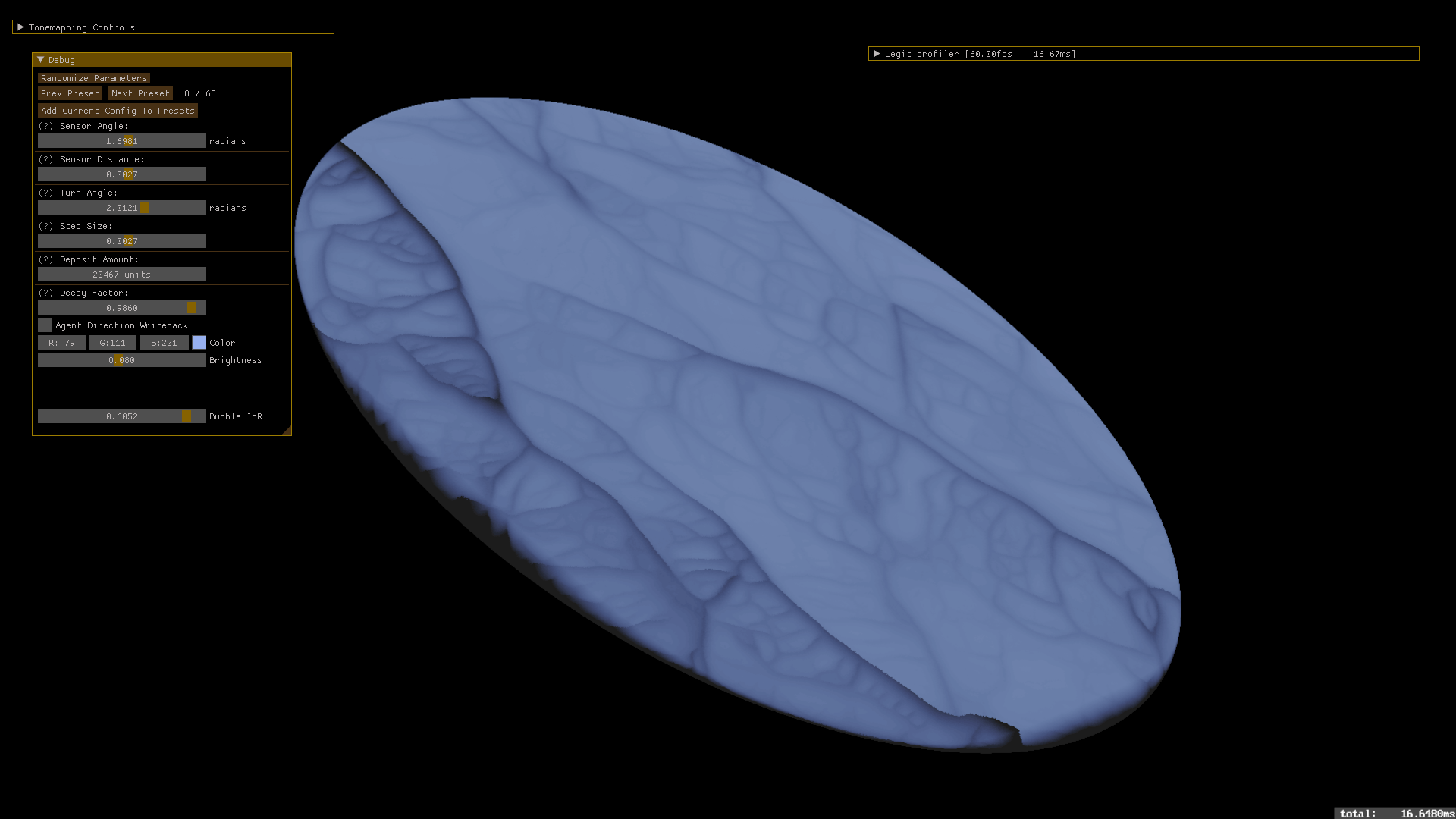

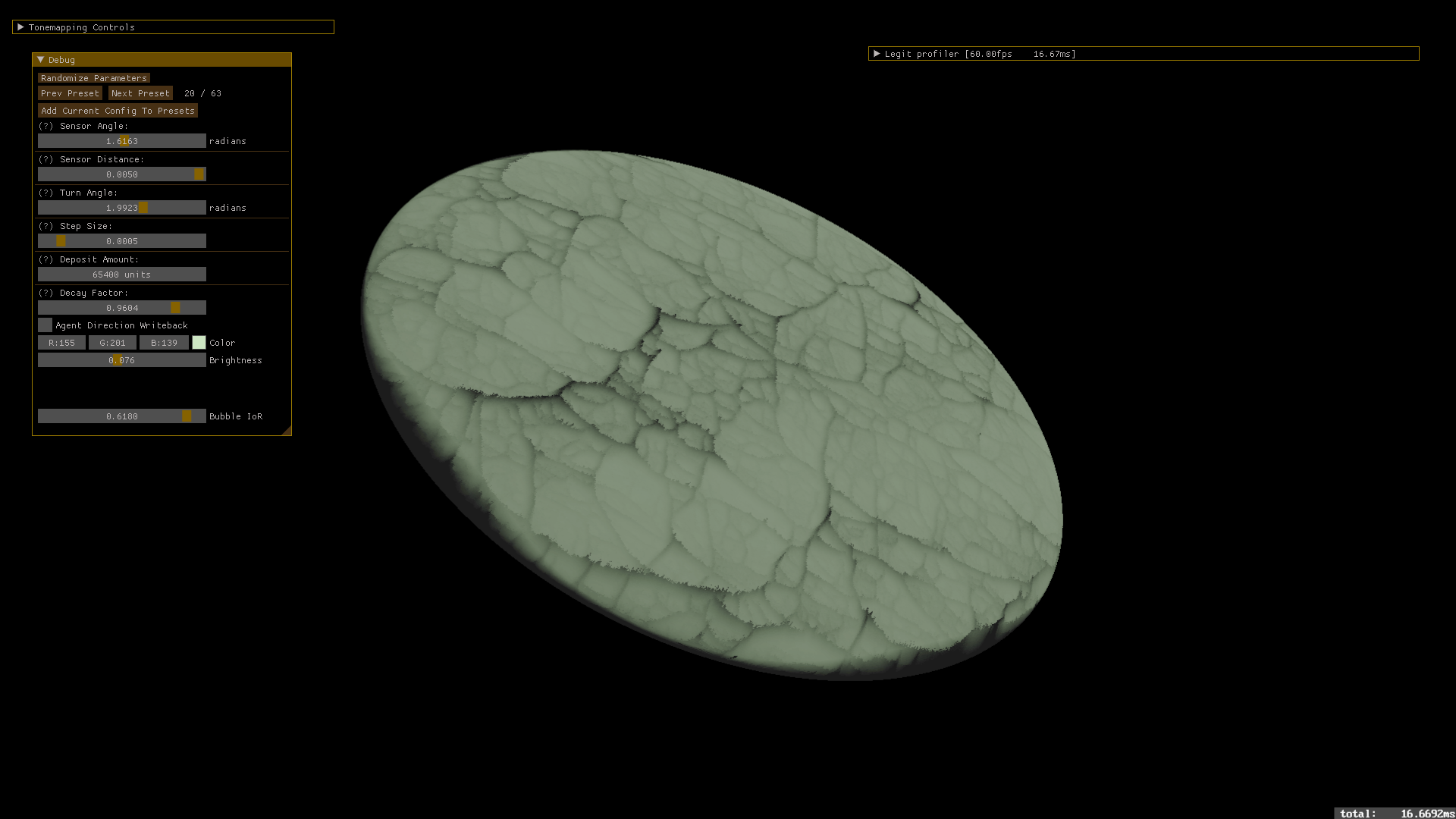

Something else I tried, and found a lot of success with, was putting this AABB test after an initial refraction through an ellipsoid sized to be just about the same size as the AABB. This creates a glass bubble around the AABB. Because the sampling process outlined above works from any direction, there are no issues: the rays are transformed by the refraction and, as far as the math is concerned, intersected as if nothing had happened. They just hit in different places and from different angles, having passed through this curved surface and refracted with some user controlled IoR. Traversal length still sets the depth. It’s really something to watch it update live: the moving patterns under the curved refractor create very diverse results, depending on the simulation parameters.
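A minimal sketch of that refraction step, assuming an origin-centered, axis-aligned ellipsoid and the same refraction formula that GLSL's `refract` uses. The function names are hypothetical, and `eta` is the ratio of indices of refraction (1 / IoR when entering the glass from air).

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<float, 3>;

inline float dot(const Vec3 &a, const Vec3 &b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

inline Vec3 normalize(const Vec3 &v) {
    const float len = std::sqrt(dot(v, v));
    return {{v[0] / len, v[1] / len, v[2] / len}};
}

// Nearest hit distance in front of the ray against an origin-centered
// ellipsoid, or -1 on a miss. Dividing by the radii turns the ellipsoid into
// a unit sphere, and t is unchanged by that scaling.
inline float intersectEllipsoid(const Vec3 &o, const Vec3 &d, const Vec3 &radii) {
    const Vec3 os = {{o[0] / radii[0], o[1] / radii[1], o[2] / radii[2]}};
    const Vec3 ds = {{d[0] / radii[0], d[1] / radii[1], d[2] / radii[2]}};
    const float a = dot(ds, ds);
    const float b = 2.0f * dot(os, ds);
    const float c = dot(os, os) - 1.0f;
    const float disc = b * b - 4.0f * a * c;
    if (disc < 0.0f) return -1.0f;
    const float t = (-b - std::sqrt(disc)) / (2.0f * a);
    return t > 0.0f ? t : -1.0f;
}

// Outward surface normal at point p on the ellipsoid: the normalized gradient
// of (x/rx)^2 + (y/ry)^2 + (z/rz)^2.
inline Vec3 ellipsoidNormal(const Vec3 &p, const Vec3 &radii) {
    return normalize({{p[0] / (radii[0] * radii[0]),
                       p[1] / (radii[1] * radii[1]),
                       p[2] / (radii[2] * radii[2])}});
}

// Snell's law refraction. I and N must be unit length, with N facing against
// I. Returns the zero vector on total internal reflection.
inline Vec3 refractDir(const Vec3 &I, const Vec3 &N, float eta) {
    const float cosI = dot(N, I);
    const float k = 1.0f - eta * eta * (1.0f - cosI * cosI);
    if (k < 0.0f) return {{0.0f, 0.0f, 0.0f}};
    const float s = eta * cosI + std::sqrt(k);
    return {{eta * I[0] - s * N[0], eta * I[1] - s * N[1], eta * I[2] - s * N[2]}};
}
```

The refracted ray, starting at the ellipsoid hit point and heading along the new direction, is then what gets handed to the AABB test, so the rest of the traversal does not need to know the refraction happened.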

Speaking of simulation parameters, that was another addition in this version: parameter presets, which were kept in a separate JSON config file and applied to the three jbDE projects that played with this 2D physarum sim. The interface let you generate random parameters, iterate through the list of presets, and add your current config to the list of presets.

Something else new with jbDE: it takes so little code to fork off a project that I can keep a snapshot of the development at any point and return to it later. I just add a copy of:

- The entry in CMakeLists.txt

- The main.cc file + whatever dependencies created for the individual project

- The shaders associated with the project

- Some small path changes

And I have a copy of that project, alongside the version that I intend to keep messing with. Because the application class inherits from the base engine class, I have a minimal amount of code to manage. Spinning up a new project “from scratch” involves doing the same thing, starting from the application called engineDemo, which basically just puts some data into the HDR accumulator buffer that feeds the VSRA postprocessing and output pipeline.

I did briefly try to make 3d physarum happen. I tried a couple of different arrangements of where the "eyes" were, but didn't have any luck. All I got were a couple of kind of marbled looking blocks, which I only ever ended up visualizing in Voraldo13. I'm not sure why, but it didn't behave like the 2d sim at all. It was probably something I was not approaching correctly, maybe a logic error somewhere. None of the simulation presets did anything interesting, and for basically every randomly generated config, I was just seeing random dispersal, with no interesting patterns.