Text Rendering

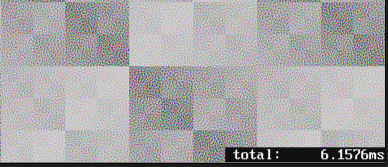

First things first, I have to give credit to the source of this idea, Jazz Mickle - they have a cool writeup on this technique that makes it very easy to understand, and I found a lot of cool things I could do to extend the basic idea. I plan to use this as a component of the engine, NQADE, eventually to create a guake-style/idTech-style in-game console: a dropdown overlay which would allow for inputting commands directly. The first usage is a small overlay in two layers, creating a ms-per-frame display in the bottom right corner, like you can see above. The layers are basically just something I was messing around with in order to render more complex graphics with these primitives: I disable depth testing and only update the pixels which fall on the font glyphs, as you will be able to see in the images on this page.

This ends up being a pretty cool little environment to play around with, and I made a little text graphics "game". It's basically just creating a landscape with FastNoise2, and then showing the player's position relative to the landscape as you move around. It doesn't really have any game systems beyond simple collision testing with the landscape. The lighting was originally going to be done with the symmetric shadowcasting algorithm, as described here, but I didn't have success with that and instead was messing around with a stochastic ray-based scheme that would maybe work with some filtering.

You can see the effect of the layering here, as there are about a half dozen distinct layers being used - there are a few layers containing the lighting and other elements of the landscape and background, then the player is its own layer, then the scroll characters and the partial fill background characters to create some basic interface elements, and then one remaining layer that has random data and updates a random 10 or so characters per frame, at 60fps. Full 8-bit per channel rgb coloration is possible for every character in every layer, as an extension of the logic that the original implementation used for the data texture.

How It Works

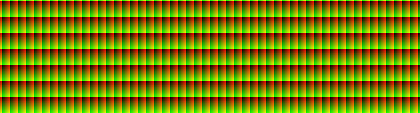

One of the important initial assumptions is that we're using a bitmapped monospaced font, where every font glyph is 6 pixels wide and 8 pixels tall. With this in mind, we create a buffer that we call the data texture, which keeps 4 values per "cell". If you imagine the screen broken up into spreadsheet-like squares, each one of these cells contains one glyph. This means that the data texture is stored at 1/6 the horizontal resolution, and 1/8 of the vertical resolution, rounded up.
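As a quick sketch of that sizing rule, the data texture dimensions can be computed with a rounded-up integer division. The names here (`GridSize`, `dataTextureSize`) are made up for illustration and are not taken from the engine.

```cpp
#include <cassert>

// Glyph size from the text: 6 pixels wide, 8 pixels tall.
constexpr int kGlyphWidth = 6;
constexpr int kGlyphHeight = 8;

struct GridSize {
    int columns; // data texture width, in cells
    int rows;    // data texture height, in cells
};

// Number of cells needed to cover a pixel region. The division rounds up,
// so a partial glyph at the right or bottom edge still gets its own cell.
GridSize dataTextureSize(int pixelWidth, int pixelHeight) {
    assert(pixelWidth >= 0 && pixelHeight >= 0);
    return {
        (pixelWidth + kGlyphWidth - 1) / kGlyphWidth,
        (pixelHeight + kGlyphHeight - 1) / kGlyphHeight
    };
}
```

For a 1920x1080 output this gives a 320x135 data texture, and 1280x720 gives 214x90, where the extra column covers the leftover 2 pixels.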

Each texel in the data texture determines how its cell of the output is drawn: r, g, b define the full 8-bpc color, and the value in the alpha channel, 0-255, is an index into the font table. This index tells the rendering shader where to sample from on the font atlas texture for that cell. This data texture is unique for each layer, which allows for different characters of different colors to be used each time a layer is composited over the last.
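One way to picture this on the CPU side is a tightly packed 4-byte struct per cell, kept in a row-major array per layer. This is only a sketch under that assumption; `Cell` and `Layer` are hypothetical names, not the engine's actual types.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One texel of the data texture: 8-bit color plus a glyph index in alpha.
struct Cell {
    std::uint8_t r, g, b; // glyph color, 8 bits per channel
    std::uint8_t glyph;   // lives in the alpha channel: index into the font table
};
static_assert(sizeof(Cell) == 4, "Cell should match a packed RGBA8 texel");

// CPU-side copy of one layer's data texture (hypothetical helper).
struct Layer {
    int columns;
    int rows;
    std::vector<Cell> cells; // row-major, columns * rows entries

    Layer(int c, int r)
        : columns(c), rows(r),
          cells(static_cast<std::size_t>(c) * static_cast<std::size_t>(r),
                Cell{0, 0, 0, 0}) {}

    // Bounds-checked access to the cell at column x, row y.
    Cell& at(int x, int y) {
        assert(x >= 0 && x < columns && y >= 0 && y < rows);
        return cells[static_cast<std::size_t>(y) * columns + x];
    }
};
```

Something like `layer.at(3, 1) = Cell{255, 128, 0, 65};` would then put an orange glyph 65 in the fourth column of the second row. Because the struct is four bytes, `cells.data()` can be handed to whatever RGBA8 texture upload call the renderer uses.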

Contained in each of the cells is a small UV ramp - note that there is a 1:1 mapping between this image and the one previous - you can see how each cell has a distinct ramp so it can sample the correct texel on the corresponding font glyph. The UV value gives a sampling offset from the base point in the font atlas, which is computed from the glyph index in the alpha channel of the data texture. You can see here how we get the mapping from cells to texels in the data texture, and why the data texture has so many fewer texels than the texture that we use to show the output. This has a number of benefits, perhaps chief among them that it is incredibly cheap to update the low resolution data texture with new data from the CPU, since it is not more than a couple hundred texels on either axis.
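The lookup can be mirrored on the CPU with integer math, which makes the mapping easier to check by hand. This sketch assumes the atlas stores its 256 glyphs in a 16x16 grid in index order, which is a guess about the layout rather than something stated above; a shader would do the same thing in normalized UVs. All function names are illustrative.

```cpp
#include <cassert>

constexpr int kGlyphWidth = 6;
constexpr int kGlyphHeight = 8;
constexpr int kAtlasColumns = 16; // assumption: 256 glyphs laid out 16 per row

struct Int2 { int x, y; };

// Which data texture cell covers this output pixel.
Int2 cellForPixel(Int2 pixel) {
    return { pixel.x / kGlyphWidth, pixel.y / kGlyphHeight };
}

// Position inside the cell: the integer equivalent of the per-cell UV ramp.
Int2 offsetInCell(Int2 pixel) {
    return { pixel.x % kGlyphWidth, pixel.y % kGlyphHeight };
}

// Atlas texel to sample: the glyph's base point plus the in-cell offset.
Int2 atlasTexel(int glyphIndex, Int2 offset) {
    assert(glyphIndex >= 0 && glyphIndex < 256);
    const Int2 base = { (glyphIndex % kAtlasColumns) * kGlyphWidth,
                        (glyphIndex / kAtlasColumns) * kGlyphHeight };
    return { base.x + offset.x, base.y + offset.y };
}
```

For example, output pixel (13, 17) falls in cell (2, 2) at offset (1, 1). If that cell holds glyph 65, the base point under the assumed layout is (6, 32), so the atlas texel sampled is (7, 33).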

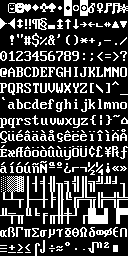

And as you can see here, we have the full 0-255 unsigned 8-bit extended ASCII table in an atlas texture that just lives on the GPU. This is the actual atlas texture that the program uses. You'll see that it includes many more glyphs than the base ASCII set, most notably the layout characters to represent borders and dividers in text-based user interfaces, while still retaining easy compatibility with C++ strings by using the unsigned value of a string's chars. This makes it very straightforward to use standard I/O functions to create a layer which would print actual, meaningful strings into the buffer. An example of this is the timing overlay in the engine I showed earlier.
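A minimal sketch of printing a string into a layer might look like the following. `writeString` and the `Cell` layout are assumptions made for illustration. One detail worth care: on platforms where `char` is signed, codes above 127 come out negative, so the sketch casts through `unsigned char` to get a 0-255 index.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

struct Cell { std::uint8_t r, g, b, glyph; };

// Writes text into a row-major cell buffer starting at column x of row y,
// clipping at the right edge of the row. Returns how many cells were written.
int writeString(std::vector<Cell>& cells, int columns, int x, int y,
                const std::string& text,
                std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    assert(columns > 0 && x >= 0 && y >= 0);
    assert(static_cast<std::size_t>(y + 1) * columns <= cells.size());
    int written = 0;
    for (char c : text) {
        if (x + written >= columns) break; // ran off the end of the row
        // Go through unsigned char so extended codes map to 128-255.
        const auto glyph = static_cast<std::uint8_t>(static_cast<unsigned char>(c));
        cells[static_cast<std::size_t>(y) * columns + x + written] = Cell{r, g, b, glyph};
        ++written;
    }
    return written;
}
```

A timing readout could then be formatted with `std::snprintf` or a string stream and passed straight in, for example `writeString(cells, columns, 0, 0, "16.67 ms", 255, 255, 255)`.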

You can see here a real example, a frame breakdown for the textmode game I showed earlier. It goes through a process of compositing 5 total layers to produce the output. For each step, the data texture is shown at 1:1 scaling, above the resulting output texture after that pass completes. Note that the alpha channel on the data texture contains the character ID, so it does have varying opacity. Note also that the size of the buffer and the data texture do not need to match - buffers can be whatever size and offset on the screen makes sense for the situation. You can see the relative resolution of the data texture to the effect onscreen, since each pixel becomes a 6x8 pixel glyph - hence also why there is the squish to the shape of the drawn buffer, since each cell is displayed at a 6:8 (roughly 1:1.33) ratio.
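To make the compositing rule concrete, here is a CPU reference sketch of one pass. It is a reading of the behavior described on this page (only pixels that land on the glyph get written, everything else shows earlier layers through), not the engine's shader. The 16x16 atlas grid, the one-byte-per-pixel `atlasMask`, and all type names are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr int kGlyphWidth = 6, kGlyphHeight = 8;
constexpr int kAtlasColumns = 16, kAtlasRows = 16; // assumed atlas layout

struct Cell  { std::uint8_t r, g, b, glyph; };
struct Color { std::uint8_t r, g, b; };

struct Layer {
    int columns, rows;       // size in cells
    int offsetX, offsetY;    // top-left corner on screen, in pixels
    std::vector<Cell> cells; // row-major
};

struct Image {
    int width, height;
    std::vector<Color> pixels; // row-major
};

// Draws one layer over the existing image. atlasMask holds one byte per
// atlas pixel, nonzero where the glyph is "on".
void compositeLayer(Image& out, const Layer& layer,
                    const std::vector<std::uint8_t>& atlasMask) {
    const int atlasWidth = kAtlasColumns * kGlyphWidth;
    assert(atlasMask.size() ==
           static_cast<std::size_t>(atlasWidth) * kAtlasRows * kGlyphHeight);
    assert(layer.cells.size() ==
           static_cast<std::size_t>(layer.columns) * layer.rows);
    for (int py = 0; py < layer.rows * kGlyphHeight; ++py) {
        for (int px = 0; px < layer.columns * kGlyphWidth; ++px) {
            const int sx = layer.offsetX + px, sy = layer.offsetY + py;
            if (sx < 0 || sy < 0 || sx >= out.width || sy >= out.height) continue;
            const Cell& cell = layer.cells[static_cast<std::size_t>(
                (py / kGlyphHeight) * layer.columns + px / kGlyphWidth)];
            const int ax = (cell.glyph % kAtlasColumns) * kGlyphWidth + px % kGlyphWidth;
            const int ay = (cell.glyph / kAtlasColumns) * kGlyphHeight + py % kGlyphHeight;
            // Only glyph pixels overwrite; the rest keeps the earlier layers.
            if (atlasMask[static_cast<std::size_t>(ay * atlasWidth + ax)] != 0) {
                out.pixels[static_cast<std::size_t>(sy * out.width + sx)] =
                    Color{cell.r, cell.g, cell.b};
            }
        }
    }
}
```

Calling this once per layer, in order, reproduces the step-by-step build-up shown in the frame breakdown, and the per-layer offset is what lets a small buffer update only part of the screen.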

Step 1

Starting with nothing in the buffer, we first add the light as a base layer.

Step 2

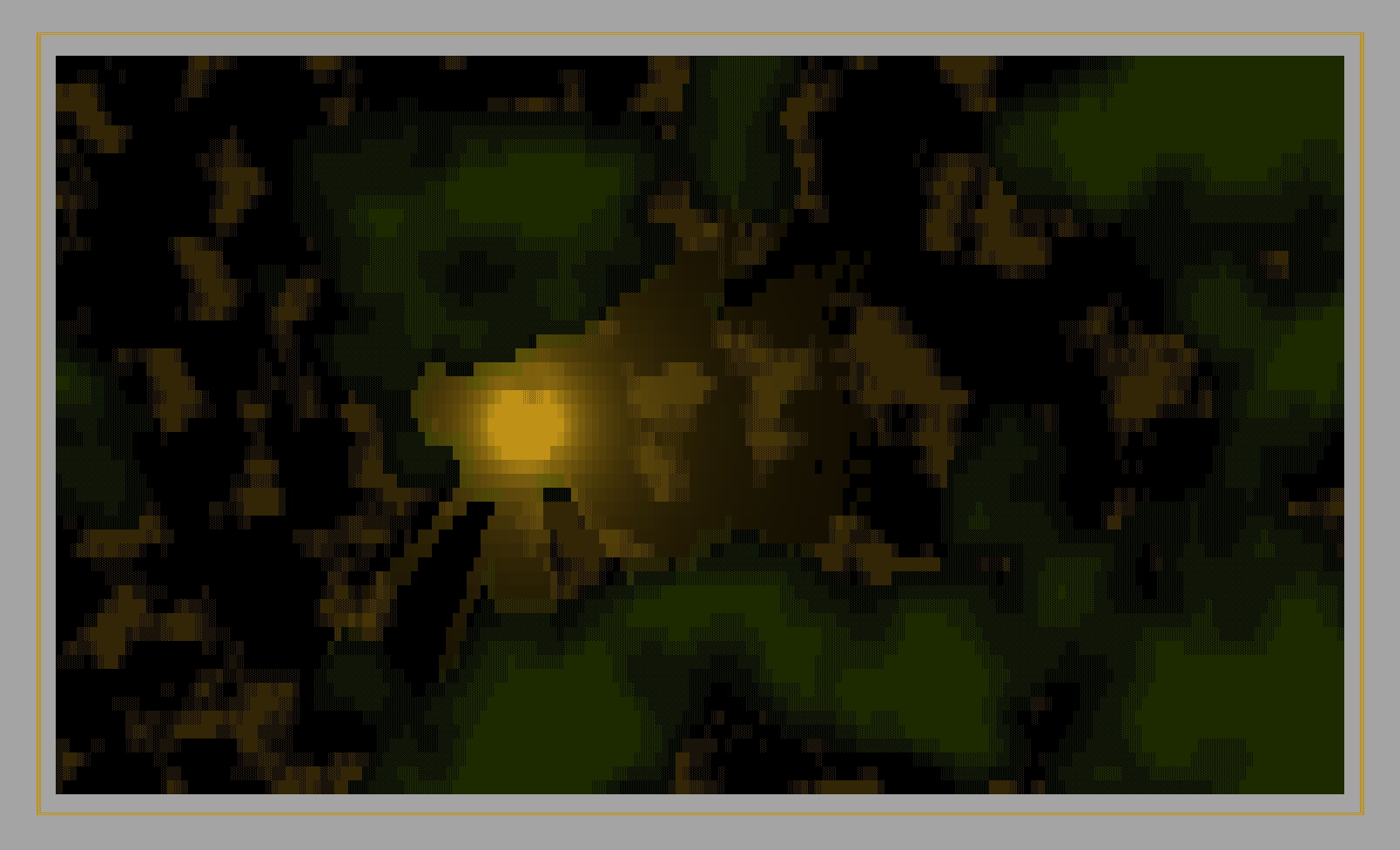

Over top of that goes the landscape and related elements, which also reuses the data from the previous layer, to accentuate the result on the landscape objects. The higher frequency noise was something I was going to mess with to do lower objects, which could have shorter shadows, but I did not get very far with that. Maybe I'll come back to this someday. Note that this layer now begins to interact with the data already in the buffer, since it uses partial fill characters from the atlas. Note also that this layer draws the double gold border characters surrounding the display.

Step 3

This is the layer that contains the character sprite. I was thinking this could also contain other characters and interactable objects in the world, but right now it just has the one character drawn at the center. The data texture is hard to see, since it's a single white pixel with a very low alpha value ( 2 / 255 ), located towards the center ( offset a bit to the left so that the menus would fit and everything would feel more visually centered ).

Step 4

This layer contains the drawing of the scroll - this is the sort of background for what might be an in-game console, if you imagine something like what RuneScape or WoW do to show world events. This again makes use of partial fill characters to show bits of earlier layers through. I also considered a per-layer opacity for things like this, where you could pretty easily make passes like this have some more complex interaction with the earlier layers via alpha blending. You can see here the benefit of only drawing the characters that you need, using a smaller texture at an offset location, since this is only updating a portion of the screen on the right hand side.

Step 5

This is the layer with the randomized data to fill that scroll area. This can be made to display meaningful strings, but here it is just randomizing all 4 channels, so each cell gets both a random color and a random character ID each time it is updated.
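A rough sketch of that kind of per-frame update, touching a handful of randomly chosen cells and randomizing all four channels, could look like this. `randomizeCells` is a hypothetical helper and the choice of `std::mt19937` is an assumption; the actual generator used is not stated here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

struct Cell { std::uint8_t r, g, b, glyph; };

// Overwrites `count` randomly chosen cells with a random color and a random
// glyph index. The same cell may be picked more than once in a call.
void randomizeCells(std::vector<Cell>& cells, int count, std::mt19937& rng) {
    assert(!cells.empty() && count >= 0);
    std::uniform_int_distribution<std::size_t> pickCell(0, cells.size() - 1);
    // Draw as int and narrow afterwards: 8-bit types are not permitted as
    // the result type of std::uniform_int_distribution.
    std::uniform_int_distribution<int> pickByte(0, 255);
    for (int i = 0; i < count; ++i) {
        Cell& c = cells[pickCell(rng)];
        c.r = static_cast<std::uint8_t>(pickByte(rng));
        c.g = static_cast<std::uint8_t>(pickByte(rng));
        c.b = static_cast<std::uint8_t>(pickByte(rng));
        c.glyph = static_cast<std::uint8_t>(pickByte(rng));
    }
}
```

Calling `randomizeCells(cells, 10, rng)` once per frame and then re-uploading the layer's data texture would give the flickering fill in the scroll area.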

Future Directions

So you can kind of start to see where you can go with this as a rendering method. It's very fast, and scales to many layers easily, even though you have to upload the data texture for each layer, each frame. I think there's a lot of potential for a system like this as a primary graphics display. This textmode game was a cool little thing to mess around with, and the space game idea I've been kicking around might be able to make use of this concept for a landing-on-a-planet sort of gameplay. I think that given how easy it is to produce compelling images with a couple layers of this stuff, this could really be something interesting. Something like that could also randomize the noise generation and color palette at each planet, to create a unique character for each place you would land.

In any event, it is being used for that timing display on the engine for the foreseeable future, and I am looking for other applications for it. I think some simple graphing utility would be cool to show CPU/GPU usage over time or other program stats at runtime. Compatibility with existing string handling is big, in order to nicely format the output for these kinds of things. You could even do more complex operations like raymarching or other kinds of 3d rendering, writing a result into the data texture on the GPU, if you wanted to scale this up and do more interesting things with it.