Vertexture2: Point Sprite Sphere Impostors

Background

jbDE has evolved somewhat from where it started. Initially, it was the same copy-the-template-and-start-writing-your-project type of affair as NQADE. I started noticing a recurring pattern: I would copy the template, begin work on a project, and, through whatever sequence of events, move on to other projects. As the template changed, it became increasingly difficult to maintain the older projects, since each had effectively started from a past snapshot of the template. Bringing newer template features into those projects was not something I could do by just applying a git patch. It often became quite involved, since so much of the template had been hacked up to suit the particular project using it.

So I got thinking about solutions. What would make it easier to maintain these projects, so that I can come back to them when implementing new engine features and extend the existing code to make use of them? I have been brainstorming this for the past several months, and have finally been able to put together a proof of concept. The new structure defines a base engine class, with utilities for window creation, ImGui setup, etc., all the things the template had. But now, instead of the template being the whole framework for the application, I can write an individual main function for each child application. Each one instantiates a class that inherits from the base engine class, provides a place to parse command line args, and then enters the main loop. The high-level structure of the engine does not really change, only where the main loop and related functions are defined. The pieces that are fairly uniform across all applications live in the base class, to reduce noise and overall redundancy, and the application-specific code goes in the child class.
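To make the base/child split concrete, here is a minimal C++ sketch. Every name in it (engineBase, OnInit, OnUpdate, OnRender, demoApp, frameLimit) is hypothetical rather than jbDE's actual API, and window creation and ImGui setup are stubbed out. The only point it illustrates is that the main loop lives in the base class while each child overrides a few hooks.

```cpp
// Hypothetical sketch of the base/child split; names are illustrative only.
class engineBase {
public:
	virtual ~engineBase () = default;

	// Shared setup every child gets for free (window, ImGui, etc. stubbed out).
	void Init () {
		// CreateWindowAndContext(); InitImGui(); ... (hypothetical, omitted)
		OnInit();
	}

	// The main loop lives in the base class; children only fill in the hooks.
	void MainLoop () {
		while ( !quitRequested ) {
			OnUpdate();
			OnRender();
			framesRendered++;
		}
	}

	int framesRendered = 0;
	bool quitRequested = false;

protected:
	// Application-specific hooks, overridden by each child application.
	virtual void OnInit () {}
	virtual void OnUpdate () {}
	virtual void OnRender () {}
};

// Hypothetical child application: only project-specific code lives here.
class demoApp : public engineBase {
public:
	// A frame limit stands in for the user closing the window.
	explicit demoApp ( int frameLimit ) : frameLimit( frameLimit ) {}

	bool initialized = false;
	int updates = 0;

protected:
	void OnInit () override { initialized = true; }
	void OnUpdate () override {
		if ( ++updates >= frameLimit ) quitRequested = true;
	}

private:
	int frameLimit;
};
```

Each executable's main would then parse argv, construct its own child class, call Init(), and call MainLoop(). Adding a new application means adding a new child class and a new main, without touching the shared loop.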

This actually went very smoothly. Adding multiple targets to the CMake config and the build script keeps the build process identical to use; the only difference is that, instead of creating one exe in the bin folder, it now creates several named executables, one for each child application built as part of jbDE. I have implemented three such applications so far: the existing engine demo, which just puts some stuff on the screen to show signs of life; a reimplementation of SoftBodies; and now this project, Vertexture2. Because they share most of the dependencies, each additional application adds only a few seconds to the total build time, and each one does not need to be updated unless a change was made in its particular bit of child application code.

All this is to say, I will be able to easily return to any one of these projects at any time. Because the base engine code is automatically kept in sync, I can use any new piece of engine functionality in any of the child applications. On the face of it, this may not sound like something that scales well, but having now implemented several of them, I believe it will extend to supporting at least dozens of projects. For my purposes, the free maintenance is much too large a benefit to ignore.

Vertexture2

All the above is just to give you a bit of context for how this project was built, and why I selected it as one of the first projects to be implemented as a child application. Vertexture was the first major interactive computer graphics application I implemented, during my CS4250 Intro to Interactive Computer Graphics course. Before beginning this project, I recorded several videos going through and playing with the existing functionality in Vertexture, so that I have something to refer to without having to get a GLUT application running on new machines. The project did lose focus a little bit, in favor of experimenting with what I thought was a new technique, but which I soon discovered had previously been explored by some people at Nvidia for screen-space rendering of fluids.

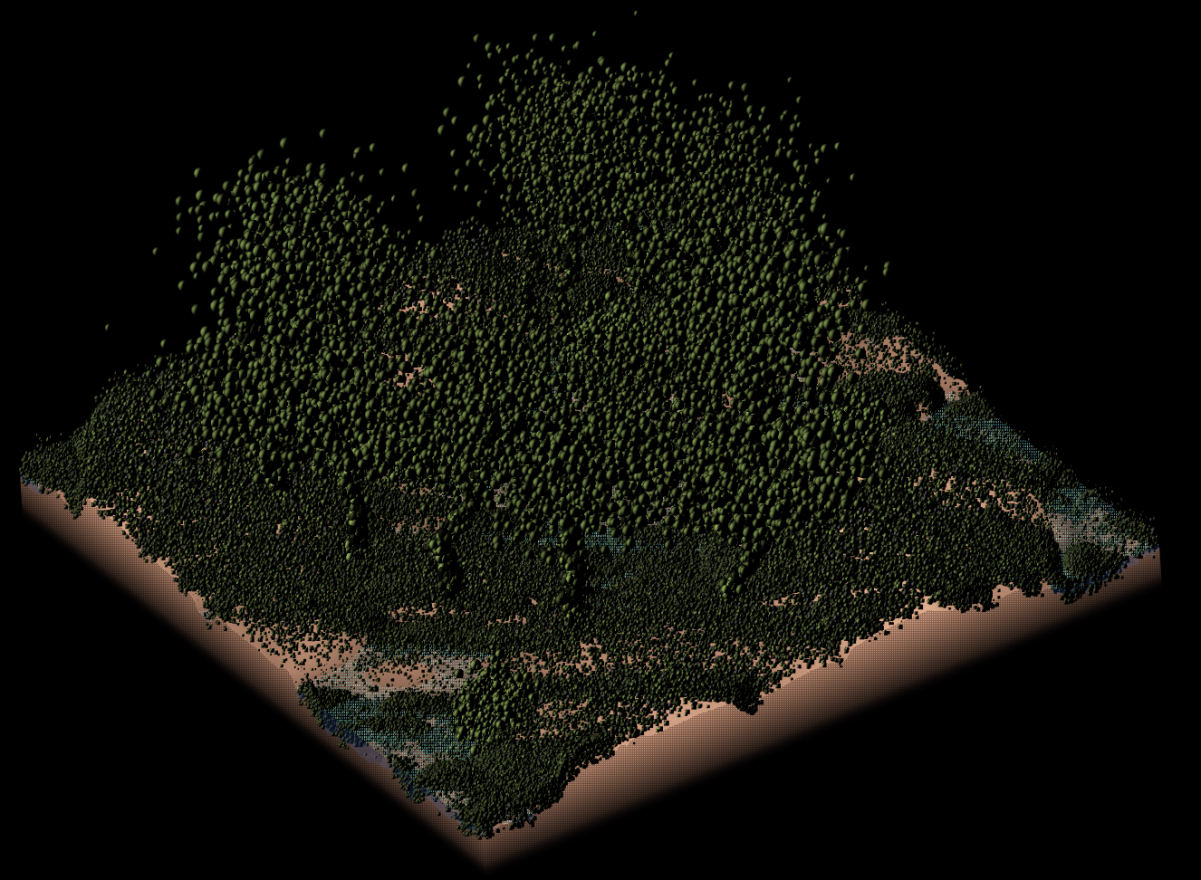

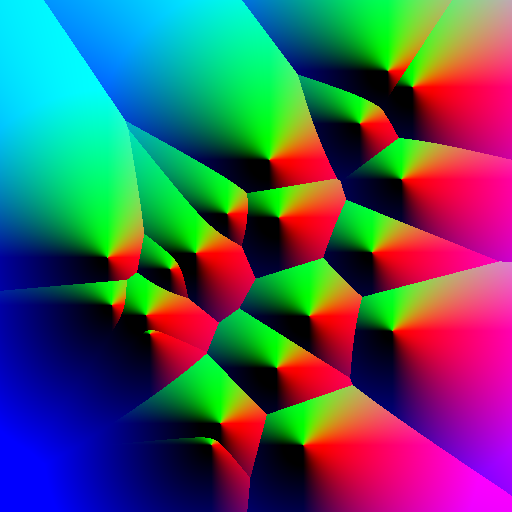

The core concept has not changed from the original implementation in Vertexture. By mapping the above image onto an API point primitive, I am able to discard the black parts and end up with a circle. By default, however, a point is drawn with a single uniform depth value for the entire primitive. This project began to explore the idea of manipulating the depth value written by the API, so that these little sprites could in fact act as spheres: correctly z-testing and occluding one another, having correct normals, and shading as if they were high-resolution sphere primitives.
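Vertexture gets the circle mask and the height from a texture. As a minimal sketch, assuming that texture stores the height of a unit hemisphere, the same values can be computed analytically from the point coordinate. Here (u, v) plays the role of gl_PointCoord, and the std::nullopt branch corresponds to a discard in the fragment shader.

```cpp
#include <cmath>
#include <optional>

// Analytic stand-in for the sphere sprite texture (assumed to hold a unit
// hemisphere's height). (u, v) mimics gl_PointCoord, each in [0, 1].
// Returns std::nullopt where the fragment shader would discard (the black
// corners); otherwise the normalized height, 1 at the center, 0 at the rim.
std::optional<float> SpriteHeight ( float u, float v ) {
	const float x = u * 2.0f - 1.0f; // remap 0..1 -> -1..1
	const float y = v * 2.0f - 1.0f;
	const float r2 = x * x + y * y;
	if ( r2 > 1.0f ) return std::nullopt; // outside the circle: discard
	return std::sqrt( 1.0f - r2 );
}
```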

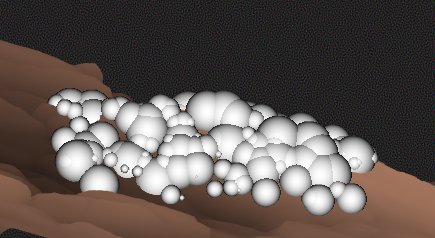

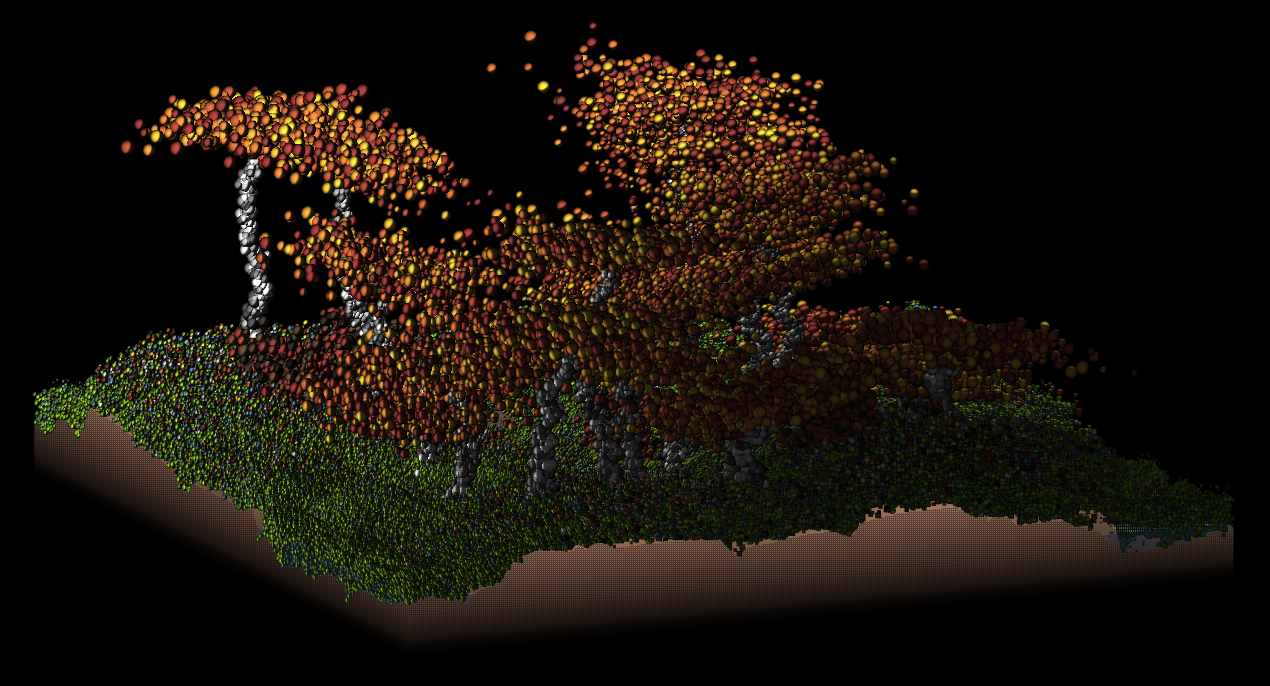

Shown here is the first example I had of correct depths being written. I will go ahead and say: orthographic projection lets you get away with murder. It was completely trivial to implement, knowing three pieces of information. First, and this was already being read anyways, is the heightmap read from the point sprite. This gives a value in the range 0..1, indicating the normalized depth for that texel on the (hemi)sphere. Second, because the UV ramp provided by gl_PointCoord is also in the range 0..1 on each axis, we end up with a very easy-to-calculate offset for the depth. Third, knowing the width and aspect ratio of the screen, we can scale this depth offset using the per-primitive point size (with GL_PROGRAM_POINT_SIZE enabled, this can be a vertex attribute or something stored in an SSBO, used to set gl_PointSize in the vertex shader). Bringing these together, you can write to gl_FragDepth, offsetting the value coming in from gl_FragCoord.z.
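Here is a minimal sketch of that depth correction, assuming a glOrtho-style projection and the default glDepthRange of 0..1. The parameter names (orthoWidth, viewportWidthPx, zNear, zFar) are hypothetical, and this version derives the scale from the orthographic view width rather than the screen aspect ratio. It relies on one fact: under orthographic projection, window-space depth is linear in eye-space z, so a world-space offset becomes a depth offset with a single division.

```cpp
// Minimal sketch of the orthographic depth correction, assuming:
//  - a glOrtho-style projection spanning orthoWidth world units horizontally
//    across viewportWidthPx pixels, with near/far planes zNear/zFar,
//  - the default glDepthRange( 0, 1 ),
//  - gl_PointSize is the sphere's diameter in pixels.
// All parameter names are hypothetical.
float CorrectedDepth ( float fragCoordZ, float height, float pointSizePx,
		float orthoWidth, float viewportWidthPx, float zNear, float zFar ) {
	// World-space radius covered by the sprite.
	const float worldUnitsPerPixel = orthoWidth / viewportWidthPx;
	const float radiusWorld = 0.5f * pointSizePx * worldUnitsPerPixel;
	// Orthographic window depth is linear in eye-space z: moving one world
	// unit toward the camera lowers the depth by 1 / ( zFar - zNear ).
	const float depthOffset = height * radiusWorld / ( zFar - zNear );
	return fragCoordZ - depthOffset; // value to write to gl_FragDepth
}
```

In the fragment shader, height would come from the sprite texture at gl_PointCoord, fragCoordZ from gl_FragCoord.z, and the result would go to gl_FragDepth.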

Something I thought was quite interesting: this also extends pretty trivially to correct normals, so I can shade each point sprite sphere impostor as if it were in fact a small sphere primitive. By applying the correct rotation to counter the view transform, I am able to get world-space normals very easily. The initial value of the normal comes from the (hemi)sphere texture, again using the normalized depth value for the z component, and the point sprite UV remapped from 0..1 to -1..1 for x and y, giving a point on a unit sphere. Because it's all normalized, the fragment shader can be very simple, and just use a couple of passed-in scale factors. Having correct world-space normals allows me to do basically whatever shading I want on them, like the very simple bit of directional diffuse in the image at the top of the page.
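The normal reconstruction and the counter-rotation can be sketched the same way. This assumes the view matrix's upper 3x3 block is a pure rotation (no scale), so its inverse is its transpose; in GLSL that would be transpose( mat3( viewMatrix ) ) * n. It also flips v, since gl_PointCoord's origin is the upper left by default. The vec3/mat3 aliases are stand-ins for the GLSL types.

```cpp
#include <array>

using vec3 = std::array<float, 3>;
using mat3 = std::array<vec3, 3>; // row-major: m[ row ][ col ]

// View-space normal from the point coordinate and hemisphere height.
// Assumes (u, v) comes from gl_PointCoord with its default upper-left origin,
// so v is flipped to make +y point up. Inside the circle this is unit length,
// since x^2 + y^2 + height^2 = 1 when height = sqrt( 1 - x^2 - y^2 ).
vec3 SpriteNormalView ( float u, float v, float height ) {
	return { u * 2.0f - 1.0f, 1.0f - v * 2.0f, height };
}

// Undo the view rotation: for an orthonormal rotation, inverse == transpose.
vec3 ViewToWorld ( const mat3 &viewRotation, const vec3 &n ) {
	vec3 out{};
	for ( int i = 0; i < 3; i++ )
		for ( int j = 0; j < 3; j++ )
			out[ i ] += viewRotation[ j ][ i ] * n[ j ]; // transpose multiply
	return out;
}
```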

Another thing I want to try, only a hop, skip, and a jump away, is using this normal vector to reference a texture map. Because the default way to sample a cubemap is with a direction vector, I think this would be a pretty natural extension of this method. There could be some offset rotation applied on a per-sphere basis, to break up the uniformity. I also think making the point size oscillate a little bit with a sinusoid would make a lot of sense. Additionally, bringing cubemap sampling into the mix could be a way to experiment with image-based lighting, which I have not really investigated very much before.
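A rough sketch of these two ideas, neither of which exists in Vertexture2 yet: rotating the world-space normal by a per-sphere angle before using it as the cubemap lookup direction, and oscillating the point size. The per-sphere angle, amplitude, frequency, and phase are all assumptions; the angle could, for example, be stored in the SSBO alongside each sphere.

```cpp
#include <array>
#include <cmath>

using vec3 = std::array<float, 3>;

// Rotate the world-space normal about +y by a per-sphere angle (assumed to be
// stored per sphere) before using it as a cubemap lookup direction; in GLSL
// the result would be passed to texture( cubemapSampler, dir ).
vec3 RotateAboutY ( const vec3 &n, float angle ) {
	const float c = std::cos( angle ), s = std::sin( angle );
	return { c * n[ 0 ] + s * n[ 2 ], n[ 1 ], -s * n[ 0 ] + c * n[ 2 ] };
}

// Point size that breathes a little over time: base * ( 1 + a * sin( wt + phase ) ).
// A per-sphere phase would keep neighbors from pulsing in lockstep.
float OscillatingPointSize ( float baseSize, float amplitude, float frequency,
		float timeSeconds, float phase ) {
	return baseSize * ( 1.0f + amplitude * std::sin( frequency * timeSeconds + phase ) );
}
```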

Revamped Simulation on the GPU

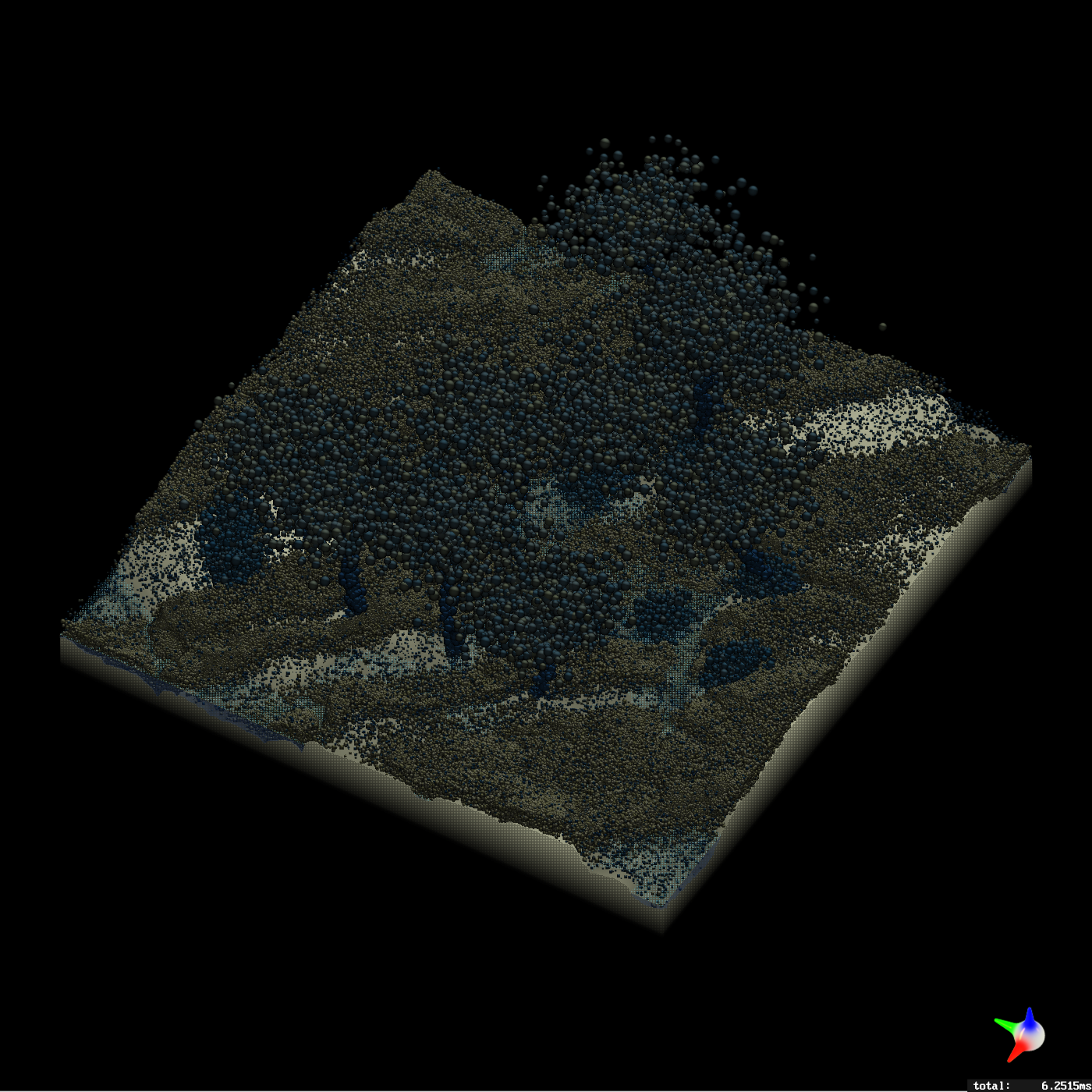

All the movement logic is now implemented on the GPU. The point locations, elevations, radii, colors, etc. for all movers are kept in one big SSBO, with a set of compute shaders to update their positions and the simulation state. In the old implementation, I did all this logic on the CPU as a single-threaded update process. That approach capped out at a couple thousand sim agents, because it had to iterate through some lists in a particularly inefficient way. On the GPU, the simulation only requires a couple of texture reads per agent, and it can now easily handle 20+ million agents at 60fps.
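One way the per-agent data could be laid out, as a sketch: the field grouping below is an assumption, not the actual Vertexture2 layout. Packing fields into 16-byte chunks keeps the C++ struct byte-compatible with a GLSL std430 struct, the usual layout for SSBOs, so the CPU-side initialization can be uploaded directly.

```cpp
#include <cstddef>

// Hypothetical per-agent record for the simulation SSBO, byte-compatible
// with this GLSL std430 struct:
//   struct agent_t { vec2 position; float elevation; float radius; vec4 color; };
// (vec2 at offset 0, floats at 8 and 12, vec4 at 16; stride 32.)
struct alignas( 16 ) agent_t {
	float position[ 2 ]; // x, y on the map
	float elevation;     // height sampled from the heightmap
	float radius;        // sphere impostor radius
	float color[ 4 ];    // rgba
};

static_assert( sizeof( agent_t ) == 32, "must match the std430 struct stride" );
static_assert( offsetof( agent_t, color ) == 16, "color starts at the second 16-byte chunk" );

// Bytes needed for the whole buffer, e.g. for glBufferData / glBufferStorage.
constexpr std::size_t AgentBufferBytes ( std::size_t agentCount ) {
	return agentCount * sizeof( agent_t );
}
```

At 20 million agents, this 32-byte layout would come to 640,000,000 bytes (about 610 MiB) of buffer storage, which is one reason to keep the per-agent record tight.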

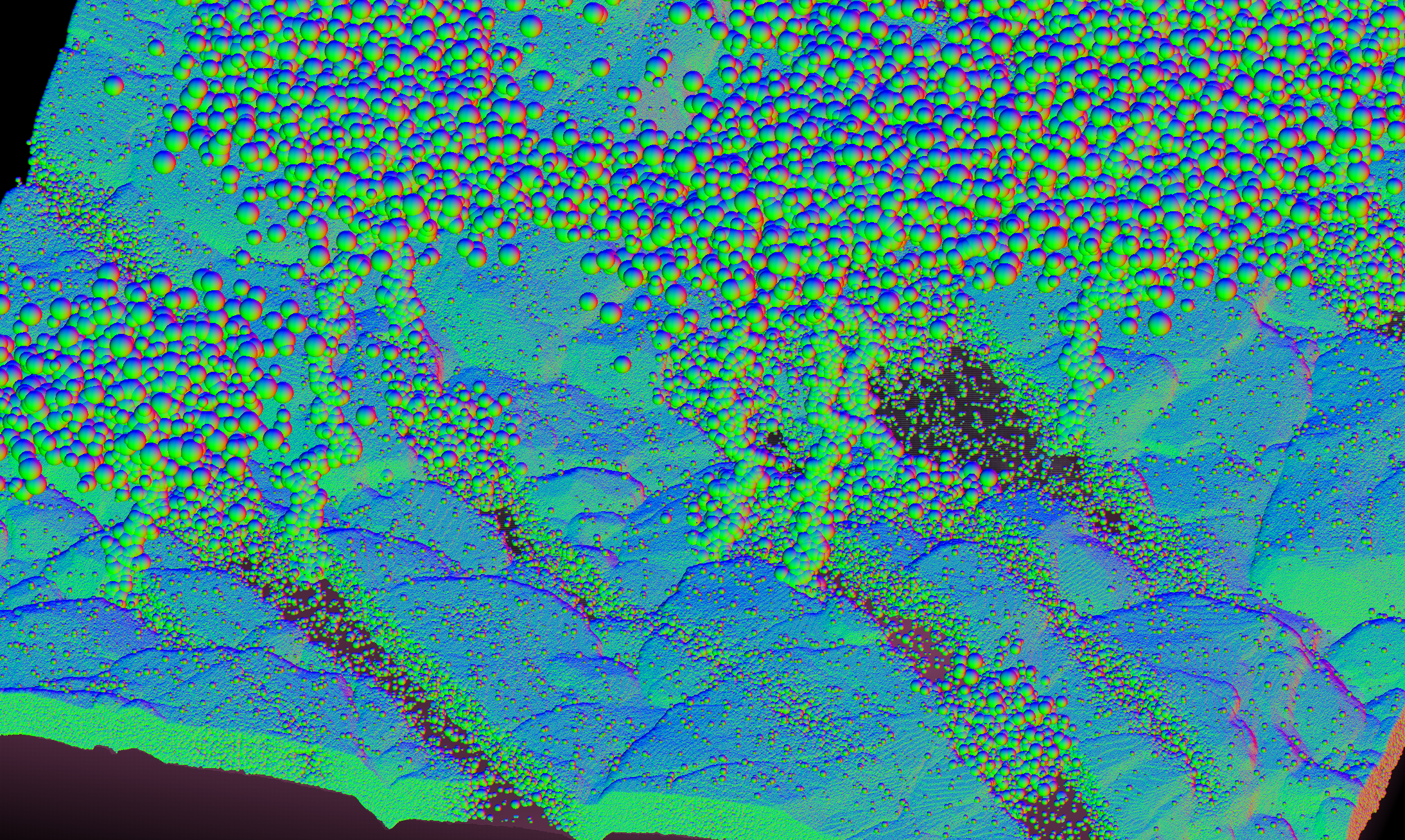

It lacks the interactivity of the original implementation, but that's probably something I could figure out in the future. Currently, there are some obstacles placed in the same manner as in the original implementation, except that this time they are of variable radius, and the distance and direction to the closest obstacle are computed for every pixel in a map, which is then read by the simulation agents. You can see this below: the output and the corresponding sim map, using the red and green channels to indicate the direction to the closest obstacle, and the blue channel to indicate the distance, which goes to zero at the radius of the obstacle. This way, getting the relevant simulation data takes one texture read, without having to iterate through the list of obstacles for every sim agent as was the case before. Another thing I experimented with was setting the movement speed from another map, which keeps a sort of steepness metric by looking at the range of heights in a small area around each pixel.
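Both maps can be sketched as CPU loops. This assumes circular obstacles and float-valued texels (in practice a float texture format, so the signed direction fits without remapping); on the GPU, each loop body would be one compute shader invocation per pixel. The struct and function names are hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <limits>
#include <vector>

struct obstacle_t { float x, y, radius; };
struct texel_t { float r, g, b; }; // rg = direction to obstacle, b = distance

// Per texel: unit direction toward the closest obstacle, and the distance to
// that obstacle's edge (0 at or inside its radius).
std::vector<texel_t> BuildObstacleMap ( int width, int height,
		const std::vector<obstacle_t> &obstacles ) {
	std::vector<texel_t> map( width * height );
	for ( int y = 0; y < height; y++ ) {
		for ( int x = 0; x < width; x++ ) {
			texel_t best{ 0.0f, 0.0f, std::numeric_limits<float>::max() };
			for ( const obstacle_t &o : obstacles ) {
				const float dx = o.x - x, dy = o.y - y;
				const float len = std::sqrt( dx * dx + dy * dy );
				const float edgeDistance = std::max( 0.0f, len - o.radius );
				if ( edgeDistance < best.b ) {
					// Direction is undefined at the exact center; leave it zero.
					best = len > 0.0f ? texel_t{ dx / len, dy / len, edgeDistance }
					                  : texel_t{ 0.0f, 0.0f, 0.0f };
				}
			}
			map[ y * width + x ] = best;
		}
	}
	return map;
}

// Steepness metric: range of heights ( max - min ) in a ( 2k + 1 )^2 window,
// clamping at the map edges.
std::vector<float> BuildSteepnessMap ( int width, int height,
		const std::vector<float> &heights, int k ) {
	std::vector<float> steepness( width * height );
	for ( int y = 0; y < height; y++ ) {
		for ( int x = 0; x < width; x++ ) {
			float lo = heights[ y * width + x ], hi = lo;
			for ( int oy = -k; oy <= k; oy++ ) {
				for ( int ox = -k; ox <= k; ox++ ) {
					const int sx = std::clamp( x + ox, 0, width - 1 );
					const int sy = std::clamp( y + oy, 0, height - 1 );
					lo = std::min( lo, heights[ sy * width + sx ] );
					hi = std::max( hi, heights[ sy * width + sx ] );
				}
			}
			steepness[ y * width + x ] = hi - lo;
		}
	}
	return steepness;
}
```

An agent then reads a single texel at its position: when b gets small, it could steer away along the negated rg direction, and a steepness value could scale its speed.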

Lighting

The cool thing about having this extra data, with the normals and depth, is that you can do lighting calculations just as you would with any other raster data. Instead of a flat quad, as you would typically get from the API point primitive, you have something that behaves for all intents and purposes like a sphere. You can see below some simple Phong lighting with a point light.
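A minimal sketch of per-fragment Phong shading for one impostor. It assumes the world-space normal has already been reconstructed and that the sphere's center and radius are available, so the surface point is center + radius * normal. The ambient, diffuse, and specular weights are arbitrary.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using vec3 = std::array<float, 3>;

float Dot ( const vec3 &a, const vec3 &b ) { return a[ 0 ] * b[ 0 ] + a[ 1 ] * b[ 1 ] + a[ 2 ] * b[ 2 ]; }
vec3 Normalize ( const vec3 &v ) {
	const float l = std::sqrt( Dot( v, v ) );
	return { v[ 0 ] / l, v[ 1 ] / l, v[ 2 ] / l };
}

// Phong intensity for one impostor fragment lit by a point light.
float PhongIntensity ( const vec3 &center, float radius, const vec3 &normal,
		const vec3 &lightPos, const vec3 &eyePos, float shininess ) {
	// Reconstructed surface point: this is what makes the sprite light like a sphere.
	const vec3 p = { center[ 0 ] + radius * normal[ 0 ], center[ 1 ] + radius * normal[ 1 ], center[ 2 ] + radius * normal[ 2 ] };
	const vec3 l = Normalize( { lightPos[ 0 ] - p[ 0 ], lightPos[ 1 ] - p[ 1 ], lightPos[ 2 ] - p[ 2 ] } );
	const vec3 v = Normalize( { eyePos[ 0 ] - p[ 0 ], eyePos[ 1 ] - p[ 1 ], eyePos[ 2 ] - p[ 2 ] } );
	const float nDotL = Dot( normal, l );
	const float diffuse = std::max( nDotL, 0.0f );
	// Reflect the light direction about the normal: r = 2( n.l )n - l
	const vec3 r = { 2.0f * nDotL * normal[ 0 ] - l[ 0 ], 2.0f * nDotL * normal[ 1 ] - l[ 1 ], 2.0f * nDotL * normal[ 2 ] - l[ 2 ] };
	const float specular = nDotL > 0.0f ? std::pow( std::max( Dot( r, v ), 0.0f ), shininess ) : 0.0f;
	const float ambient = 0.1f;
	return ambient + 0.7f * diffuse + 0.2f * specular;
}
```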

Doing more advanced lighting on these is definitely possible. I attempted to figure out how to do shadowmapping, since I have correct depths. I think this is viable; I just need to figure out the graphics API plumbing required. I was getting some state leakage using a separate framebuffer: I think I was basically using the same shader to write both views, and it was writing color to the main color framebuffer. I will need to either pass in a flag to change this behavior or have a separate set of shaders for depth-only rendering vs. the regular rendering and shading code. Because the main pass also needs to do the shadowmapping logic, the two are probably different enough to justify separate sets of shaders. This is something I will return to, as I have been trying to go through some of the more canonical rasterization approaches, like my recent experiments with normal mapping.
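On the plumbing side, the usual way to keep a depth-only pass from writing color is to render into a framebuffer that has only a depth attachment, with glDrawBuffer( GL_NONE ) set, using a shader that only computes the corrected gl_FragDepth. The comparison in the shading pass can then be sketched as below. It assumes the fragment has already been transformed into the light's space and that the light pass wrote impostor-corrected depths; the bias value is an arbitrary placeholder.

```cpp
#include <vector>

// Shadow-map test for one fragment. Assumes shadowMap holds impostor-corrected
// depths rendered from the light, (s, t) are the fragment's light-space texture
// coordinates in [0, 1), and lightDepth is its window depth from the light.
// The bias avoids "shadow acne" from depth quantization.
bool InShadow ( const std::vector<float> &shadowMap, int width, int height,
		float s, float t, float lightDepth, float bias = 0.002f ) {
	if ( s < 0.0f || s >= 1.0f || t < 0.0f || t >= 1.0f ) return false; // outside the light's view
	const int x = static_cast<int>( s * width );
	const int y = static_cast<int>( t * height );
	const float occluderDepth = shadowMap[ y * width + x ];
	return lightDepth - bias > occluderDepth; // something is closer to the light
}
```

Because the impostors write sphere-shaped depths in the light pass too, the shadows they cast would be round rather than flat discs.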

Conservative Depth Optimization

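I have not done proper testing to measure the performance delta from this optimization, but I am pretty confident there is at least some improvement. Conservative depth lets you make a promise to the graphics API about how you will modify the depth result; here, the promise is that if you touch it, you will only ever increase the value (declared in GLSL as layout (depth_greater) out float gl_FragDepth). Writing gl_FragDepth usually prevents the hardware from depth testing before the fragment shader runs, but with this promise and a less-than depth test, a fragment that already fails at the primitive's depth can still be rejected early, since increasing its depth can only make it fail. When I initially implemented this, I subtracted my offset from the point primitive's depth in the fragment shader to get correct depths, which breaks that promise. I don't have a lot of insight into what's happening under the hood, but my understanding is that if I offset the z value of the primitive in the vertex shader, moving it to the front of the sphere, and then add one minus the offset I had been using before, I can make this promise: the depth will only ever be greater than or equal to the point primitive's depth. Again, I'm not sure how significant the gain is, but my understanding is that it is best practice if you do touch the API depth result.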
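The equivalence between the two formulations is easy to check in a small sketch. Depths here are in window-space units (smaller is closer), height is the normalized hemisphere height, and radiusDepth is the sphere radius already converted to depth units; the function names are hypothetical.

```cpp
// Original: the primitive sits at the sphere's center depth, and the fragment
// shader pulls the depth toward the camera (violates depth_greater).
float DepthFromCenter ( float centerDepth, float height, float radiusDepth ) {
	return centerDepth - height * radiusDepth;
}

// Conservative: the vertex shader moves the primitive to the sphere's front,
// frontDepth = centerDepth - radiusDepth, and the fragment shader only ever
// adds ( 1 - height ) * radiusDepth, so gl_FragDepth >= gl_FragCoord.z.
float DepthFromFront ( float frontDepth, float height, float radiusDepth ) {
	return frontDepth + ( 1.0f - height ) * radiusDepth;
}
```

For height = 0.5, a center depth of 0.5, and a radius of 0.25 depth units, both return 0.375; the second starts from the front depth of 0.25 and only adds to it.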

Some More Engine Stuff

This new engine structure in jbDE is working quite well for the first three projects I have implemented in it. I do have some issues managing my startup timers with the new project structure. Over time, I will have to figure out ownership of the timer state, because I'm not getting correct behavior from static variables in a header. This really only affects the startup timing; my per-frame timing still seems to be working correctly. I basically just need to trace through what's happening and fix it. I am excited to be able to return to these projects and extend them with newly implemented engine features whenever I want, and I think this is a big move in the right direction.
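One likely culprit (an assumption, not confirmed against jbDE's code): a namespace-scope static variable defined in a header has internal linkage, so every .cpp file that includes the header gets its own separate copy, and a timer started in one translation unit is invisible from another. C++17 inline variables, or a function-local static inside an inline accessor, give one shared instance instead. A sketch of the accessor approach, with hypothetical names:

```cpp
#include <chrono>
#include <string>
#include <utility>
#include <vector>

// Hypothetical startup-timer registry. Placed in a header as-is, the
// function-local static in StartupTimers() is a single object across all
// translation units (an inline function has one definition program-wide),
// unlike a namespace-scope `static` variable, which gives each .cpp its own copy.
struct startupTimers_t {
	using steadyClock = std::chrono::steady_clock;
	std::vector<std::pair<std::string, double>> entries; // label, milliseconds
	steadyClock::time_point start = steadyClock::now();

	// Record the time elapsed since the previous mark (or construction).
	void Mark ( const std::string &label ) {
		const auto now = steadyClock::now();
		entries.emplace_back( label, std::chrono::duration<double, std::milli>( now - start ).count() );
		start = now;
	}
};

inline startupTimers_t &StartupTimers () {
	static startupTimers_t timers; // constructed once, on first use
	return timers;
}
```

Every child application and the base class would then call StartupTimers().Mark( ... ) and read back the same list of entries.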