Siren: Another Foray into GPU SDF Pathtracing

Once More unto the Breach

This is a new pathtracer project that I intend to use as a way to experiment with more advanced techniques. A friend shared the Global Illumination Compendium with me, and I want to work through it and learn more. I also want to work through the book Physically Based Rendering: From Theory to Implementation, and I think it will be helpful to have an extensible testbed for these techniques.

SDF_Path2 got too complicated and didn't have nearly enough planning in terms of architecture - just getting back into working on it after some time away carried a large amount of overhead, figuring out what the hell was going on with it. It also had significant issues with becoming unresponsive when the tiled update dispatched too many compute jobs per update. The renderer theoretically scales up to the maximum texture size allowed by the graphics API. This project picks up about where the first part of SDF_Path2 left off - before it got into the refraction stuff.

I also redesigned how the tiles are dispatched - now using OpenGL timer queries between each dispatch, to make sure that it doesn't continue to dispatch jobs after the intended frame update time has been exceeded. The goal is to maintain 60fps updates, or at least responsiveness in the imGUI interface when the pathtracing shader becomes heavy. Another thing I may investigate, to get finer control over timing, is the method outlined in this shadertoy example. This method spreads out the update of each tile, amortizing it across multiple dispatches. This is achieved by spacing out each invocation by some N texels on x and y, using an N by N Bayer pattern as an execution order for the updates within the N by N neighborhood.

For example, as shown in the above shadertoy listing, the following shows one possible order of the offset updates in a 4 by 4 neighborhood. This could even be extended to use larger Bayer patterns for larger neighborhoods, where a larger neighborhood means that the full update takes place over more successive dispatches:

    const int bayerFilter[ 16 ] = int[](
         0,  8,  2, 10,
        12,  4, 14,  6,
         3, 11,  1,  9,
        15,  7, 13,  5
    );
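To make the ordering concrete, here is a small C++ sketch of how a dispatch counter could be turned into a texel offset using that table. This is my reading of the idea, not code from the shadertoy or from this project: `Offset`, `bayerOffset` and `texelForInvocation` are hypothetical names, and treating the table as row-major (x as column, y as row) is an assumption.

```cpp
#include <array>
#include <cassert>

// Same 4x4 ordering as the listing above, stored row by row.
constexpr std::array<int, 16> bayerFilter = {
     0,  8,  2, 10,
    12,  4, 14,  6,
     3, 11,  1,  9,
    15,  7, 13,  5
};

struct Offset { int x; int y; };

// For dispatch number `pass`, find which texel inside every 4x4 neighborhood
// gets updated. The pattern repeats every 16 passes.
Offset bayerOffset(int pass) {
    assert(pass >= 0);
    const int step = pass % 16;
    for (int i = 0; i < 16; ++i) {
        if (bayerFilter[i] == step) {
            return { i % 4, i / 4 }; // column, row (assumed row-major table)
        }
    }
    return { 0, 0 }; // not reached: the table is a permutation of 0..15
}

// Texel written by invocation (ix, iy) during the given pass, with
// invocations spaced 4 texels apart on each axis.
Offset texelForInvocation(int ix, int iy, int pass) {
    const Offset o = bayerOffset(pass);
    return { ix * 4 + o.x, iy * 4 + o.y };
}
```

After 16 passes every texel in the neighborhood has been visited exactly once, so a full update of the covered region takes 16 dispatches at one sixteenth of the per-dispatch cost.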

But to be perfectly honest, I don't think this is going to be super useful. It remains to be seen, but it may work as a way to reduce the amount of work done per dispatch. I have an intuition that this might not be super friendly for caches, given the spacing between neighboring invocations' pixels, but I have no real evidence for or against it. It would also somewhat complicate the averaging of pixel samples, but I think that's manageable with the current averaging solution.
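Coming back to the timer-based tile dispatch that is actually in use, the control flow can be sketched on the CPU side roughly like this. It is a stand-in, not the project's code: in the real renderer the elapsed time would come from OpenGL timer queries (for example a GL_TIME_ELAPSED query around each dispatch), whereas here an injectable clock takes its place so the loop can run without a GL context. `dispatchWithinBudget`, `dispatchTile`, `now` and `cursor` are all hypothetical names.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <functional>

// Run tile jobs until the per-frame time budget is spent.
// `dispatchTile` stands in for the compute dispatch of one tile (plus
// whatever wait is needed to read back its timer query).
// `now` is an injectable clock, an assumption made for testability.
// Returns the number of tiles dispatched during this frame.
std::size_t dispatchWithinBudget(
    std::size_t tileCount,
    std::size_t& cursor, // index of the next tile, persists across frames
    std::chrono::microseconds budget,
    const std::function<void(std::size_t)>& dispatchTile,
    const std::function<std::chrono::microseconds()>& now)
{
    assert(tileCount > 0);
    const std::chrono::microseconds start = now();
    std::size_t dispatched = 0;
    // Always dispatch at least one tile so the image keeps converging,
    // then stop as soon as the elapsed time reaches the budget or every
    // tile has been visited once.
    do {
        dispatchTile(cursor);
        cursor = (cursor + 1) % tileCount;
        ++dispatched;
    } while (now() - start < budget && dispatched < tileCount);
    return dispatched;
}
```

Because the check happens after each tile, the budget can be overshot by at most one tile's worth of work, which is the reason for keeping individual tiles small when the shader gets heavy.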

There's a material system implemented in the pathtracing shader which, among other things, will allow for multiple different dielectric materials, assuming they don't overlap - nested dielectrics are a whole can of worms unto themselves. I haven't gotten to testing refractive objects yet, but it works well for distinguishing between different diffuse, specular and emissive materials. So far, I have a few very simple materials implemented, in the style of my previous SDF pathtracers.

The project keeps a few different buffers for rendering - currently two RGBA32F accumulator buffers, way overkill for the application, and one unsigned RGBA8 buffer for presentation of the data each frame. The accumulator buffers are updated in tiles of configurable size, processed asynchronously from the frame update. The tiles are defined by an array of glm::ivec2 values, the order of which is shuffled each update. The first of these accumulators holds RGB color in the first three channels, and the alpha channel holds the current sample count for each pixel. Keeping the count per pixel removes the need for any further synchronization with respect to the sample count. The second accumulator buffer holds the XYZ normal encoded in the first three channels, and a depth value in the fourth channel. The presentation buffer takes the final image result, after tonemapping and other postprocessing, and allows it to be shown on the screen. This will also afford a chance to mess with denoising methods, since normals, depth, and color values will all be available at this stage.
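As an illustration of why a per-pixel count in the alpha channel is enough, here is a minimal C++ sketch of folding one new sample into a color accumulator texel. It assumes the RGB channels store a running mean rather than a running sum, which the description above does not actually specify; `Rgba32f` and `accumulate` are hypothetical names.

```cpp
#include <cassert>

// One texel of the color accumulator: RGB running mean, sample count in a.
struct Rgba32f { float r, g, b, a; };

// Fold one new radiance sample into the texel using an incremental mean.
// Each pixel carries its own count, so tiles can be updated in any order
// and at different rates without a shared sample counter.
Rgba32f accumulate(Rgba32f prev, float r, float g, float b) {
    assert(prev.a >= 0.0f);
    const float n = prev.a + 1.0f; // sample count after this sample
    const float w = 1.0f / n;      // weight of the new sample
    return {
        prev.r + (r - prev.r) * w,
        prev.g + (g - prev.g) * w,
        prev.b + (b - prev.b) * w,
        n
    };
}
```

Starting from a cleared texel (all zeros), the first sample replaces the stored color outright, and each later sample contributes 1/n of the difference.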

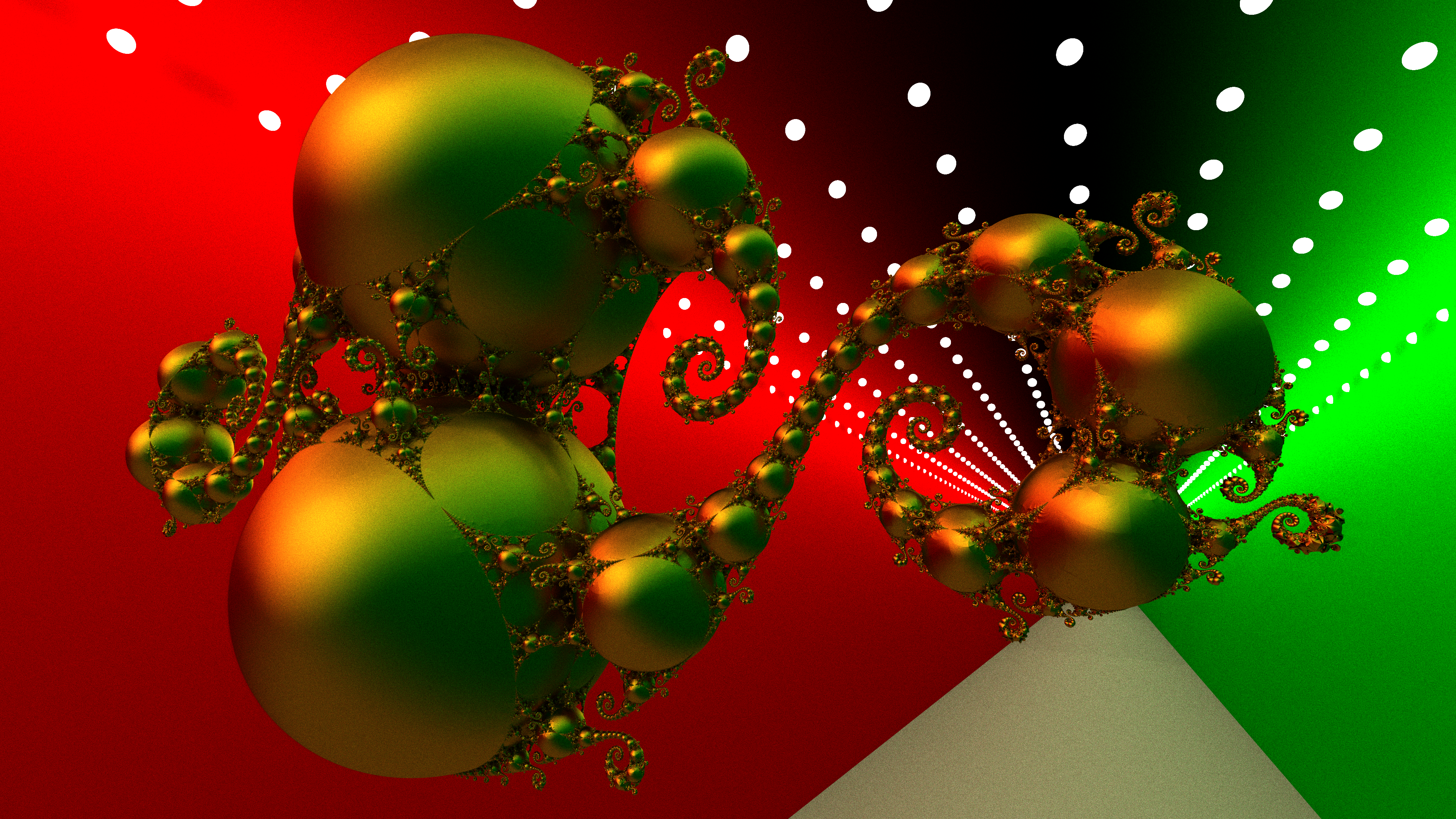

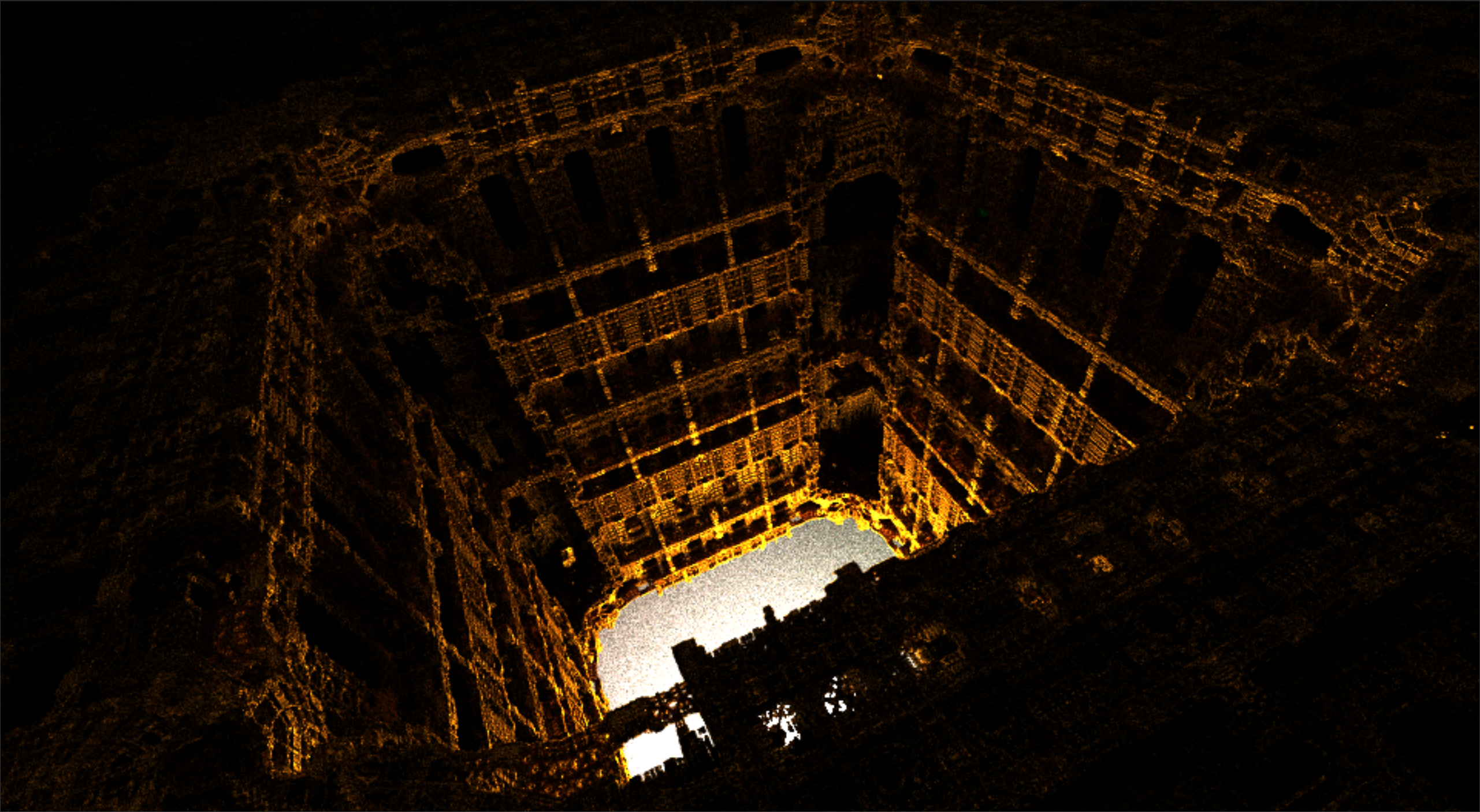

As you can see in the images here, I've been able to use a very small epsilon value during the raymarch loop - this allows the renderer to resolve very fine detail on the surfaces of objects. I've also discovered a method which is apparently common knowledge for a lot of people in raymarching circles - namely, understepping, to compensate for fractals whose distance estimates have a Lipschitz constant greater than 1, meaning they can overestimate the distance to the surface. This method changes the operation performed in the raymarching loop - instead of taking a step size defined by the return value of the distance function, a scale factor (less than 1.0, I've been using 0.618 for golden ratio cool points) is applied to the value before using it to increment the total distance traveled along the view ray. This compensates for artifacts that come from broken distance functions, at the expense of more steps needing to be taken along the way to a scene intersection.
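A minimal C++ sketch of a raymarch loop with understepping, to show where the scale factor goes. The scene here is a plain unit sphere standing in for the fractal, and `Vec3`, `sceneDistance`, `raymarch` and the parameter names are hypothetical rather than taken from the shader.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Stand-in scene: a unit sphere at the origin. A fractal distance
// estimator would go here instead.
float sceneDistance(Vec3 p) {
    return std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z) - 1.0f;
}

// March along origin + t * dir. Returns the hit distance t, or -1.0f if
// nothing was hit within maxSteps or maxDist. `dir` is assumed normalized.
float raymarch(Vec3 origin, Vec3 dir, float understep,
               float epsilon, int maxSteps, float maxDist) {
    assert(understep > 0.0f && understep <= 1.0f);
    float t = 0.0f;
    for (int i = 0; i < maxSteps; ++i) {
        const Vec3 p = { origin.x + dir.x * t,
                         origin.y + dir.y * t,
                         origin.z + dir.z * t };
        const float d = sceneDistance(p);
        if (d < epsilon) {
            return t; // close enough to the surface to count as a hit
        }
        // Understepping: advance by only a fraction of the reported
        // distance, so an overestimate is less likely to skip the surface.
        t += d * understep;
        if (t > maxDist) {
            break;
        }
    }
    return -1.0f;
}
```

With a factor of 0.618 each step closes only about 62% of the reported gap, so against a flat or spherical surface the remaining distance shrinks by a factor of roughly 0.38 per step instead of dropping to zero in one.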

Something I'm trying this time around is packing the values into structs that organize the parameters by category: host, core and post. It simplifies CPU side handling, as there are only three member variables that have to be held by the engine class, and the dot operator is used to reach individual values when creating the imGUI controls. There's a good chance that it will make sense to send these as a uniform buffer or SSBO. The structs contain the parameters for the renderer and postprocessing, and the layout and types of these parameters are static, so it may make sense to pack them prior to sending to the GPU, in place of sending them as individual uniforms.
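A rough C++ sketch of what that grouping could look like. Every field name and default below is a hypothetical placeholder (only the 0.618 understep factor comes from this post), and the padding assumes the GPU-side blocks would be declared with a std140 layout, where a vec4 occupies a 16 byte slot and scalars occupy 4 bytes each.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// CPU-only settings, never uploaded to the GPU.
struct HostParams {
    int   tileSize      = 16;
    float frameBudgetMs = 16.0f;
};

// Parameters for the pathtracing shader, laid out to match a std140 block:
//   vec4 viewerPosition; float raymarchEpsilon; float understep;
//   int maxSteps; int maxBounces;
struct alignas(16) CoreParams {
    float        viewerPosition[4] = { 0.0f, 0.0f, -3.0f, 0.0f }; // w unused
    float        raymarchEpsilon   = 0.0001f;
    float        understep         = 0.618f;
    std::int32_t maxSteps          = 256;
    std::int32_t maxBounces        = 8;
};

// Parameters for the postprocessing pass.
struct alignas(16) PostParams {
    float        exposure    = 1.0f;
    float        gamma       = 2.2f;
    std::int32_t tonemapMode = 0;
    std::int32_t pad         = 0; // keeps the size a multiple of 16 bytes
};

// The three members the engine class would hold.
struct RendererParams {
    HostParams host;
    CoreParams core;
    PostParams post;
};

static_assert(sizeof(CoreParams) == 32, "CoreParams should fill two 16 byte slots");
static_assert(offsetof(CoreParams, raymarchEpsilon) == 16, "scalars follow the vec4");
static_assert(sizeof(PostParams) == 16, "PostParams should fill one 16 byte slot");

// Sanity checks worth running before an upload.
inline void validate(const CoreParams& c) {
    assert(c.understep > 0.0f && c.understep <= 1.0f);
    assert(c.raymarchEpsilon > 0.0f);
}
```

With a layout like this, each struct could be uploaded in a single call such as `glBufferData( GL_UNIFORM_BUFFER, sizeof( CoreParams ), &params.core, GL_DYNAMIC_DRAW )`, and GUI code would reach values as `params.core.understep`.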

More updates on this project will be forthcoming. The next thing I want to get working is at least a simple demo of multiple refractive objects with different indices of refraction per object. My recent experiences with photography have gotten me very interested in the behavior of lenses, and how they can create changes in the bokeh, depth of field, and many other aspects of the produced image. I want to look into parameterizing the camera by focal length and f number, and to mess with emulating physical optical assemblies along the lines of these works by Yining Karl Li.