Model Loading

I had included tinyOBJLoader in NQADE before, back when I was going through the tinyRenderer tutorial series, but I guess it was an older version from 2019. When I looked at the repo, it didn't even have documentation for the callback-based interface I had used last time. Anyways, the new interface is much, much nicer, and makes loading multi-material models far easier than I had anticipated.

What it provides is a really nice interface for OBJ loading built around three nested loops: the outer loop is over the meshes, the middle loop is over the faces in each mesh, and the inner loop is over the vertices in each face. It lets you check whether each vertex attribute exists, and read its value if it does. It's also very easy to determine which material applies to each face, and to get the texcoords for that face's vertices. I was surprised at how easy it was to use, given my previous experience with the library.
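To make that loop structure concrete, here is a minimal sketch of the traversal. So that it compiles without the library, it uses simplified stand-in structs (`IndexT`, `MeshT`, `ShapeT`, `AttribT`, and `Vertex`, all hypothetical) that mirror the tinyobjloader fields it touches: `indices`, `num_face_vertices` and `material_ids` on the mesh, and `vertices`, `normals` and `texcoords` on the attribute arrays. It is not NQADE's loader; with the real library, the attrib and shapes would come from a `tinyobj::ObjReader` via `GetAttrib()` and `GetShapes()`.

```cpp
#include <cstddef>
#include <vector>

// Simplified stand-ins mirroring the tinyobjloader fields used below.
// These are NOT the library's types; real code includes "tiny_obj_loader.h".
struct IndexT { int vertex_index, normal_index, texcoord_index; };
struct MeshT {
  std::vector<IndexT> indices;                  // all faces' vertices, back to back
  std::vector<unsigned int> num_face_vertices;  // vertex count of each face
  std::vector<int> material_ids;                // one material per face (-1 = none)
};
struct ShapeT { MeshT mesh; };
struct AttribT {
  std::vector<float> vertices, normals, texcoords;  // xyz, xyz, uv
};

// Flattened output vertex; attributes that are missing stay zero.
struct Vertex { float px, py, pz, nx, ny, nz, u, v; int material; };

std::vector<Vertex> flatten(const AttribT& attrib, const std::vector<ShapeT>& shapes) {
  std::vector<Vertex> out;
  for (const ShapeT& shape : shapes) {                                  // meshes
    size_t offset = 0;  // start of the current face within mesh.indices
    for (size_t f = 0; f < shape.mesh.num_face_vertices.size(); ++f) {  // faces
      const size_t fv = shape.mesh.num_face_vertices[f];
      for (size_t k = 0; k < fv; ++k) {                                 // face vertices
        const IndexT idx = shape.mesh.indices[offset + k];
        Vertex vert{};
        const size_t p = 3 * size_t(idx.vertex_index);
        vert.px = attrib.vertices[p];
        vert.py = attrib.vertices[p + 1];
        vert.pz = attrib.vertices[p + 2];
        if (idx.normal_index >= 0) {  // negative index: no normal for this vertex
          const size_t n = 3 * size_t(idx.normal_index);
          vert.nx = attrib.normals[n];
          vert.ny = attrib.normals[n + 1];
          vert.nz = attrib.normals[n + 2];
        }
        if (idx.texcoord_index >= 0) {  // negative index: no texcoord
          const size_t t = 2 * size_t(idx.texcoord_index);
          vert.u = attrib.texcoords[t];
          vert.v = attrib.texcoords[t + 1];
        }
        vert.material = shape.mesh.material_ids[f];  // one material per face
        out.push_back(vert);
      }
      offset += fv;
    }
  }
  return out;
}
```

In tinyobjloader, a negative `normal_index` or `texcoord_index` is how a missing attribute is signalled, so those two checks are what "find out if various vertex attributes exist" boils down to.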

I was also looking at assimp, which is a similar library but supports a much wider range of input formats. I got as far as including it in NQADE and getting it to compile, but didn't test it much beyond that. It massively increased the compile time of the project, so I may or may not carry it forward; I need to evaluate its actual loading API against what I have now before deciding. I have also been looking at resources for loading glTF, like tinygltf, because it's a more modern format, and assets like the new Intel Sponza model are being distributed in it.

Multi-Material Models

This had been a sticking point before I had a good way to manage images in NQADE. The image wrapper lets me load all the textures specified in the model file and keep them in an indexed array. I was experimenting with the old version of Sponza, which I got from here. It includes 42 textures (a couple are missing, and some vertices have materials that don't include any textures; I didn't investigate too closely), so drawing the textured model requires some way to handle the different materials. It comes with both diffuse and normal maps.
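One way to build that kind of indexed array is to give every unique texture filename a slot the first time a material references it, and have each material store slot indices instead of names. The sketch below assumes a hypothetical `MaterialDesc` holding diffuse and normal filenames (empty when absent); it is not the interface of NQADE's image wrapper or of tinyobjloader's material type. Materials without a texture get `-1`, which is one possible way to deal with the texture-less materials mentioned above.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical per-material description: the texture filenames it names.
// An empty string means the material has no texture of that kind.
struct MaterialDesc { std::string diffuse; std::string normal; };

// Per-material indices into the shared texture array (-1 = no texture).
struct MaterialSlots { int diffuse = -1; int normal = -1; };

struct TextureTable {
  std::vector<std::string> files;    // each unique filename, to be loaded once
  std::vector<MaterialSlots> slots;  // one entry per material, in input order
};

TextureTable buildTextureTable(const std::vector<MaterialDesc>& materials) {
  TextureTable table;
  std::map<std::string, int> indexOf;  // filename -> position in table.files
  auto intern = [&](const std::string& name) -> int {
    if (name.empty()) return -1;  // material does not use this kind of map
    auto it = indexOf.find(name);
    if (it != indexOf.end()) return it->second;  // already seen: reuse its slot
    const int idx = static_cast<int>(table.files.size());
    table.files.push_back(name);
    indexOf.emplace(name, idx);
    return idx;
  };
  for (const MaterialDesc& m : materials) {
    MaterialSlots s;
    s.diffuse = intern(m.diffuse);
    s.normal = intern(m.normal);
    table.slots.push_back(s);
  }
  return table;
}
```

Loading then happens once per entry in `files`, so a texture shared by several materials is only decoded and uploaded once.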

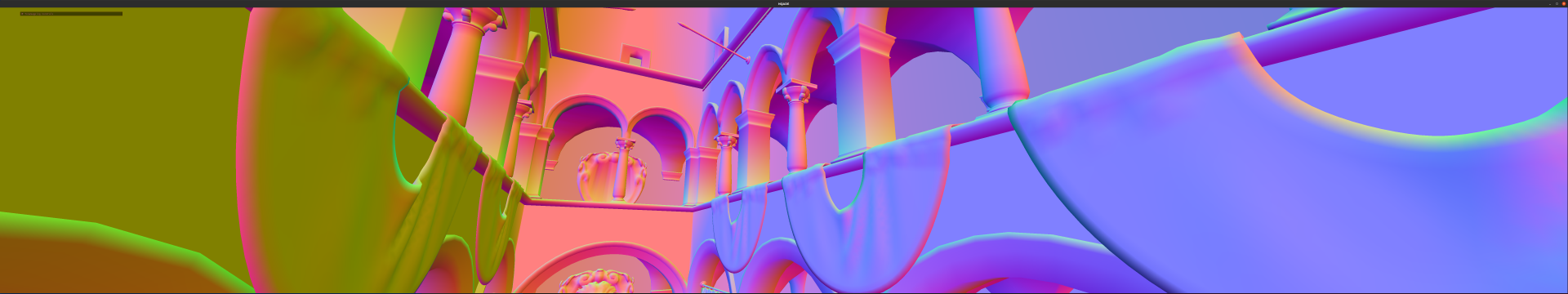

I had the idea of packing all of these into one big array texture and using the third component of the texcoord as an index into the array. This worked really smoothly, and by using a stride of two, I was also able to keep the normal maps in the same array, with each material's diffuse and normal maps in adjacent layers. This does require that all the textures in the array have the same resolution, but some of the textures in the model have different dimensions, and the one for the chain is non-square. My solution was to scale all the square textures up to the maximum dimension (using stb_image_resize, which is an absolute godsend, and has been integrated into my image wrapper). For the non-square texture, I just went ahead and made it square as well. Because the texcoords on the model are normalized, the stretched texture still maps onto the surface correctly; it just takes up a bit more GPU memory. This is the diffuse color only:
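Here is a rough sketch of the same-resolution requirement and the stride-of-two layout. It picks the largest width or height across all images as the common square size and resamples each image to it. For brevity it uses a nearest-neighbor resample as a stand-in for stb_image_resize, which filters properly and is the better choice in practice. `Image`, `commonSquareSize`, `resizeToSquare`, `diffuseLayer` and `normalLayer` are hypothetical names, and putting the diffuse map in the even layer and the normal map in the odd one is an assumption about the ordering.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Minimal RGBA8 image; a stand-in for whatever image wrapper is in use.
struct Image {
  int width = 0, height = 0;
  std::vector<uint8_t> rgba;  // width * height * 4 bytes, row-major
};

// Every layer of an array texture must share one size, so use the largest
// width or height among all inputs as the common square edge length.
int commonSquareSize(const std::vector<Image>& images) {
  int size = 0;
  for (const Image& img : images) size = std::max({size, img.width, img.height});
  return size;
}

// Nearest-neighbor resample to size x size (a simple stand-in for a filtered
// resize such as stb_image_resize). Non-square inputs get stretched, which is
// harmless because the model's texcoords are normalized.
Image resizeToSquare(const Image& src, int size) {
  Image dst;
  dst.width = dst.height = size;
  dst.rgba.resize(size_t(size) * size * 4);
  for (int y = 0; y < size; ++y) {
    const int sy = y * src.height / size;    // source row for this output row
    for (int x = 0; x < size; ++x) {
      const int sx = x * src.width / size;   // source column for this output column
      const size_t s = (size_t(sy) * src.width + sx) * 4;
      const size_t d = (size_t(y) * size + x) * 4;
      for (int c = 0; c < 4; ++c) dst.rgba[d + c] = src.rgba[s + c];
    }
  }
  return dst;
}

// Stride of two: material m's diffuse map in layer 2m, normal map in 2m + 1.
int diffuseLayer(int material) { return 2 * material; }
int normalLayer(int material) { return 2 * material + 1; }
```

On the GPU side, the layer index rides along as the third texcoord component; in GLSL, sampling a `sampler2DArray` with `texture(sampler, vec3(u, v, layer))` selects the layer.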

Normal Mapping

If you've ever touched computer graphics before, you know that the normal vector is central to lighting calculations and a number of other surface properties. It's common for vertices to have normal vectors supplied, the same way they would have vertex colors or texcoords. But since those normals only exist at the vertices and are interpolated across each face, the detail they can show is limited. This is what that looks like:

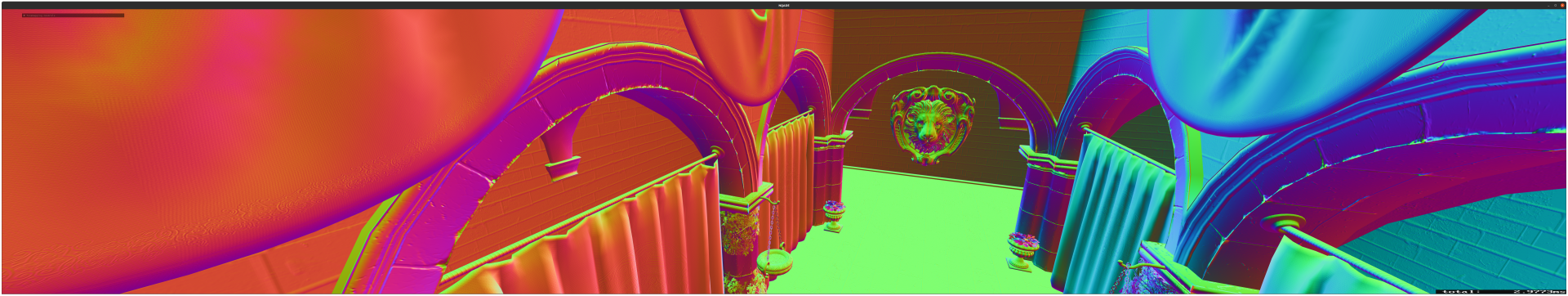
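Those per-vertex normals reach each pixel by interpolation: the rasterizer blends a triangle's three vertex normals using barycentric weights, so nothing finer than the triangles themselves can show up. A minimal sketch of that blend (`Vec3`, `normalize` and `interpolateNormal` are hypothetical helper names):

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 normalize(Vec3 v) {
  const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
  return {v.x / len, v.y / len, v.z / len};
}

// Blend a triangle's three vertex normals with barycentric weights
// (w0 + w1 + w2 == 1), then renormalize, because a weighted blend of unit
// vectors is generally shorter than unit length. Only these three normals
// feed every point on the face.
Vec3 interpolateNormal(Vec3 n0, Vec3 n1, Vec3 n2, float w0, float w1, float w2) {
  return normalize({w0 * n0.x + w1 * n1.x + w2 * n2.x,
                    w0 * n0.y + w1 * n1.y + w2 * n2.y,
                    w0 * n0.z + w1 * n1.z + w2 * n2.z});
}
```

The renormalization matters: blending two perpendicular unit normals halfway gives a vector of length about 0.707, which would darken diffuse lighting if it were used as-is.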

A lot of realtime applications make use of what's called normal mapping, that is, encoding the surface normals into RGB data which is mapped onto each surface like a texture. This requires some more information about the surface, though: you need a set of basis vectors to interpret the three-dimensional data from the normal map. The interpolated vertex normal serves as the positive z axis, and the remaining two basis vectors are the tangent and bitangent, which are calculated at model load time and passed in as another set of vertex attributes. It is also possible to compute the tangent and bitangent directly in the shader, but I did not implement that. Once you have transformed the value from the texture into this basis, you have the final worldspace normal for that surface. You can see how much detail that adds to surfaces:

This is common practice in realtime graphics because of how much detail it can add to surfaces without adding a crazy amount of geometry. In fact, these normal maps are often baked from higher-poly versions of the same model. I haven't done much in the way of lighting calculations on this project, but with the detailed surface normals and the diffuse color, you have a good start for a number of different shading techniques.
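The tangent-space setup described earlier can be sketched as follows, assuming one tangent and bitangent per triangle derived from its edge vectors and texcoord deltas (a common textbook formulation, not necessarily what NQADE does), plus the per-pixel decode that remaps a texel from [0, 1] to [-1, 1] and expresses it in the tangent, bitangent, normal basis. All names here are hypothetical, and the shader-side math is written in C++ so it can be tested.

```cpp
#include <cmath>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }

Vec3 normalize(Vec3 v) {
  const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
  return v * (1.0f / len);
}

struct TangentFrame { Vec3 tangent, bitangent; };

// Load time: one tangent/bitangent pair per triangle. The edges satisfy
//   e1 = du1 * T + dv1 * B
//   e2 = du2 * T + dv2 * B
// and solving that 2x2 system gives T and B.
TangentFrame triangleTangents(Vec3 p0, Vec3 p1, Vec3 p2,
                              Vec2 uv0, Vec2 uv1, Vec2 uv2) {
  const Vec3 e1 = p1 - p0;
  const Vec3 e2 = p2 - p0;
  const float du1 = uv1.x - uv0.x, dv1 = uv1.y - uv0.y;
  const float du2 = uv2.x - uv0.x, dv2 = uv2.y - uv0.y;
  const float det = du1 * dv2 - du2 * dv1;
  if (std::fabs(det) < 1e-12f) {
    // Degenerate texcoords: no unique solution; fall back to the x and y axes.
    return {{1.0f, 0.0f, 0.0f}, {0.0f, 1.0f, 0.0f}};
  }
  const float r = 1.0f / det;
  return {normalize((e1 * dv2 - e2 * dv1) * r),
          normalize((e2 * du1 - e1 * du2) * r)};
}

// Per pixel: remap a normal-map texel from [0, 1] to [-1, 1], then express it
// in the T, B, N basis (N = interpolated vertex normal, the +z axis). If T, B
// and N are in world space, the result is a world-space normal.
Vec3 decodeNormal(Vec3 texel, Vec3 T, Vec3 B, Vec3 N) {
  const Vec3 n = texel * 2.0f - Vec3{1.0f, 1.0f, 1.0f};
  return normalize(T * n.x + B * n.y + N * n.z);
}
```

Each triangle's vectors would be written to its three vertices (or accumulated and averaged across shared vertices) as the extra vertex attributes. Many implementations also re-orthogonalize the tangent against the vertex normal before use; this sketch skips that step.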

Future Directions

This was an exercise in learning some raster graphics stuff. I had never implemented some of these more basic techniques, so it was cool to see them in action. One of the other things I've never actually implemented is shadow mapping, which would be a good fit for a model like this. It basically involves rendering the scene from the light's point of view to record the depth of the nearest surface at each texel, and then, when rendering from the camera's view, comparing each point's depth from the light against that stored value to determine whether it is occluded.
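Shadow mapping isn't part of this project yet, so the following is only a toy CPU sketch of the two passes, under simplifying assumptions: a single directional light pointing straight down the -Y axis with an orthographic projection, and individual points recorded into the depth map in place of rasterized triangles. `ShadowMap` and its members are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <limits>
#include <vector>

struct Vec3 { float x, y, z; };

// Depth map for a directional light shining straight down (-Y), with an
// orthographic projection covering [minXZ, maxXZ] in both x and z.
struct ShadowMap {
  int res;
  float minXZ, maxXZ, lightY;  // lightY: height of the light's view plane
  std::vector<float> depth;    // nearest depth seen per texel

  ShadowMap(int r, float lo, float hi, float ly)
      : res(r), minXZ(lo), maxXZ(hi), lightY(ly),
        depth(size_t(r) * r, std::numeric_limits<float>::infinity()) {}

  // Map a world point to a texel index; false if it falls outside the map.
  bool texel(const Vec3& p, int& index) const {
    const float u = (p.x - minXZ) / (maxXZ - minXZ);
    const float v = (p.z - minXZ) / (maxXZ - minXZ);
    if (u < 0 || u >= 1 || v < 0 || v >= 1) return false;
    const int tx = std::min(res - 1, int(u * res));
    const int ty = std::min(res - 1, int(v * res));
    index = ty * res + tx;
    return true;
  }

  // Pass 1 (from the light): keep the nearest surface per texel. A real
  // renderer rasterizes triangles here; recording single points keeps it short.
  void record(const Vec3& p) {
    int i;
    if (texel(p, i)) depth[size_t(i)] = std::min(depth[size_t(i)], lightY - p.y);
  }

  // Pass 2 (from the camera): a point is shadowed if something recorded in
  // pass 1 is closer to the light. The bias suppresses self-shadowing "acne".
  bool inShadow(const Vec3& p, float bias = 1e-3f) const {
    int i;
    if (!texel(p, i)) return false;  // outside the map: treat as lit
    return (lightY - p.y) > depth[size_t(i)] + bias;
  }
};
```

The bias is the usual fix for a surface shadowing itself because of limited depth precision and texel size; too large a bias instead makes shadows detach from the objects casting them.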

The model loading aspects of this project have a lot of applications. I have already used some of this in Voraldo13 for the mesh voxelizer. Being able to load meshes easily is a really useful tool for computer graphics, and I might look at adding a model wrapper around the tinyOBJLoader code in the near future, since this version was kind of bootstrapped off of my software rasterizer code.