2D Forward Pathtracing

This is a somewhat unfinished demo project, done with a friend who had been trying to do this on Shadertoy. By rewriting it with desktop OpenGL, I was able to show him how to use compute shaders to make what he was doing massively more efficient, with one shader invocation per ray instead of the workarounds he had to use in Shadertoy. It really is remarkable to see how quickly the caustics resolve now.

What is it?

It's a little abstract to think about what this is doing. When you go through Ray Tracing in One Weekend and implement a program that generates a ray for every pixel, tracing that ray out into a scene and bouncing it around until it hits a light source, you are doing something called backwards pathtracing. This is an approximation of the real-world behavior of light, and it's important to be mindful of what that really means. In the real world, a camera relies on the massive scale of the quantities involved - an immense number of photons are emitted from a given light source, so many that enough of them reach the image sensor to expose it properly.

In a computationally modelled environment, we can't begin to consider as many rays as there are photons present even in relatively dim lighting conditions. Instead, we use this backwards recontextualization: looking outwards from a pixel, we let a ray bounce around until it hits a light, and then we consider the amount of light that could be transmitted from the light source back along that path. In the context of 3D pathtraced rendering, this is much more efficient than the naive approach of having the light sources actually act as sources that emit rays, because most rays emitted that way would never reach the camera.

That's not to say that it's the only way to do it. In fact, once you start considering the forwards-traveling rays, you can get some major performance improvements via next event estimation, multiple importance sampling, and bidirectional pathtracing. In practice, these are a little beyond my current level of experience, but what we did for this project recontextualizes the problem of light transport into a 2D world, where we can see some very interesting behavior.

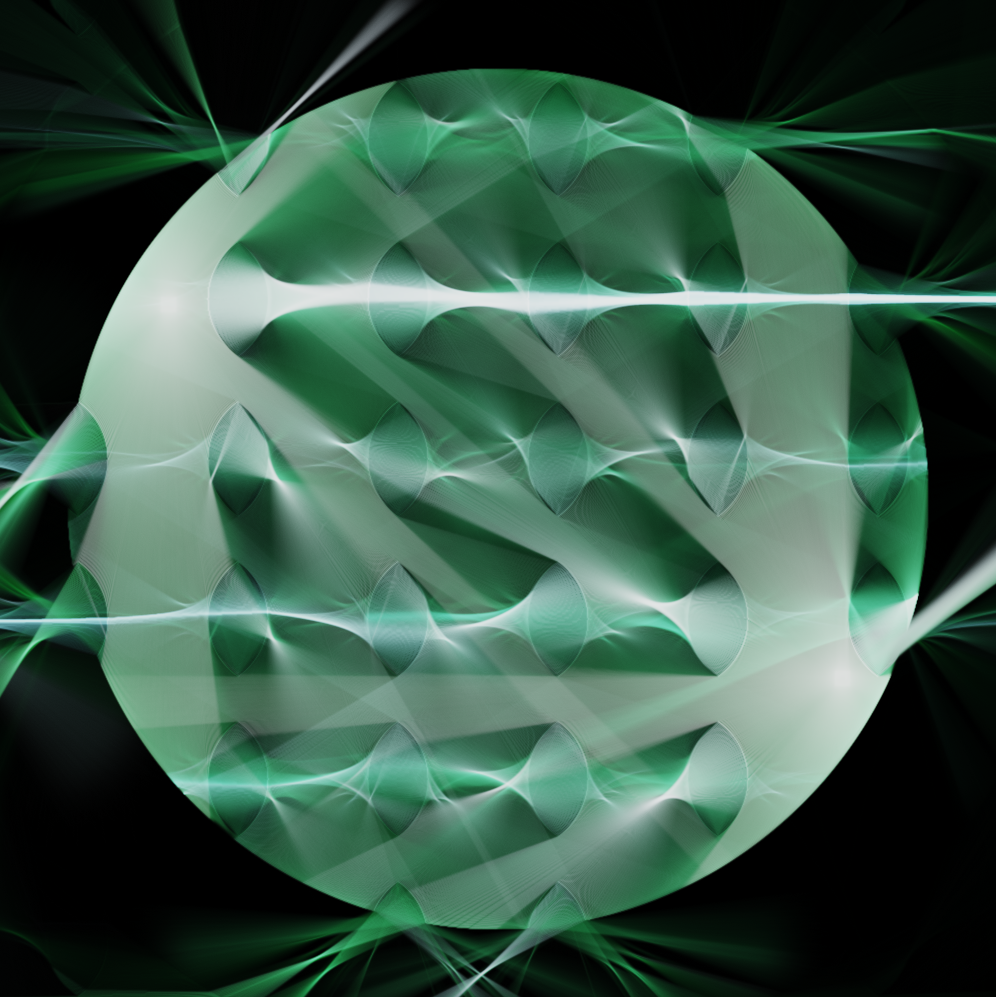

One key difference is immediately apparent, and it is very important to what makes this project unique: the distribution of the rays in the renderer actually models the distribution that would be emitted from the sources. What I mean by this is that we can see these caustics - the focused, bright curves of light in the space between bounces - and they resolve almost immediately. The rays are uniformly distributed in all directions from point sources, which allows them to be gathered and focused into concentrated beams by transmission through interfaces with different indices of refraction, like these lens shapes. Caustics often take a very long time to resolve in the more typical backward pathtracing case. This is because you do not have this concentrated distribution where the rays are emitted from the light source; the rays are instead distributed uniformly with respect to the view, which is not at all the same thing.
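To make the emission and refraction steps a bit more concrete, here is a minimal C++ sketch of the math involved. It is not the project's shader code: the names `Vec2`, `emitDirection`, and `refract2D` are hypothetical, and the even angular spacing is an assumption (a renderer could just as well pick random angles). The refraction follows the usual vector form of Snell's law, the same formula GLSL's built-in `refract` uses.

```cpp
#include <cmath>

// Hypothetical 2D vector type, for illustration only.
struct Vec2 { float x, y; };

static float dot(Vec2 a, Vec2 b) { return a.x * b.x + a.y * b.y; }

// Direction of ray i out of n, spread evenly over the full circle
// around a point source (assumption: deterministic, evenly spaced angles).
Vec2 emitDirection(int i, int n) {
    const float twoPi = 6.28318530718f;
    float angle = twoPi * (float(i) + 0.5f) / float(n);
    return Vec2{ std::cos(angle), std::sin(angle) };
}

// Snell's law in vector form.
//   d   : unit incident direction
//   n   : unit surface normal, pointing against d (dot(d, n) < 0)
//   eta : n1 / n2, index of refraction on the incoming side over the outgoing side
// Returns false on total internal reflection, otherwise writes the
// unit refracted direction to 'out'.
bool refract2D(Vec2 d, Vec2 n, float eta, Vec2& out) {
    float cosI = -dot(d, n);
    float k = 1.0f - eta * eta * (1.0f - cosI * cosI);
    if (k < 0.0f) return false;  // no transmitted ray exists
    float s = eta * cosI - std::sqrt(k);
    out = Vec2{ eta * d.x + s * n.x, eta * d.y + s * n.y };
    return true;
}
```

Because every source emits the same fan of directions, a lens shape bends neighboring rays toward each other, and that bunching is what shows up as a caustic.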

If you have ever done pathtracing before, the rest of the math is not complicated. It's the same thing you would be doing otherwise, but now, the rendering is done by incrementing the amount of light in buffers representing the amount of red, green, and blue light present at each pixel's location. This creates the very strong caustics along the paths where a large concentration of rays travels, in a matter of milliseconds.
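The accumulation step could be sketched on the CPU roughly like this. This is only an illustration under stated assumptions, not the actual compute shader: the `Accumulator` type, the `depositSegment` name, and the simple fixed-step line walk are all hypothetical, and the integer energy values stand in for whatever fixed-point scale a real implementation would pick.

```cpp
#include <algorithm>
#include <atomic>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical accumulation target: one integer counter per pixel per channel.
struct Accumulator {
    int width, height;
    std::vector<std::atomic<uint32_t>> r, g, b;  // counters start at zero

    Accumulator(int w, int h)
        : width(w), height(h),
          r(std::size_t(w) * h), g(std::size_t(w) * h), b(std::size_t(w) * h) {}

    // Add (er, eg, eb) to every pixel visited between (x0, y0) and (x1, y1).
    // Simple fixed-step walk with at most one pixel of travel per step; it can
    // occasionally touch the same pixel twice, which a real line rasterizer
    // would avoid.
    void depositSegment(float x0, float y0, float x1, float y1,
                        uint32_t er, uint32_t eg, uint32_t eb) {
        float dx = x1 - x0, dy = y1 - y0;
        int steps = std::max(1, int(std::ceil(std::max(std::fabs(dx), std::fabs(dy)))));
        for (int i = 0; i <= steps; ++i) {
            float t = float(i) / float(steps);
            int px = int(std::floor(x0 + t * dx));
            int py = int(std::floor(y0 + t * dy));
            if (px < 0 || py < 0 || px >= width || py >= height) continue;
            std::size_t idx = std::size_t(py) * width + px;
            // Atomic increments, so many rays can write concurrently.
            r[idx].fetch_add(er, std::memory_order_relaxed);
            g[idx].fetch_add(eg, std::memory_order_relaxed);
            b[idx].fetch_add(eb, std::memory_order_relaxed);
        }
    }
};
```

Pixels crossed by many rays simply end up with larger counts, so the bright curves fall out of the accumulation itself with no extra caustic-specific logic.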

This was a bug, but it looked kinda cool.

Future Directions

There are some limitations to be considered here as well. Atomic operations on buffers can only use integer types without getting into vendor-specific extensions. We had pretty good luck with integers for representing the light values, but wanted to try using floats for some more flexibility. We talked about doing a Compare-And-Swap ( CAS ) loop to implement atomic writes when drawing lines along the rays' paths, but that hasn't happened yet, as I got busy with other things.

Basically, that process consists of loading a value from the buffer, which would be a uint that you interpret as a float. Given this initial value, you do your math ( here just an increment by the current light intensity ) and write the result back, but only if the value in the buffer at the time of the write is still the same as the one you initially loaded. If the value has changed, you know another write has happened in the meantime, so you take the new value from the buffer and repeat the process. This adds some overhead in order to synchronize the writes to memory. I suspect that this kind of sync issue is where the black lines near surfaces are coming from, but I'm not sure. You have to look pretty closely to see them.
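Since this hasn't been implemented in the project yet, the following is only a sketch of what that loop looks like, written in C++ with `std::atomic` rather than in GLSL. The function name `atomicAddFloat` and the bit-cast helpers are hypothetical. In a shader, the same structure would presumably be built from `floatBitsToUint`, `uintBitsToFloat`, and `atomicCompSwap` on a uint buffer.

```cpp
#include <atomic>
#include <cstdint>
#include <cstring>

// Reinterpret the bits of a float as a uint32_t and back, without changing them.
static uint32_t floatToBits(float f) { uint32_t u; std::memcpy(&u, &f, sizeof u); return u; }
static float bitsToFloat(uint32_t u) { float f; std::memcpy(&f, &u, sizeof f); return f; }

// Atomically add 'amount' to a float stored as raw bits in an integer cell.
// Returns the value that was in the cell before the add.
float atomicAddFloat(std::atomic<uint32_t>& cell, float amount) {
    // Initial load: the uint we interpret as a float.
    uint32_t expected = cell.load(std::memory_order_relaxed);
    for (;;) {
        // Do the math on the value we last saw.
        uint32_t desired = floatToBits(bitsToFloat(expected) + amount);
        // Write back only if the cell still holds 'expected'.
        if (cell.compare_exchange_weak(expected, desired, std::memory_order_relaxed)) {
            return bitsToFloat(expected);
        }
        // Otherwise another write got in first; 'expected' now holds the
        // fresh value from the cell, so loop and redo the math with it.
    }
}
```

The retry only happens under contention, so the overhead is mostly paid at pixels that many rays hit at once, which are exactly the caustic regions.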

Caustics are a really interesting effect to be able to model. They can also be a component of the way lens flares are created - I have been working on Siren again to try to mess with more elaborate lens assemblies with multiple elements and see what kinds of cool stuff is possible with that. I think that something that takes advantage of this forward pathtracing logic with Voraldo could also lead to some very interesting results, extending these crazy caustics and other light behavior to 3D.